Augmented Reality Could Change Health Care—Or Be a Faddish Dud

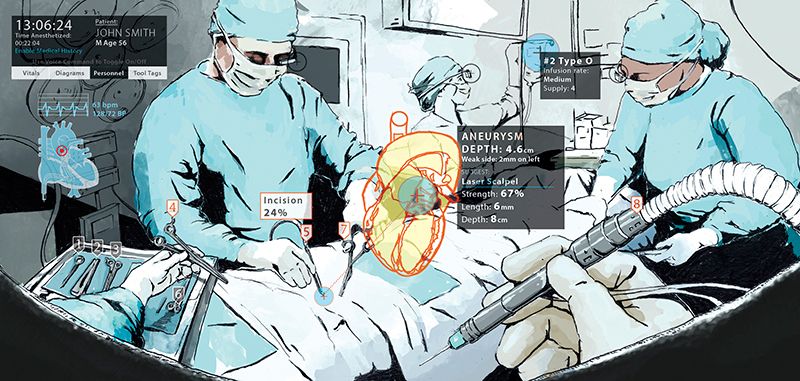

Doctors and engineers at the University of Maryland team up to build a tool that projects images and vital information right above a patient

The patient lies on the exam table, as the surgeon gets ready. She's wearing pastel pink scrubs, holding an ultrasound device, and wearing glasses that look like something out of RoboCop—the '80s version, not the 2014 remake.

The surgeon presses the ultrasound device to the patient's chest, examining his heart. The ultrasound image appears on a laptop screen behind her, but she never turns her head, because she can see the lub-dub, lub-dub of the beating heart right in front of her eyes.

Ok, so the scenario is fake—a demonstration—but the technology, albeit a prototype, is real. Engineers at the University of Maryland's "Augmentarium," a virtual and augmented reality research lab on its College Park campus, designed the tool in concert with doctors from the University of Maryland Medical Center's Shock Trauma Center. The doctors and researchers building this tool—a way to project images or vital information right where a doctor needs it—believe that it will make surgery safer, patients happier, and medical students better.

But there are a number of questions that must be answered before you'll see your own doctor wearing an augmented reality headset.

***

Augmented reality refers to any technology that overlays computer-generated images onto images of the real world. Google Glass is an example of an augmented reality technology. So is the mobile game Pokémon Go.

Most AR in use now is for entertainment purposes, but that is slowly changing. Factories use Google Glass to do quality checks. Caterpillar maintenance crews use AR tablet apps to pull up custom manuals. And, perhaps soon, doctors will use AR to improve patient care.

Sarah Murthi is an associate professor at the University of Maryland School of Medicine, a trauma surgeon at the university's R Adams Cowley Shock Trauma Center, and director of Critical Care Ultrasound. She and Amitabh Varshney, director of the Augmentarium, are working together to create the AR headset.

The tool, which is in such early stages it doesn't have a catchy name, uses an off-the-shelf Microsoft HoloLens and custom software so that a doctor can see images from an ultrasound or from another diagnostic device. (They've also tested it with a GlideScope, a device used for opening a patient's airway so she or he can be put on a ventilator during surgery.) Augmentarium researchers also created voice commands so that the user can control the image hands free.

What this does, according to Murthi and Caron Hong, an associate professor of anesthesiology and a critical care anesthesiologist, is nothing short of revolutionary.

Normally, to view an ultrasound, a doctor needs to look away from the patient and at a screen. "It actually is hard to look away," Murthi says. "Often the screen is not ideally positioned [in the OR], a lot's going on. The screen may be several feet away and off to the side."

Not only does the device improve a doctor's reaction times, she says, it's better for patients.

"People don't like their doctor to look at computers," she says. "It's better for patients if somebody's looking at you." Later, in a separate interview, she added, "I think ultimately all of us hope that this will bring back more of the humanitarian component to the patient-physician relationship."

On the other hand, when Hong is intubating patients, they're often already sedated, so she doesn't need to worry about her bedside manner. But the goggles will improve her work too, she says. She sees a powerful advantage in combining more than one data source into her goggles. "In the critical care arena, where having to turn around and look at vital signs while I'm intubating and giving drugs [takes time], if I had a very convenient, light, holographic monitor that could show me the vital signs in one screen and show me [the patient's] airway in another, it could actually make things a lot more efficient for taking care of patients." She argues that the system Murthi and Varshney are building is so intuitive to use that doctors, who are already used to filtering information from multiple sources, could handle three streams of incoming information on their goggles at once.

***

Not everyone sees AR as the future of medicine.

Henry Feldman, chief information architect and hospitalist at Harvard Medical Faculty Physicians, says most doctors already have enough information at their fingertips.

It's not that he's a Luddite; he was actually one of the first doctors (possibly the first) to use an iPad. Apple made a promotional movie about him. But augmented reality? Doesn't make as much sense, he says. Surgeons don't need a live play-by-play of every moment of a patient's vital signs, for the same reason that your primary care physician probably doesn't want you to print out and hand over a year of your Fitbit data. Your doctor would rather see the long-term trend, and a surgeon, Feldman says, would probably rather have the high-level overview, and trust a nurse to point out any deviations from the norm.

Plus, there's the distraction factor.

"If I'm the patient, I'd rather my surgeon not have sparkly stuff in his vision," says Feldman. "I'm sure there are fields where it's super important, but they're going to be rare and very specific surgeries."

In fact, the "sparkly stuff" problem is one still waiting to be solved. Studies of similar interfaces used by drivers have found that presenting too much information is distracting, and possibly worse than presenting none at all. This information overload could be one of the reasons Google Glass failed on the consumer market. In hospitals, "alarm fatigue" causes medical staff to miss critical alarms because they can no longer filter the important signal from the noise. Could that happen with AR goggles?

That's one of the questions Murthi and Varshney are hoping to answer. Murthi herself has worn the device and tested it with volunteer "patients," but it hasn't been used in a real-life clinical care setting yet. They're looking at testing the goggles on med students, to see if they can adapt to using the system—and if it actually makes them better at their jobs. They're hoping the hardware can get smaller and lighter.

To Murthi, Varshney and Hong, this is just the beginning. The tool could be used to teach students, letting them see what the doctor sees (or letting a doctor see what a student is seeing). It could be used with remote medicine, so an expert in a hospital thousands of miles away could see through a local doctor or battlefield medic's eyes.

"That we have such an interface to even fathom the thought that we could actually do this" is amazing, Hong says.

"Medicine is not very technical, on some level," Murthi says. This headset might just change that.