Human Beats AI in a Drone Race, But Just Barely

In the long term, bet on the machine.

The human-versus-robot races have just begun, and so far the humans are ahead, but only by the thinnest of margins. In October, inside a warehouse at NASA’s Jet Propulsion Laboratory (JPL), a new vision-based navigation system was put through its paces in a race against the clock using racing-style quadcopters. The contest pitted a human pilot, Ken Loo of the Drone Racing League, against a quadcopter controlled by an artificial intelligence program designed by JPL. That program made use of Google’s Tango software, and together the two allowed the JPL aircraft to fly autonomously.

The system used multiple cameras to work out where it was, building highly detailed internal maps of everything it saw, a capability that is also useful for augmented reality, among other things.

Whereas Loo needed several practice laps to become familiar with the course, JPL’s AI-equipped quadcopter needed only one, during which it recorded the course with its machine-vision cameras. JPL engineers used that data to build a map, and then used the map to chart a flight path for the drone.

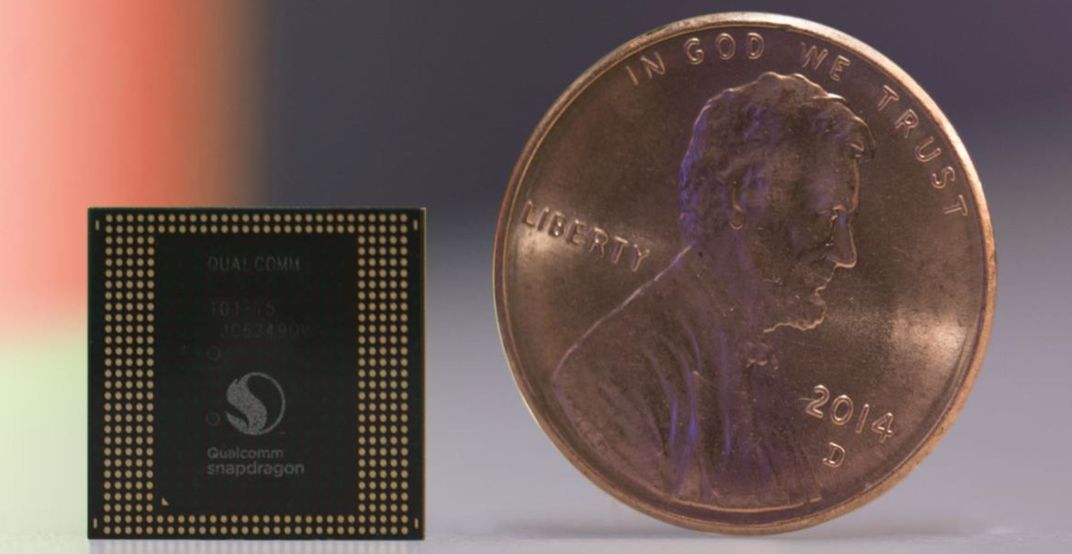

Using forward- and downward-looking cameras to track its progress along the course map, the JPL quadcopter was able to determine its orientation and location without GPS or human guidance, relying for computation solely on the processor that drives Tango, Qualcomm’s Snapdragon.

Although smaller than a penny, the Snapdragon “system-on-a-chip” can simultaneously handle WiFi, GPS, cellular signals, video, and audio. But GPS signals are so weak that they typically can’t be received reliably indoors, and GPS is not accurate enough to safely steer vehicles along complex routes. That is why most GPS-guided vehicles carry other sensors as well, machine-vision cameras being just one option. (Self-driving cars also rely on other sensors, such as radar or lidar.)

The human-vs-AI race wasn’t just for fun: inexpensive self-navigating drones could have a significant impact on industry. Imagine an AI-equipped drone zipping through a massive warehouse on its way to a specific package, say, a bag of coffee. Using machine vision, the drone could quickly locate the coffee, pick out the bag it needs, carry it to an appropriately sized box, and send it on its way to the customer, avoiding other drones the whole time. With enough battery power, the drone could leave the warehouse and fly its cargo to a coffee shop, a home, another warehouse, or a truck parked alongside a road. And there’s no reason the delivery process couldn’t be reversed. Because Snapdragon supports WiFi and cellular networks, the drone’s mission and ultimate destination could even be updated in flight.

As for the outcome of the race: once he had learned the course well, Loo won with an average lap time of 11.1 seconds, versus 13.9 seconds for the AI. But nobody expects it to take long for the AI to pull ahead. Humans get tired, and their brains aren’t getting any better. Algorithms don’t tire, and they are constantly being improved. If you have to put money on the result, bet on the drone.

Correction 12/13: JPL wrote the relevant artificial intelligence program, which makes use of, but is separate from, Google’s Tango software. The version above has been corrected; we apologize for the error.