The Key to Future Mars Exploration? Precision Landing

The science team on NASA’s next expedition to the Red Planet knows exactly where to go.


Katie Stack Morgan, deputy project scientist for NASA’s Mars 2020 mission, pulls up a photo of where the rover is headed: the western rim of Jezero Crater. Billions of years ago, a river filled that basin, creating a delta and what is today a dry lakebed. It’s an exciting destination, if, like the science team at NASA’s Jet Propulsion Laboratory (JPL), you’re on a particular quest. “That is a great place to go and search for life,” she says.

There’s a neon green ring on the image too: That’s the ellipse encompassing all the spots their rover might land. Most of the ring lies in the lakebed, but because Mars landing is still not an exact science, it also includes more treacherous terrain, like the rocky delta and a bit of the crater rim, which rises as much as a kilometer high.

Tracing a finger on the computer screen, Stack Morgan maps her desired path. Ideally, they’ll land in the flats and drive uphill, so scientists can “read the rocks” from oldest to youngest. It’s like reading a book, she says: Skipping to the end might be satisfying, but context is what makes it most exciting.

First stop: the delta. “That’s the juicy spot,” she says, because it would have built up lake sediments and perhaps organic compounds. Scientists think life would develop—and fossilize—in quiet, nutrient-rich water, away from the tumult of a river.

Then they would climb to the “bathtub ring,” the crater’s inner margin, which may have had warm, shallow waters—another good place to look for life. Finally, they would ascend to check out the very oldest rocks in the crater wall, caching rocks along the way for a future sample-return mission. “When those samples come back,” she says, “they are going to feed a generation of Mars scientists.” (See “Return from a Martian Crater,” Oct./Nov. 2019.)

But for Allen Chen, the mission’s entry, descent, and landing lead, this will require some delicate footwork. “While the scientists love things like cliffs and scarps and rocks—those are the science targets for them—that’s death for me,” he says. Jezero is full of the dangers he would most like to avoid: rocks (too sharp), slopes (a rollover risk), and meter-high dunes the team calls “inescapable hazards” (sand traps).

Therein lies the trade-off that has bedeviled both Mars and moon landings since the very beginning: Landing on an open plain is safer, but scientifically kind of dull. On past missions, Chen says, NASA wanted each site “to be a parking lot.”

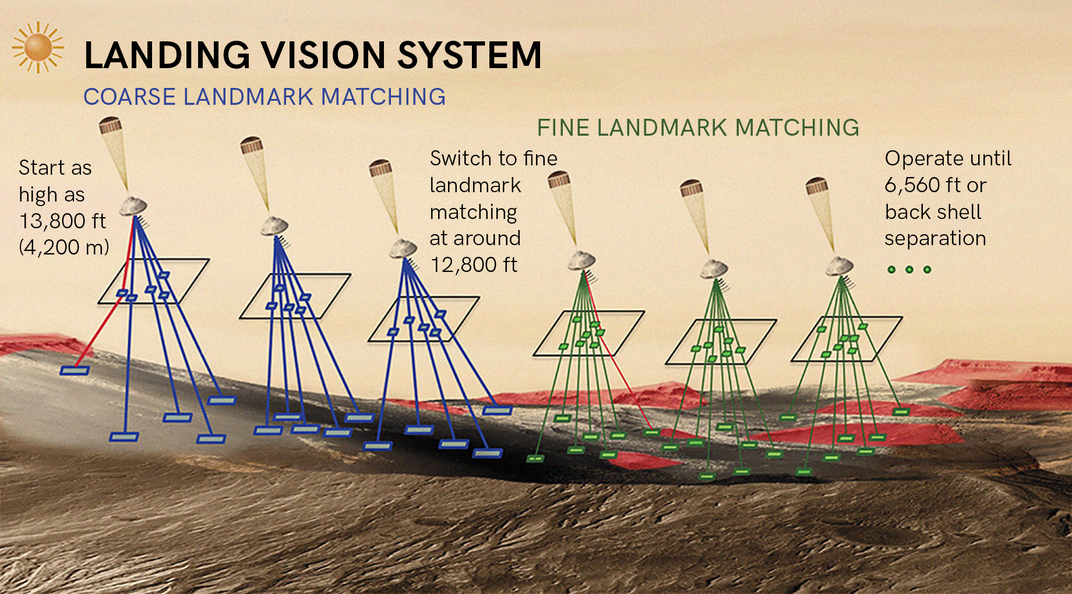

To turn Jezero, once a no-go, into a landing pad, the team is relying on terrain-relative navigation, or TRN. The technology, which JPL researchers have been working on since 2004, enables more precise landings by giving the vehicle a visual landing system. Using a camera, it scans the ground for landmarks, compares those images to onboard maps, and estimates its position. Tie this to related advances in hazard detection and avoidance, and now they have a better shot at not landing in a sand trap.

With better precision, says Chen, they can tolerate more hazards in the ellipse, which can also be smaller, and closer to the juicy spots Stack Morgan wants to study. Ultimately, Chen says, TRN means “we can be near those science targets—those death hazards to me—and not have to drive years to get there.” Just like on Earth, drive time means fuel and money. And you don’t want to know how much it costs to drive in space.

Before the rover can roll, the spacecraft must first survive a descent that, since the Curiosity rover’s 2012 landing, has been called the “seven minutes of terror.” After the capsule speeds through the Martian atmosphere, an enormous parachute inflates to slow it down, the heat shield separates from the capsule, and the rocket-powered “sky crane” hovers to lower the rover, wheels down, before cutting the cables and rocketing away to crash somewhere else.

For most of this period, the vehicle won’t have detailed information about where it is. Mars missions, Chen says, rely on NASA’s Earth-based Deep Space Network until the end of the cruise phase. He compares it to driving while looking in the rearview mirror.

But once entry begins, the vehicle navigates by dead reckoning. It’s got an inertial measurement unit (IMU) that tracks acceleration and angular rates, but no visual way to scan for landmarks. “At that point the vehicle doesn’t have a way to figure out where it is,” says Chen. “It’s still buttoned up in its aeroshell and we’re about to go screaming into the atmosphere.”
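To see why dead reckoning drifts, here is a minimal one-dimensional sketch in Python; it is an illustration, not flight software. It assumes a vehicle coasting with zero true acceleration and an accelerometer with a small constant bias (the 0.001 m/s² bias and the 100 Hz sample rate are hypothetical values), then integrates the readings twice. Because the bias is integrated twice, the position error grows roughly as ½·b·t².

```python
def dead_reckon(accels, dt, x0=0.0, v0=0.0):
    """Integrate 1-D acceleration samples (m/s^2) into position (m) and velocity (m/s).

    Semi-implicit Euler: update velocity first, then advance position
    using the new velocity.
    """
    x, v = x0, v0
    for a in accels:
        v += a * dt
        x += v * dt
    return x, v


DT = 0.01          # hypothetical IMU sample period: 100 Hz
DURATION = 420.0   # seconds, roughly the "seven minutes of terror"
N = round(DURATION / DT)

# Truth: zero acceleration (a deliberate simplification of a real descent).
true_accels = [0.0] * N
# What a biased accelerometer would report for the same motion.
BIAS = 0.001       # m/s^2, hypothetical constant bias
measured_accels = [a + BIAS for a in true_accels]

x_true, _ = dead_reckon(true_accels, DT)
x_est, _ = dead_reckon(measured_accels, DT)
error = x_est - x_true

print(f"position error after {DURATION:.0f} s: {error:.1f} m")
print(f"0.5 * b * t^2 approximation:     {0.5 * BIAS * DURATION**2:.1f} m")
```

With these made-up numbers the error reaches roughly 88 meters over seven minutes. Bias is only one contributor: uncertainty in the starting position and velocity, the “we think you’re here” handed over when the bell goes off, adds its own error, and errors like these are what TRN is meant to correct.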

Chen says it’s like asking a blindfolded person to find his way across a room based only on a description of how he was positioned in the doorway. “We tell the vehicle, ‘Well, when the bell goes off, we think you’re here, and you’re going in this direction, about this fast,’ ” he deadpans. “Good luck!”

With so much uncertainty, Chen says, after heat shield separation, “we could be off in our position in latitude and longitude space by up to about three kilometers. That’s not so great if you want to know where you are precisely, and dodge craters.”

The Apollo series of crewed missions to the moon had a solution: Give the astronauts a map and have them look out a window. But Mars 2020 is people-free, and the landing must unfurl without help from Mission Control, because there’s more than a 10-minute communications lag with Earth. Swati Mohan, lead guidance, navigation and control systems engineer for Mars 2020, describes landing this way: “Because of the delay, it’s already done it by the time you get the signal that it started.” TRN fills in this gap by giving the vehicle its own camera and map.


Mohan and Andrew Johnson, the Mars 2020 guidance, navigation, and control subsystem manager, have gowned up and are standing in a clean room in front of the Lander Vision System—the eyes and brain of the navigation technology. (They’ve recently finished 17 test flights over the Mojave, so this version is rigged up to fly on a helicopter.)

The system’s “eyes” are the landing camera. “It’s mounted onto the bottom of the rover, so it actually takes pictures of the terrain as we come down,” says Mohan, pointing at the left side of the rig. The brain—called the Vision Compute Element—processes those images. On Mars 2020, it will be nestled in the rover’s belly. Today, it’s sitting on a shelf, looking like an oversize hard drive.

This computer’s job is to swiftly compare the images of the approaching ground with onboard maps previously stitched together using photos shot by the Mars Reconnaissance Orbiter (MRO), which has been orbiting the planet since 2006. It correlates the two sets, looking for landmarks. But “landmarks” doesn’t mean rocks or craters. It means tiny pixel patterns, gradients of dark and light that are imperceptible to the human eye but can be identified by algorithm. Spotting these landmarks, while also using IMU data, will let the lander find its position on the map.
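The matching step can be illustrated with normalized cross-correlation (NCC), one common way to score how well a patch of camera image lines up with a region of a map. This is a toy Python sketch under stated assumptions, not the Lander Vision System’s actual algorithm: the grids are tiny, the “map” is random numbers, and the `min_score` threshold is a hypothetical stand-in for the kind of reliability check that lets a system discard an estimate it doesn’t trust. NCC ignores overall changes in brightness and contrast, which is why the simulated camera patch below can be dimmer than the map and still match.

```python
import math
import random


def ncc(patch, window):
    """Normalized cross-correlation of two equal-size 2-D grids, in [-1, 1].

    A score of 1 means the brightness patterns match up to a positive gain
    and a constant offset (e.g., a different camera exposure).
    """
    a = [p for row in patch for p in row]
    b = [w for row in window for w in row]
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den > 0 else 0.0  # featureless patch: no information


def locate(patch, map_img, min_score=0.8):
    """Slide the patch over the map; return (row, col, score) of the best match.

    Returns None when even the best score falls below min_score, i.e. the
    estimate is discarded as unreliable (min_score is a hypothetical value).
    """
    ph, pw = len(patch), len(patch[0])
    best = None
    for r in range(len(map_img) - ph + 1):
        for c in range(len(map_img[0]) - pw + 1):
            window = [row[c:c + pw] for row in map_img[r:r + ph]]
            score = ncc(patch, window)
            if best is None or score > best[2]:
                best = (r, c, score)
    if best is None or best[2] < min_score:
        return None
    return best


# Toy "orbital map": 12x12 random brightness values (a stand-in for orbital imagery).
rng = random.Random(0)
map_img = [[rng.random() for _ in range(12)] for _ in range(12)]

# Toy "descent image": the 4x4 piece at row 5, col 7, dimmer and offset.
patch = [[0.5 * v + 10 for v in row[7:11]] for row in map_img[5:9]]
print(locate(patch, map_img))
```

The best match comes back at row 5, column 7 with a score of essentially 1. A completely featureless patch, by contrast, scores 0 everywhere and `locate` returns `None`: the sketch refuses to guess rather than report a bad position.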

Practice shots at a Mars landing are vanishingly rare, so the team is testing their system using computer simulations that model parameters like trajectories, dust, and solar illumination levels. They are also flying their gear over the best Earth analogs they can locate. “It’s very hard to find things that look like Jezero Crater,” says Johnson; few places on Earth are as vast and treeless. They’ve ended up using desert mesas that are steeper and more rugged than Jezero, a way to over-train. Other than a bug that caused the system to reset when they flew too close to the edge of the map, he says, “It was a huge success.”

Specifically, Johnson says, they wanted to check that their estimated position would be off from the map by no more than 40 meters (about 130 feet). When they tested landing conditions similar to what they expect from Mars 2020, they beat their margin by half—the error was less than 20 meters. When they tested under more demanding conditions, like areas with high terrain relief or different illumination levels, they stayed within their original margin. And under the most challenging conditions of all, the system discarded estimates it considered unreliable and restarted its calculation. Johnson believes this is a good indicator that the system can recover if faced with unexpected conditions.

Meanwhile, on the other side of the JPL campus, scientists have been creating Jezero hazard maps, marking rocks they can see from orbit and modeling smaller ones they can’t, plus those slopes and sand traps. They will use these to make a “safe targets map,” which breaks the terrain down into “pixels” 10 meters on a side, each scored according to its hazard level. Before launch, they’ll load this onto the spacecraft.

The Lander Vision System will activate once the vehicle is about 4,200 meters above the surface. After its back shell comes off at about 2,300 meters, the vehicle will use TRN data to gauge its position and will autonomously select the safest nearby pixel from the targets map. Then, down it will come.
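Selecting “the safest nearby pixel” can be sketched as a search over a hazard grid. The Python below is a simplified illustration: the hazard scale (lower is safer), the divert radius, and the rule of breaking ties by distance are assumptions made for this example, not the mission’s actual selection logic, which would also have to weigh fuel and whether the vehicle can physically reach each spot.

```python
import math

PIXEL_M = 10.0  # cell size: 10 meters on a side


def select_target(hazard, est_rc, divert_m):
    """Return the (row, col) of the lowest-hazard cell within divert_m meters.

    hazard:   2-D list of scores; lower is safer (hypothetical scale).
    est_rc:   cell where the vehicle would touch down without diverting.
    divert_m: how far the vehicle can move sideways (hypothetical value).
    Ties on hazard are broken by distance, preferring the shorter divert.
    """
    r0, c0 = est_rc
    best_key, best_cell = None, None
    for r, row in enumerate(hazard):
        for c, score in enumerate(row):
            dist = PIXEL_M * math.hypot(r - r0, c - c0)
            if dist > divert_m:
                continue  # out of reach
            key = (score, dist)
            if best_key is None or key < best_key:
                best_key, best_cell = key, (r, c)
    return best_cell


hazard = [
    [3, 3, 2, 0, 3],
    [3, 5, 5, 1, 3],
    [0, 5, 9, 5, 3],
    [3, 5, 5, 5, 0],
    [3, 3, 3, 3, 3],
]
print(select_target(hazard, (2, 2), divert_m=25.0))
print(select_target(hazard, (2, 2), divert_m=15.0))
```

Here the undiverted landing point sits on the most hazardous cell. With 25 meters of divert capability the sketch moves to the nearest zero-hazard cell, 20 meters away at (2, 0); with only 15 meters it settles for the best cell in its immediate neighborhood, (1, 3).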

Mohan will be watching from Mission Control, and Johnson in the entry, descent, and landing war room, hoping to receive a tone—a specific radio frequency—indicating that the craft survived touchdown. Then, a camera mounted higher up on the vehicle will send back a photo. (The team is hoping to see ground and not sky, proof the vehicle’s not upside down or tilted.) By the time the MRO circles overhead to photograph the rover, NASA should know exactly where it landed.


TRN is also being researched by private companies working to send spacecraft to the moon. Among them is Pittsburgh-based Astrobotic Technology, which is developing TRN for its Peregrine lander. Fourteen groups have purchased space on Peregrine to carry everything from scientific instruments to time capsules to personal mementos.

“Our business is to make space and the moon accessible to the world as a whole,” says Astrobotic principal research scientist Andrew Horchler. The company plans for Peregrine to fly on routine missions to many lunar sites, landing within 100 meters of each spot. “Terrain-relative navigation is an enabling technology for this,” says Horchler. “It will enhance the overall reliability of landing, the precision of the landing, and the actual spaces we can feasibly get to on the moon.”

The frame of the Peregrine lander looks a bit like a Space Age coffee table: an elegant, X-shaped aluminum-alloy structure on four spindly legs, topped by a solar panel. Four payload tanks can be attached, one to each side of the craft. The tanks are shaped like golden pill capsules and carry a combined 90 kilograms of experiments or supplies. The entire craft is 1.9 meters tall and 2.5 meters wide.

Astrobotic’s work on TRN received funds from NASA’s Tipping Point program, which awards money to bring commercial space technologies to market. Additionally, Peregrine’s planned 2021 mission to the moon’s Lacus Mortis region is affiliated with NASA’s Commercial Lunar Payload Services (CLPS) program. This public-private partnership will deliver gear ahead of the agency’s planned 2024 Artemis landing, which will send astronauts to the lunar south pole. But landing on the moon will be somewhat different from landing on Mars.

First, lunar missions face a photography challenge. Mars 2020’s rich maps are courtesy of the Mars Reconnaissance Orbiter. Because it flies a sun-synchronous orbit, the MRO always passes overhead at about 3 p.m. local time, so it catches the same shadows on every pass. Sharp, consistent shadows are important when you’re trying to map light-and-dark landmarks a TRN system can find. Mars 2020 is conveniently scheduled to land at 3 p.m., so what its camera sees should easily match its maps. (As Chen puts it: “It’s always kind of 3 p.m. on Mars to me.”)

The moon has the Lunar Reconnaissance Orbiter, but that spacecraft is on a polar orbit, and traces a new longitude line on each pass. “That point in time and view angle will almost certainly not correspond to what our lander will see,” says Horchler. “This is even more a factor at the poles of the moon, where the shadows are very dynamic, and even small features cast very long shadows.”

This variability affects the way private companies headed to the moon make their maps for TRN. Astrobotic and its competitor Draper, a fellow CLPS participant based in Cambridge, Massachusetts, are creating synthetic landing images, modeling what the surface will look like at their intended landing times and spots. They build computerized terrain models, then light them based on factors like where the craft will be and how they expect the moon and sun to be aligned.
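Lighting a terrain model can be sketched with Lambertian shading, a simple model in which a surface’s brightness is proportional to the cosine of the angle between its surface normal and the direction to the sun. This Python toy is an assumption-laden illustration, not Astrobotic’s or Draper’s renderer: it handles only whether each slope faces toward or away from the sun and ignores cast shadows, which Horchler notes are long and dynamic near the lunar poles and which a real pipeline would need to model.

```python
import math


def sun_vector(elevation_deg, azimuth_deg):
    """Unit vector toward the sun; x points east, y north, z up.

    Azimuth is measured clockwise from north (90 degrees = due east).
    """
    el, az = math.radians(elevation_deg), math.radians(azimuth_deg)
    return (math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), math.sin(el))


def shade(height, spacing, sun):
    """Lambertian brightness for the interior cells of a height grid.

    height[r][c] is elevation in meters; column index increases eastward and
    row index increases southward (a convention chosen for this sketch).
    Border cells are left at 0. Cast shadows are NOT modeled.
    """
    rows, cols = len(height), len(height[0])
    img = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Central-difference slopes in the east (x) and north (y) directions.
            dzdx = (height[r][c + 1] - height[r][c - 1]) / (2 * spacing)
            dzdy = (height[r - 1][c] - height[r + 1][c]) / (2 * spacing)
            # Normal to the surface z = f(x, y) is (-dz/dx, -dz/dy, 1).
            nx, ny, nz = -dzdx, -dzdy, 1.0
            norm = math.sqrt(nx * nx + ny * ny + nz * nz)
            cos_incidence = (nx * sun[0] + ny * sun[1] + nz * sun[2]) / norm
            img[r][c] = max(0.0, cos_incidence)  # facing away from the sun: dark
    return img


flat = [[0.0] * 5 for _ in range(5)]
east_facing = [[-float(c) for c in range(5)] for _ in range(5)]  # drops 1 m per cell eastward
west_facing = [[float(c) for c in range(5)] for _ in range(5)]

low_eastern_sun = sun_vector(elevation_deg=10, azimuth_deg=90)
for name, terrain in [("flat", flat), ("east-facing", east_facing), ("west-facing", west_facing)]:
    print(f"{name:>12}: {shade(terrain, 1.0, low_eastern_sun)[2][2]:.3f}")
```

With the sun low in the east, the east-facing slope comes out brighter than flat ground and the west-facing slope goes dark. That sensitivity to sun angle is why a map rendered for the wrong time of day may not match what the lander’s camera actually sees.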

Another difference: On Mars, the lander comes down on a parachute, following a steep trajectory and using the atmosphere as a brake. But landers can’t parachute on the airless moon, so the landing trajectory must be more horizontal—and that means a bigger map and more data storage. But Horchler says that with a longer trajectory, they’ll have more time to run TRN as they descend, which might offer a more precise landing.
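The link between a shallower trajectory and a bigger map is basic geometry. Treating the descent as a straight line (a deliberate simplification), the ground distance covered equals altitude divided by the tangent of the flight-path angle. The Python sketch below uses hypothetical angles and a hypothetical camera swath purely to show the scaling; the 4,200-meter altitude is borrowed from the Lander Vision System’s activation altitude only as a point of comparison.

```python
import math


def ground_track_m(altitude_m, flight_path_angle_deg):
    """Horizontal distance covered while descending altitude_m along a straight
    line inclined flight_path_angle_deg below the horizon."""
    return altitude_m / math.tan(math.radians(flight_path_angle_deg))


def map_area_km2(altitude_m, flight_path_angle_deg, swath_m):
    """Rough area of the map strip the lander must carry: track length x swath."""
    return ground_track_m(altitude_m, flight_path_angle_deg) * swath_m / 1e6


ALTITUDE_M = 4200.0  # borrowed from the Mars 2020 LVS activation altitude
SWATH_M = 2000.0     # hypothetical width of ground the camera must cover

for angle in (60.0, 15.0):  # hypothetical steep vs. shallow flight-path angles
    track = ground_track_m(ALTITUDE_M, angle)
    area = map_area_km2(ALTITUDE_M, angle, SWATH_M)
    print(f"{angle:4.0f} deg: track {track / 1000:5.1f} km, map ~{area:5.1f} km^2")
```

At 60 degrees the track is about 2.4 kilometers long; at 15 degrees it stretches to nearly 16 kilometers, and the onboard map has to cover that whole strip.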

While Horchler tips his hat to the close relationship his company has had with NASA, allowing each to benefit from the other’s research, he points out another big difference: Astrobotic’s TRN technology is designed for commercial missions to many destinations. (After their first launch, they hope to fly about one per year.) He believes their sensor will be able to estimate position from a single image, without any additional information about altitude, orientation, or velocity. That, Horchler says, makes their interface simpler and potentially more self-contained and robust, and so better adapted to business use.

Similarly, Draper, which has been building spacecraft navigation systems since the Apollo missions, wants to keep things simple. Alan Campbell, the company’s space systems program manager, mentions that while their algorithms are likely similar to NASA’s, on the hardware side they are “working diligently to build a smaller, lighter, more efficient system to serve many different kinds of planetary landing missions.”

After all, that’s still the big idea: landing near all kinds of geologically tricky spots. Horchler says that some customers might want to prospect the moon’s poles for ice. Campbell notes that scientists are keen to explore its lava tubes, tunnels that might shield astronauts from radiation.

And the hope for TRN is that, if your camera and algorithm work on one heavenly body, they will work just about anywhere. “We’re really agnostic to the surface that we’re landing on,” says Campbell, and that includes surfaces very far away. Draper has done work for the OSIRIS-REx sample collection mission to the asteroid Bennu, and Campbell thinks TRN might be useful for NASA’s Dragonfly program to send a rotorcraft to Titan. Horchler points out that their sensor could be adapted for other moons, such as Phobos and Deimos, the satellites of Mars.

“You can’t land on Europa without it,” says Johnson. Europa has an ocean covered by a giant ice cap, and is a promising place to hunt for life—but there’s no detailed imagery of its surface. NASA plans to send the Europa Clipper orbiter to take photos, but a lander would have to be designed and launched before Clipper even arrives. “We don’t have the luxury like we do at Mars of picking the landing site beforehand, mapping it out as much as we possibly can,” Johnson says.

JPL’s Vision Compute Element will perform a second job after the Mars landing: helping the rover drive. Mohan says Mars 2020’s predecessor, Curiosity, was capable of higher speeds but poked along at about 8 meters per hour during autonomous driving because it couldn’t process terrain images while moving. The rover, she says, had to constantly “stop, think, take the next step.” On the new computer these functions run in parallel, which the team thinks will boost speeds for fully autonomous driving to 60 to 80 meters per hour. This too means getting to the juicy spots faster.
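The payoff of parallel processing is easy to put in numbers. Taking a hypothetical 1-kilometer traverse, this Python snippet compares Curiosity’s roughly 8 meters per hour with the 60 to 80 meters per hour the team expects. The figures count only time spent driving autonomously; rovers don’t drive around the clock, so calendar time would be longer.

```python
def drive_hours(distance_m, speed_m_per_h):
    """Hours of autonomous driving needed to cover distance_m at a steady speed."""
    return distance_m / speed_m_per_h


DISTANCE_M = 1000.0  # hypothetical 1 km traverse to a science target

speeds = [
    ("stop-and-go (Curiosity)", 8.0),
    ("parallel, low estimate", 60.0),
    ("parallel, high estimate", 80.0),
]
for label, speed in speeds:
    print(f"{label:>24}: {drive_hours(DISTANCE_M, speed):6.1f} h")
```

That works out to 125 hours of driving at Curiosity’s pace versus roughly 12.5 to 17 hours at the projected speeds.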

But, suggests Johnson, “Wouldn’t it be cool if you didn’t have to have a rover?” What if TRN permitted such pinpoint landings that you could just send an instrument, like a drill, that works where it lands? Or, asks Mohan, how about making life easier for future human crews? Dropping their supplies close together will help them set up their habitats. Instead of scattering payloads over a huge ellipse, she says, “you’re hitting the bull’s-eye every single time.”

And this, TRN developers say, is the promise of being able to park in a tight spot. Once you can safely put people and their machines near dead lakes or polar ice or anything that was once terra incognita, the real adventure begins.

Thanks to its novelty, it’s easy to forget that TRN is being deployed in service of one of humanity’s oldest questions: Are we alone?

It’s also one of our hardest. “If life existed on Mars, it was likely microbial and may not have advanced beyond that,” says Stack Morgan. It’s probably very, very small, and very, very dead. And, for now, scientists have to study it from very, very far away. Mars 2020 is only the first step toward returning samples: the rover will cache rocks to be collected by a future robot, yet unborn. Until then, scientists must rely on photos plus electronic data from instruments mounted on the rover arm that can detect carbon-bearing organic compounds.

So how do you find microbes from 249 million miles away? Scientists will be looking for biosignatures: rock textures and chemical patterns that could have formed only in the presence of life.

Take, for example, a fossil formation called a stromatolite. Stack Morgan picks up a pen. Imagine a lakebed, she says, drawing a bumpy line. If Jezero were lifeless, carbon particles falling through the water column would roll off the lakebed’s peaks and cluster in its troughs. (She heavily dots the low points and places only a few on the high ones.) Once the lake dried up, the resulting rock layers would show uneven ripples of carbon: thinner at the top and thicker at the bottom.

Now imagine the lakebed was covered by a jelly-like mat of microbes. Carbon would have been equally likely to stick anywhere, she continues, dotting her pen all over. This time, the carbon ripples would be consistently thick. “I can explain that with physics,” she says, pointing to her first drawing. Then she aims her pen at the second. “I can only explain that with life.”