This Camera Can See Around Corners

How a superfast, supersensitive camera could shake up the automotive and exploration industries, as well as photography as we know it

![](https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/filer/64/b9/64b9f978-1186-4691-a3a0-0812d5cf14f1/fog_chamber2.jpg)

Self-driving cars, and even cars using lane assist or other supplements, rely heavily on computer vision and LIDAR to read and make sense of what’s around them. They’re already better at it than humans, but there’s another step, coming soon, that could make them a lot safer still: What if those cars could see around corners?

“Saying that your car can not only see what’s in front of it, but can also see what’s behind a corner, and therefore is intrinsically way safer than any human-driven car, could be extremely important,” says Daniele Faccio, a professor of physics at Heriot-Watt University in Edinburgh, Scotland.

Separate but complementary research coming out of the University of Wisconsin, MIT and Heriot-Watt is tackling this problem and making big strides. It’s largely focused on superfast, supersensitive cameras that read the rebounds of scattered laser light and reconstruct them into an image, much the way LIDAR, radar and sonar do.

This technology is useful in applications far beyond autonomous vehicles. Cars weren’t even the primary motivation when Andreas Velten started studying femtosecond (one quadrillionth of a second) lasers at the University of New Mexico, and then their application in imaging at MIT. Velten, now a professor and assistant scientist at the University of Wisconsin, and his lab have developed and patented a camera that can reconstruct a 3D image of an object situated around a corner.

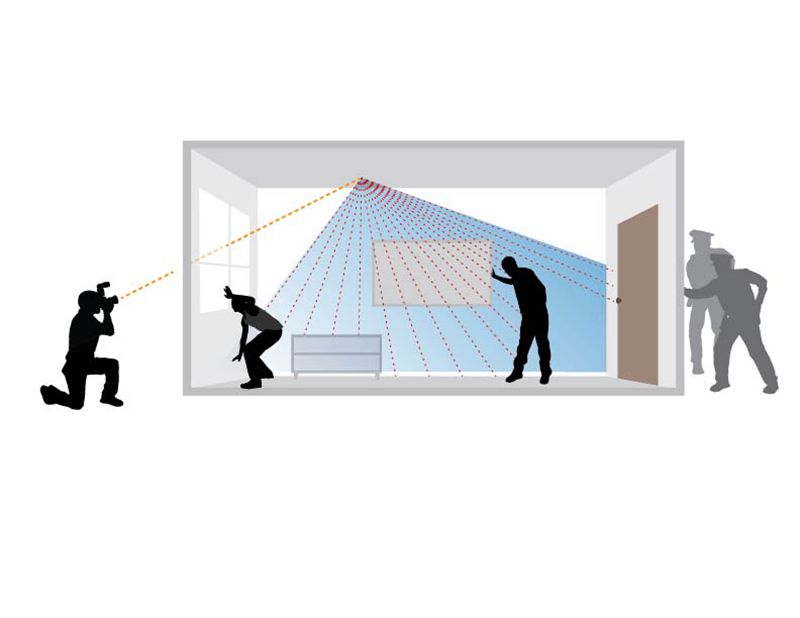

To make sense of the object, to see it at all, requires a camera that can track the passage of light. A laser, situated on or near the camera, fires short bursts of light. Each time those packets hit something—say, a wall on the other side of the corner—the photons that make up the light scatter in every direction. If enough of them bounce in enough different directions, some will make it back to the camera, having bounced at least three times.

“It’s very similar to the data that LIDAR would collect, except that LIDAR would cue up the first bounce that comes from the direct surface and make a 3D image of that. We care about the higher order bounce that comes after that,” says Velten. “Each bounce, the photons split up. Each photon carries a unique bit of information about the scene.”

Because the light bounces off various surfaces at various times, the camera must be equipped to tell the difference. It does so by recording the exact time at which the photon hits a receptor and calculating the paths that photon could have taken. Do this for many photons, and a number of different angles of the laser, and you get a picture.
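To make the timing idea concrete, here is a minimal sketch of one way arrival times could be turned into a position, a simple voting scheme often called backprojection. It is an illustration under simplifying assumptions, not the method used by Velten's lab: the scene is flattened to 2D, the visible wall is the line y = 0, the hidden object is a single point, and the known legs of the trip (laser to wall, wall to camera) are assumed to have been subtracted already. All function names, coordinates and the tolerance are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def path_length(laser_spot, point, sensor_spot):
    """Length of the unknown part of the trip: wall -> point -> wall."""
    return math.dist(laser_spot, point) + math.dist(point, sensor_spot)


def simulate_arrival_time(laser_spot, hidden_point, sensor_spot):
    """Stand-in for a real measurement: time of flight for one path."""
    return path_length(laser_spot, hidden_point, sensor_spot) / C


def backproject(measurements, xs, ys, tolerance_m=0.02):
    """Count, for each grid cell, how many measurements it could explain.

    A cell earns a vote from a measurement when the path through that cell
    matches the measured path length (C * t) to within tolerance_m.
    """
    votes = {}
    for x in xs:
        for y in ys:
            cell = (x, y)
            votes[cell] = sum(
                1
                for laser_spot, sensor_spot, t in measurements
                if abs(path_length(laser_spot, cell, sensor_spot) - C * t)
                <= tolerance_m
            )
    return votes


# Hypothetical scene: one hidden point, one watched wall spot,
# and 20 laser spots spread along the wall.
hidden = (0.4, 0.6)
sensor_spot = (0.0, 0.0)
laser_spots = [(i / 10, 0.0) for i in range(-10, 11) if i != 0]
measurements = [
    (spot, sensor_spot, simulate_arrival_time(spot, hidden, sensor_spot))
    for spot in laser_spots
]

# Search a 5 cm grid on the hidden side of the wall (y > 0).
xs = [i / 20 for i in range(-20, 21)]
ys = [j / 20 for j in range(1, 21)]
votes = backproject(measurements, xs, ys)
best = max(votes, key=votes.get)
print(best, votes[best])
```

Each single measurement only narrows the hidden point down to an ellipse whose foci are the laser spot and the watched spot on the wall. Moving the laser changes the ellipse, and the cell where the ellipses overlap collects the most votes, which is why many photons and many laser angles are needed.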

The technique also requires a sensor called a single-photon avalanche diode, built on a silicon chip. The SPAD, as it’s called, can register tiny amounts of light (single photons) at a trillion frames per second—that’s fast enough to see light move.
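A quick calculation shows why a trillion frames per second is fast enough to watch light move. The sketch below assumes light travelling at its vacuum speed; the function name is made up for illustration.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def light_travel_mm(frames_per_second):
    """Distance light covers during a single frame, in millimeters."""
    frame_time_s = 1.0 / frames_per_second
    return C * frame_time_s * 1000.0


distance_mm = light_travel_mm(1e12)
print(f"{distance_mm:.2f} mm per frame")  # prints "0.30 mm per frame"
```

At that frame rate, light advances only about a third of a millimeter between frames, so a pulse crossing a room shows up as a long sequence of distinct steps rather than a single flash.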

“They work like Geiger counters for photons,” says Velten. “Whenever a photon hits a pixel on the detector, it will send out an impulse and that is registered by the computer. They have to be fast enough so they can count each photon individually.”
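The counting Velten describes can be pictured as building a histogram of arrival times. The sketch below assumes the detector hands the computer one timestamp per detected photon; the timestamps, the 50-picosecond bin width and the function name are invented for illustration and are not measured data.

```python
from collections import Counter


def histogram_arrivals(timestamps_ps, bin_width_ps):
    """Group photon arrival times (in picoseconds) into fixed-width bins."""
    counts = Counter(int(t // bin_width_ps) for t in timestamps_ps)
    return dict(sorted(counts.items()))


# Made-up arrival times: an early cluster and a later, weaker one.
timestamps_ps = [1002, 1010, 1047, 1051, 1055, 1058, 3120, 3127, 3199]
hist = histogram_arrivals(timestamps_ps, bin_width_ps=50)

for bin_index, count in hist.items():
    print(f"{bin_index * 50:>5} ps: {'*' * count}")
```

Clusters in the histogram mark moments when many photons came back together, which is the raw material for working out the paths they could have taken.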

Faccio’s lab is taking a somewhat different approach, using some of the same technology. Where Velten’s latest camera can produce a 3D image at a resolution of about 10 centimeters (in a smaller, cheaper package than previous generations), Faccio has focused on tracking motion. He too uses a SPAD sensor, but keeps the laser stationary and records less data, so he can work faster. He detects movement, but can’t tell much about the shape.

“The ideal thing would be to have both combined together, that would be fantastic. I’m not sure how to do that right now,” says Faccio. Both labs also need to work on using lower-power, eye-safe lasers. “The real objective is, can you see real people at 50 meters away. That’s when the thing starts to become useful.”

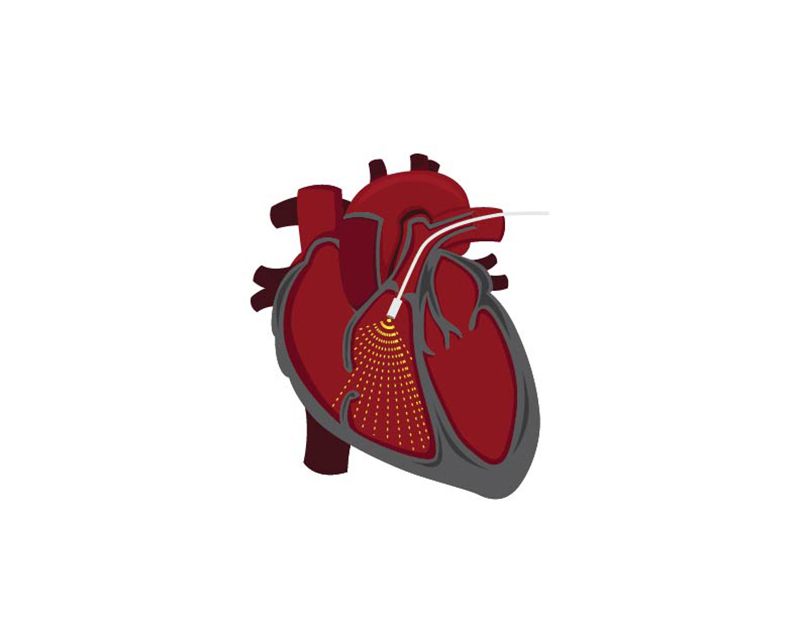

Other potential uses include remote exploration, especially of hazardous areas—for example, to see occupants inside a building during a house fire. There’s military interest too, says Faccio; being able to evaluate the interior of a building before entering has obvious benefits. Velten’s lab is working on applying the technology to see through fog (which scatters photons as well), or through skin (which also scatters), as a non-invasive medical diagnostic tool. He’s even speaking with NASA about imaging caves on the moon.

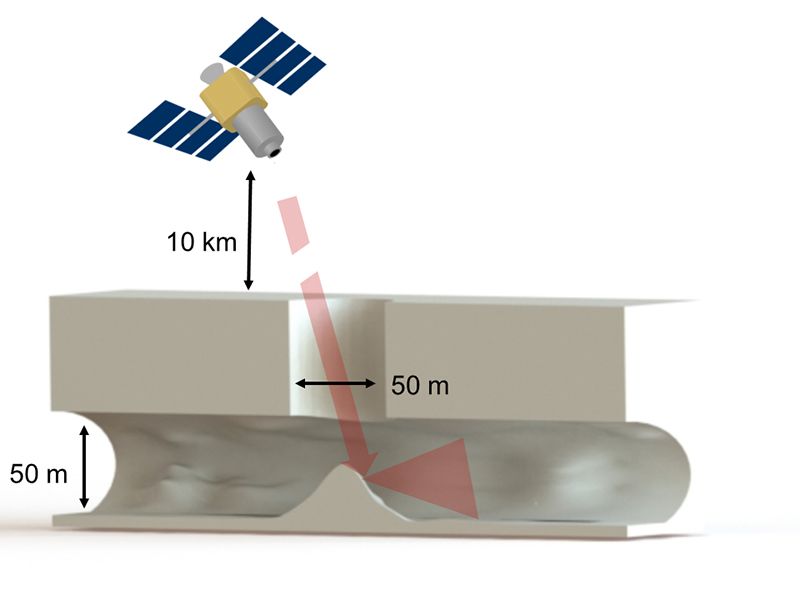

In conjunction with NASA’s Jet Propulsion Lab, the Velten lab is developing a proposal to place a satellite, containing a high-powered version of the device, in orbit around the moon. As it passes certain craters, it’ll be able to tell if they extend laterally, into the interior of the moon; such caves could provide good shelter, one day, for lunar bases, says Velten.