Here’s What the Future of Haptic Technology Looks (Or Rather, Feels) Like

Bringing the sense of touch to virtual reality experiences could impact everything from physical rehabilitation to online shopping

![haptic screengrab](https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/filer/68/2b/682b423b-dcab-4d2f-865a-1116b3ee5010/haptic_screengrab.jpg)

In Steven Spielberg’s 2018 film Ready Player One, based on the 2011 book by Ernest Cline, people enter an immersive world of virtual reality called the OASIS. What was most gripping about the futuristic tech in this sci-fi movie was not the VR goggles, which don’t seem so far off from the headsets currently sold by Oculus, HTC and others. It was the engagement of a sense beyond sight and sound: touch.

Characters wore gloves with feedback that let them feel the imaginary objects in their hands. They could upgrade to full body suits that reproduced the force of a punch to the chest or the stroking of a caress. And yet these capabilities, too, might not be as far off as we imagine.

We rely on touch — or “haptic” — information continuously, in ways we don’t even consciously recognize. Nerves in our skin, joints, muscles and organs tell us how our bodies are positioned, how tightly we’re holding something, what the weather is like, or that a loved one is showing affection through a hug. Around the world, engineers are now working to recreate realistic touch sensations, for video games and more. Engaging touch in human-computer interactions would enhance robotic control, physical rehabilitation, education, navigation, communication and even online shopping.

“In the past, haptics has been good at making things noticeable, with vibration in your phone or the rumble packs in gaming controllers,” says Heather Culbertson, a computer scientist at the University of Southern California. “But now there’s been a shift toward making things that feel more natural, that more mimic the feel of natural materials and natural interactions.”

The future is not just bright, but textured.

* * *

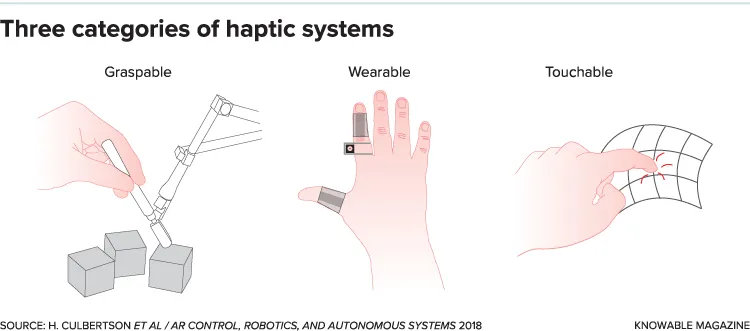

Haptic devices can be grouped into three main types: graspable, wearable and touchable. For graspable, think joysticks. One clear application is in the operation of robots, so that an operator can feel how much resistance the robot is pushing against.

Take surgical robots, which allow doctors to operate from the other side of the world, or to manipulate tools too small or in spaces too tight for their hands. Numerous studies have shown that adding haptic feedback to the control of these robots increases accuracy and reduces tissue damage and operation time. Robots with haptic feedback also allow doctors to train on patients who exist only in virtual reality while getting the feeling of actual cutting and suturing. One of Culbertson’s students is currently developing dental simulators so that a dental student’s first mistaken drilling is not on a real tooth.

Getting a feel for what the robot under your command is doing would also be helpful for defusing bombs or extracting people from collapsed buildings. Or for repairing a satellite without suiting up for a spacewalk. Even Disney has looked into haptic telepresence robots, for safe human-robot interactions. They developed a system that has pneumatic tubes connecting a humanoid’s robotic arms with a mirror set of arms for a human to grasp. The person can manipulate the mirror bot to cause the first bot to hold a balloon, pick up an egg or pat a child on the cheeks.

On a smaller scale, the lab of roboticist Jamie Paik at the Swiss Federal Institute of Technology in Lausanne (EPFL) has developed a portable haptic interface called Foldaway. Devices about the size and shape of a square drink coaster have three hinged arms that pop up, meeting in the middle. (Stefano Mintchev, a postdoc in the lab, calls them “miniaturized origami robots.”) A small plastic handle can be stuck on top where the arms meet, creating a joystick that acts in three dimensions — and the arms push back, to give the user a sense of the objects they’re pushing against. In demos, the team has used the devices to control an aerial drone, squeeze virtual objects and feel the shape of virtual human anatomy.

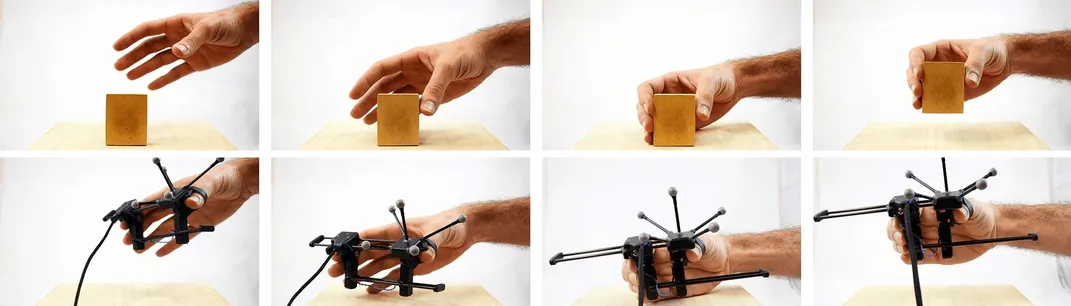

Graspable haptics poses some challenges that might seem insurmountable. For instance, how do you provide a sense of weight when grabbing and lifting weightless digital objects? But by studying neuroscience, engineers have managed to find a few workarounds. Culbertson and colleagues developed a device called Grabity for the gravity problem. It’s a kind of vise that one grips and squeezes to pick up virtual objects. Simply by vibrating in certain ways, it can produce the illusion of weight and inertia.

But “fooling the brain only goes so far,” says Ed Colgate, a mechanical engineer at Northwestern University who works in haptics. It’s sometimes easy to break haptic illusions. To his mind, in the long run engineers will need to recreate the physics of the real world — weight and all — as faithfully as possible. “That’s a really hard problem.”

Graspable devices often take advantage of kinesthetic sensations: feelings of movement, position and force mediated by nerves not just in our skin but also in our muscles, tendons and joints. Wearable devices, on the other hand, usually rely on tactile sensations — pressure, friction or temperature — mediated by nerves in the skin.

A variety of experimental devices are worn on the finger, pressing against the finger pad with different degrees of force as one touches objects in virtual reality. But a recent device provides the same sort of feedback without covering the finger pad. Instead, it’s worn where one might wear a ring and contains motors that stretch the skin underneath. That keeps the fingers free to interact with real-world objects while still sensing “virtual” ones — a useful feature for both games and serious applications.

In one test, a person could hold a real piece of chalk and feel pressure as they “wrote” on a virtual chalkboard by virtue of a haptic illusion: As they simultaneously saw the chalk contact the board and felt their skin stretched, they were fooled into feeling pressure in their fingertips.

More commonly, wearable haptic devices communicate through vibration. Culbertson’s lab, for example, is working on a wristband that guides the wearer by vibrating in the direction they need to turn. And NeoSensory, a company founded by Stanford neuroscientist David Eagleman, is developing a vest with 32 vibratory motors. The vest was showcased in an episode of HBO’s sci-fi series Westworld, where it ostensibly helped characters identify the direction of approaching enemies.
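To make the wristband idea concrete, here is a minimal sketch of how a direction to turn could be mapped onto a ring of vibration motors. It is not a description of the actual wristband from Culbertson’s lab: the eight evenly spaced motors, the function names and the convention that motor 0 points straight ahead are all assumptions made for illustration.

```python
def turn_angle(heading_deg, target_deg):
    """Smallest signed turn from heading to target, in degrees.

    Positive means turn clockwise (right); negative means turn left.
    """
    diff = (target_deg - heading_deg) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


def motor_for_turn(heading_deg, target_deg, n_motors=8):
    """Pick the motor whose position is closest to the required turn.

    Assumes (hypothetically) n_motors spaced evenly around the wrist,
    numbered clockwise, with motor 0 pointing straight ahead.
    """
    angle = turn_angle(heading_deg, target_deg) % 360.0
    sector = 360.0 / n_motors
    return round(angle / sector) % n_motors


# Facing north (0 degrees) with the destination due east (90 degrees):
# the wearer should turn right, so a motor on the right side buzzes.
right_turn_motor = motor_for_turn(0.0, 90.0)
```

A real device would also need to decide how strongly and how often to vibrate, which this sketch leaves out.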

One of the vest’s first real applications will be to translate sound into tactile sensation to make spoken language more intelligible to people with profound or complete hearing loss. Eagleman is also working on translating aspects of the visual world into vibrations for people who are blind. Other efforts involve more abstract information such as market and environmental data — instead of a grid indicating where things are spatially, a complex pattern of vibrations might indicate the prices of a dozen stocks.

Vibrating motors can be bulky, so some labs are developing more comfortable solutions. Paik’s lab at EPFL is working on a soft pneumatic actuator (SPA) skin: a sheet of flexible silicone less than 2 millimeters thick that’s dotted with tiny air pockets. The pockets can be inflated and deflated independently dozens of times per second and thereby act as pixels (or “taxels,” for tactile elements), creating a grid of sensation. They might provide feelings of the kind the suits offer in Ready Player One, or feedback on the positioning of robots or prosthetic limbs. SPA skin is also embedded with sensors made of a new, corrosion-resistant metal alloy that allows the same skin to be used for computer input when the user squeezes it.

An even thinner haptic film — less than half a millimeter thick — is also in the offing, created by Novasentis and made of a new form of polyvinylidene fluoride plastic that balances strength, flexibility and electrical responsiveness. When the film is layered on one side of a sheet of flexible material and an electrical charge is applied, the film contracts and flexes the sheet, applying pressure against the skin. Novasentis is now providing the material to device manufacturers who are putting it into gloves for virtual reality and gaming.

“You can distinguish between water and sand and rock,” says Sri Peruvemba, the company’s vice president of marketing. VR designers could also create more abstract representations, such as sensation-delivered messages about the state of a game. “We can create a whole haptic language with our technology,” Peruvemba says.

Vibrations can produce another kind of haptic illusion: the sensation of pulling. If a device that vibrates back and forth parallel to the skin’s surface moves quickly in one direction and slowly back the other way, many times a second, it feels as if it’s tugging the skin in the first direction.
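The fast-out, slow-back motion described above can be sketched as a simple position waveform. This is only an illustration under assumed numbers: the sample count, the share of each cycle spent on the fast stroke and the function names are hypothetical, and a real actuator would be driven by hardware-specific signals.

```python
def asymmetric_cycle(n_samples=100, fast_fraction=0.2, amplitude=1.0):
    """Return one cycle of actuator positions: quick stroke out, slow return.

    fast_fraction is the (assumed) share of the cycle spent on the fast stroke.
    """
    n_fast = max(1, round(n_samples * fast_fraction))
    n_slow = n_samples - n_fast
    # Fast stroke: ramp from 0 up to full amplitude in a few samples.
    out = [amplitude * (i + 1) / n_fast for i in range(n_fast)]
    # Slow return: ramp back down to 0 over the remaining samples.
    back = [amplitude * (1 - (i + 1) / n_slow) for i in range(n_slow)]
    return out + back


def peak_speeds(positions, start=0.0):
    """Return the largest per-sample step in each direction (out, back)."""
    prev = start
    forward = backward = 0.0
    for p in positions:
        step = p - prev
        forward = max(forward, step)
        backward = max(backward, -step)
        prev = p
    return forward, backward


cycle = asymmetric_cycle()
forward_peak, return_peak = peak_speeds(cycle)
```

With these defaults the actuator ends each cycle where it started, but it moves roughly four times faster on the way out than on the way back. That asymmetry in speed, repeated many times a second, is what the skin reads as a steady tug.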

While most wearables use tactile sensation, they can also use the muscle-joint-tendon input of kinesthetic sensation. Engineers have developed robotic exoskeletons, a kind of scaffolding strapped to the body with sensors and motors, that can help paralyzed people walk, give soldiers super strength, and let people control robots at a distance. A lab at EPFL has developed the FlyJacket, which one wears with arms straight out to the sides, connected by pistons to the waist. It doesn’t look especially fly, but it allows people to control the flight of aerial drones by moving their arms and twisting their torsos. When the drone feels a gust of wind, you do too.

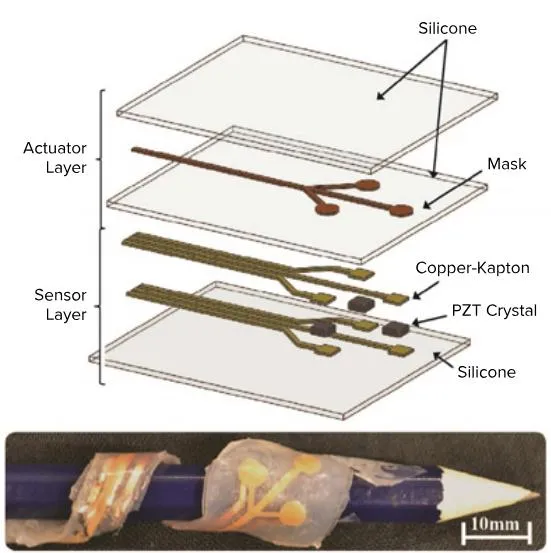

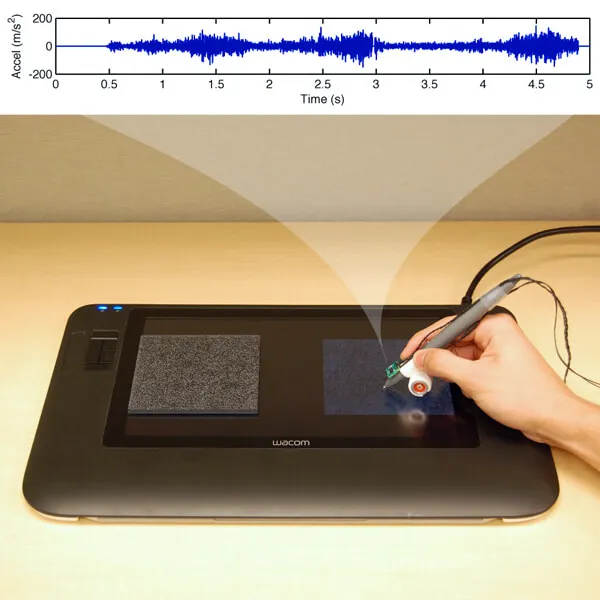

The final category comprises touchable interfaces, such as smartphone screens that give a little bump when you click on an app. Culbertson’s work pushes beyond simple bumps and buzzes. She simulates texture on surfaces using what she calls “data-driven haptics.” Instead of writing complicated algorithms or physics models to generate vibrations that simulate real ones, she records what happens when something is dragged over different fabrics or other materials at different speeds and pressures. Then she has a surface play back the vibrations when a pen is dragged across it. Applications could include online shopping and virtual museums.
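The record-then-play-back idea can be illustrated with a very small lookup: store vibration snippets captured at known speeds and pressures, then pick the closest one for whatever the pen is doing now. This is a deliberately simplified stand-in, not Culbertson’s actual method. The nearest-match rule, the units, the scaling constants and every number in the table are made-up placeholders.

```python
import math

# Hypothetical recordings for one material, keyed by
# (drag speed in mm/s, pressing force in newtons).
# The vibration samples are placeholder values, not measured data.
RECORDINGS = {
    (50.0, 0.5): [0.01, -0.02, 0.015, -0.01],
    (50.0, 2.0): [0.03, -0.05, 0.04, -0.03],
    (200.0, 0.5): [0.04, -0.06, 0.05, -0.04],
    (200.0, 2.0): [0.09, -0.12, 0.10, -0.08],
}

# Ranges covered by the table, used so speed and force count equally.
SPEED_RANGE = 150.0  # 200 - 50 mm/s
FORCE_RANGE = 1.5    # 2.0 - 0.5 N


def nearest_recording(speed, force, recordings=RECORDINGS):
    """Return (key, samples) for the recording closest to the current motion."""
    def distance(key):
        rec_speed, rec_force = key
        return math.hypot((speed - rec_speed) / SPEED_RANGE,
                          (force - rec_force) / FORCE_RANGE)

    key = min(recordings, key=distance)
    return key, recordings[key]


# A fast, firm stroke is matched to the fast, firm recording.
key, samples = nearest_recording(180.0, 1.8)
```

A smoother result would blend between neighboring recordings rather than jumping from one to the next, but the principle is the same: the texture comes from measurements rather than from a physics model.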

Touchable surfaces also allow their own types of illusions. For instance, Culbertson says, playing the sound of a button clicking as one taps a picture of a button makes it feel as if the button is actually clicking. Or making the screen appear to deform under one’s finger can make it feel softer. People construct perception by tying together sight, sound, touch, taste and smell. As Culbertson says, “It’s really easy to fool your brain if you have a mismatch between your senses.”

Realistic haptics for VR may forever be clunky and expensive. Or technology may eventually make Ready Player One look quaint. In either case, as we can see with baby steps such as the pervasive rumbling of video game controllers and endlessly vibrating phones and watches, haptic devices are here to stay, adding a new dimension to our digital lives.