How Is Brain Surgery Like Flying? Put On a Headset to Find Out

A device made for gaming helps brain surgeons plan and execute delicate surgeries with extreme precision


Osamah Choudhry looked up and saw a tumor.

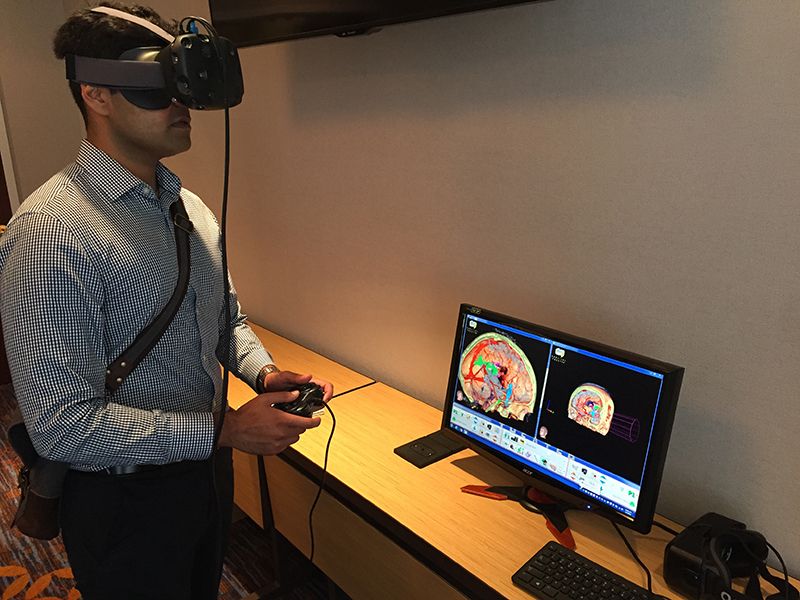

Walking gingerly around a conference room in a hotel near New York University’s Langone Medical Center, the fourth-year neurosurgery resident tilted his head back. It wasn’t ceiling tiles he was examining. Rather, peering into a bulky black headset strapped to his head, he slowly explored a virtual space. A computer screen on a nearby table displayed his view for onlookers: a colorful and strikingly lifelike representation of a human brain.

Taking small steps and using a game controller to zoom, rotate and angle his perspective, Choudhry flew an onscreen avatar around the recreated brain like a character in some bizarre Fantastic Voyage-inspired game. After two or three minutes of quiet study, he finally spoke.

“Wow.” Then, more silence.

Choudhry is no stranger to the impressive tech tools used in surgery. GPS-based navigation pointers, for tracking the location of surgical instruments in relation to the anatomy, and 3D printed models are common aids for neurosurgeons. But the device Choudhry was looking into for the first time on this day, an HTC Vive virtual reality headset, was next-level. It put him inside a real patient’s head.

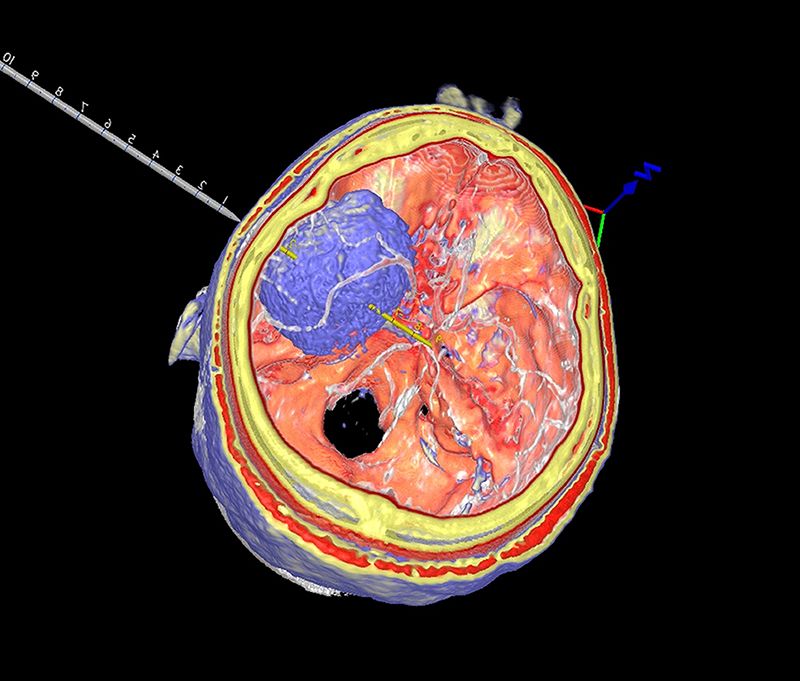

Here, he could not only see all sides of the lurking insular glioma, zooming in to scrutinize fine detail and flying out to see the broader context, but also see how every nerve and blood vessel fed into and through the tumor. Critical motor and speech areas nearby, flagged in blue, signaled no-fly zones to carefully avoid during surgery. The skull itself featured a wide cutout that could be shrunk down to the size of an actual craniotomy, a dime- or quarter-sized opening in the skull through which surgeons conduct procedures.

“This is just beautiful,” Choudhry said. “In medicine, we’ve been stuck for so long in a 2D world, but that’s what we rely on, looking at the slices of CT and MRI scans. This technology makes the MRI look positively B.C., and allows us to look at the anatomy in all three dimensions.”

Computerized tomography (CT) and magnetic resonance imaging (MRI) scans are critical elements for exploring how the interior of the body looks, locating disease and abnormalities, and planning surgeries. Until now, surgeons have had to create their own mental models of patients through careful study of these scans. The Surgical Navigation Advanced Platform, or SNAP, however, gives surgeons a complete three-dimensional reference of their patient.

Developed by the Cleveland, Ohio-based company Surgical Theater, SNAP is designed for the HTC Vive and the Oculus Rift, two gaming headsets that are not yet available to the public. The system was initially conceived as a high-fidelity surgical planning tool, but a handful of hospitals are testing how it could be used during active surgeries.

In essence, SNAP is a super-detailed roadmap that surgeons can reference to stay on track. Surgeons already use live video feeds of procedures underway to have a magnified image to refer to; 3D models on computer screens have also improved visualization for doctors. The headset adds one more layer of immersive detail.

Putting on the headset currently requires a surgeon to step away from the procedure and don new gloves. But, in doing so, the doctor orients to a surgical target, in detail, and can return to the patient with a clear understanding of next steps and any obstacles. Diseased brain tissue can look and feel very similar to healthy tissue. With SNAP, surgeons can accurately measure distances and widths of anatomical structures, making it easier to know exactly what parts to remove and what parts to leave behind. In brain surgery, fractions of millimeters matter.

The tool had an unlikely origin. While in Cleveland working on a new U.S. Air Force flight simulation system, former Israeli Air Force pilots Moty Avisar and Alon Geri were ordering cappuccinos at a coffee shop when Warren Selman, the chair of neurosurgery at Case Western Reserve University, happened to overhear some of their conversation. One thing led to another, and Selman asked if they could do for surgeons what they did for pilots: give them an enemy-eye view of a target.

“He asked us if we could allow surgeons to fly inside the brain, to go inside the tumor to see how to maneuver tools to remove it while preserving blood vessels and nerves,” Avisar said. Geri and Avisar co-founded Surgical Theater to build the new technology, first as interactive 3D modeling on a 2D screen, and now, with a headset.

The SNAP software takes CT and MRI scans and merges them into a complete image of a patient’s brain. Using the handheld controls, surgeons can stand next to or even inside the tumor or aneurysm, make brain tissue more or less opaque and plan out the optimal placement of the craniotomy and subsequent moves. The software can build a virtual model of a vascular system in as little as five minutes; more complicated structures, like tumors, can take up to 20 minutes.

“Surgeons want to be able to stop for a few minutes during surgery and look at where they are in the brain,” Avisar said. “They’re operating through a dime-sized opening, and it’s easy to lose orientation looking through the microscope. What you can’t see is what’s dangerous. This gives them a peek behind the tumor, behind the aneurysm, behind the pathology.”

John Golfinos, chair of neurosurgery at NYU’s Langone Medical Center, said that SNAP’s realistic visual representation of a patient is a major leap forward.

“It’s pretty overwhelming the first time you see it as a neurosurgeon,” he said. “You say to yourself, where has this been my whole life?”

Golfinos’ enthusiasm is understandable when you consider the mental gymnastics required of surgeons to make sense of standard medical imaging. In the 1970s, when CT was developed, images were initially represented like any photograph: the patient’s right side was on the viewer’s left, and vice versa. Scans could be taken in three planes: from bottom to top, left to right, or front to back. But then, somehow, things got mixed up. Left became left, top became bottom. That practice carried through to MRI scans, so for surgeons to read scans as if they were patients standing in front of them, they needed to be able to mentally rearrange the images.

“Now people are finally realizing that if we’re going to simulate the patient, we should simulate them the way the surgeon sees them,” Golfinos said. “I tell my residents that the MRI never lies. It’s just that we don’t know what we’re looking at sometimes.”

At UCLA, SNAP is being used in research studies to plan surgeries and assess a procedure’s effectiveness afterward. Neurosurgery chair Neil Martin has been providing feedback to Surgical Theater to help refine the occasionally disorienting experience of looking into a virtual reality headset. Though surgeons are using SNAP during active surgeries in Europe, in the United States it’s still used as a planning and research tool.

Martin said he hopes that will change, and both he and Avisar think it could take collaboration on surgeries to an international level. Connected through a network, a team of surgeons from around the world could consult on a case remotely, each with a uniquely colored avatar, and walk through a patient’s brain together. Think World of Warcraft, but with more doctors and fewer archmagi.

“We’re not talking telestrations on a computer screen, we’re talking about being inside the skull right next to a tumor that’s 12 feet across. You can mark the areas of the tumor that should be removed, or use a virtual instrument to section away the tumor and leave the blood vessel behind,” Martin said. “But to really understand what it has to offer, you have to put the headset on. Once you do, you’re immediately transported into another world.”

At NYU, Golfinos has used SNAP to explore ways he could approach tricky procedures. In one case, where he thought an endoscopic tool might be the best method, SNAP helped him see that it wasn’t as risky as he thought.

“Being able to see all the way along the trajectory of the endoscope just isn’t possible on a 2D image,” Golfinos said. “But in 3D, you are able to see that you’re not going to be bumping into things along the way or injuring structures nearby. We used it on this case to see if it was possible at all to reach [the tumor] with a rigid endoscope. It was, and we did, and the 3D made the determination on a case that turned out beautifully.”

Patient education is another area where Choudhry thinks the Vive or Oculus Rift could be extremely useful. In an era when many patients do their homework and come armed with questions, Choudhry said it could help facilitate a better connection between patient and surgeon.

“Sometimes I spend minutes explaining the CT or MRI scan, and it doesn’t take long for you to lose them,” Choudhry said. “The 3D is intuitive, and you know exactly what you’re looking at. If the patient is more comfortable with what you’re telling them, then their overall care will be better.”

Martin agrees. While he says about a third of patients just don’t care to see the gritty details, many are eager to know more.

“We can show them what their tumor looks like, and they can be fully informed about what’s going to happen,” Martin said. “Some people are quite interested in the technical detail, but not everyone wants that level of involvement.”

Ultimately, Choudhry thinks that a technology like SNAP is a gateway into even more advanced uses for digitization in the operating room. A transparent headset, more like lab goggles, would be more nimble, he said, and allow for augmented reality, such as a 3D overlay, on the real patient.

But for now, Golfinos says virtual reality is still a valuable tool, and helps improve care across the field, especially in neurosurgery, where intimate knowledge of anatomy is a necessity.

“We have this technology, and we want it to improve life for everybody,” he said. “It improves safety, and for our patients, that’s the best possible thing we can do.”

/https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/accounts/headshot/Michelle-Donahue.jpg)
