Stanford Scientists Create an Algorithm That Is the “Shazam” For Earthquakes

The popular song-identifying app has inspired a technique for identifying microquakes in the hopes of predicting major ones


Stanford seismologist Gregory Beroza was out shopping one day when he heard a song he didn’t recognize. So he pulled out his smartphone and used the popular app Shazam to identify the tune.

Shazam uses an algorithm to find the “acoustic fingerprint” for a song—the part of a song that makes it unique—and compares it to its song database.
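Published descriptions of Shazam-style audio fingerprinting work roughly like this: pick out prominent peaks in a song's spectrogram, combine pairs of peaks into compact hashes, and look those hashes up in a table. The toy Python sketch below illustrates that general idea under simplifying assumptions. It skips the spectrogram step and takes peaks directly as (time frame, frequency bin) pairs. The function names, the `fan_out` parameter and the peak data are all hypothetical, not Shazam's actual code.

```python
from collections import Counter, defaultdict

# Hypothetical peak format: (time_frame, frequency_bin). A real system would
# extract these peaks from a spectrogram of the audio.
Peak = tuple[int, int]


def peak_pair_hashes(peaks: list[Peak], fan_out: int = 3):
    """Yield (hash, anchor_time) for each peak paired with its next few peaks."""
    peaks = sorted(peaks)
    for i, (t1, f1) in enumerate(peaks):
        for t2, f2 in peaks[i + 1 : i + 1 + fan_out]:
            # The hash uses only frequencies and the time gap, not absolute
            # time, so a clip can match anywhere inside a song.
            yield (f1, f2, t2 - t1), t1


def build_index(songs: dict[str, list[Peak]]):
    """Map every hash to the (song, anchor_time) pairs where it occurs."""
    index = defaultdict(list)
    for name, peaks in songs.items():
        for h, t in peak_pair_hashes(peaks):
            index[h].append((name, t))
    return index


def identify(index, clip: list[Peak]):
    """Vote on (song, time offset); a true match piles votes on one offset."""
    votes = Counter()
    for h, t_clip in peak_pair_hashes(clip):
        for name, t_song in index.get(h, []):
            votes[(name, t_song - t_clip)] += 1
    if not votes:
        return None
    (name, _offset), _count = votes.most_common(1)[0]
    return name


songs = {
    "song_a": [(0, 10), (2, 30), (5, 12), (7, 40), (9, 25)],
    "song_b": [(0, 50), (1, 8), (4, 33), (6, 17), (8, 44)],
}
index = build_index(songs)
# A clip cut from song_a starting at time frame 5, re-based to start at 0.
clip = [(0, 12), (2, 40), (4, 25)]
print(identify(index, clip))  # prints song_a
```

A genuine match shows up as many hash hits that agree on a single time offset between the clip and the song, which is why the votes are keyed on (song, offset) rather than on the song alone.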

What if, Beroza wondered, he could use a similar technique to identify earthquakes?

For years, seismologists have been trying to identify “microquakes,” earthquakes so tiny that traditional detection methods miss them. Identifying microquakes can help scientists understand earthquake behavior, and potentially help them predict dangerous seismic events.

Like songs, earthquakes also have fingerprints.

“The earth’s structure changes very slowly, so earthquakes that happen near each other have very similar waveforms, that is, they shake the ground in almost the same way,” Beroza explains.
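A common way to turn “shake the ground in almost the same way” into a number is normalized cross-correlation: a score of 1 means two windows have the same shape (regardless of amplitude), and 0 means no linear resemblance. The sketch below slides a known event's waveform along a continuous record and flags windows above a threshold, the basic idea behind matching new signals against known earthquakes. The 0.8 threshold, the function names and the toy waveforms are illustrative assumptions, not values from the study.

```python
import math


def normalized_cc(a, b):
    """Pearson correlation of two equal-length windows, in [-1, 1]."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    # A flat window has no shape to compare, so treat it as uncorrelated.
    return num / den if den > 0 else 0.0


def scan(template, data, threshold=0.8):
    """Slide the template over continuous data; return (offset, cc) hits."""
    m = len(template)
    hits = []
    for i in range(len(data) - m + 1):
        cc = normalized_cc(template, data[i : i + m])
        if cc >= threshold:
            hits.append((i, round(cc, 3)))
    return hits


template = [0, 1, 3, 1, -2, -1, 0]  # waveform of a known event
# Continuous record: quiet, then a half-amplitude repeat at sample 5, then quiet.
data = [0] * 5 + [0.5 * x for x in template] + [0] * 3
hits = scan(template, data)
print(max(hits, key=lambda h: h[1]))  # prints (5, 1.0)
```

Because the score ignores amplitude, the weaker repeat at sample 5 still scores 1.0, which is what lets a small event be recognized from a larger one recorded nearby. The catch is that every window of the record must be checked against every template.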

Over time, researchers have built databases of earthquake fingerprints to help identify ground movements that might be microquakes. When the ground moves, seismologists can check the database to see whether the motion matches any known earthquake fingerprint. But searching these databases is slow, and seismologists are often trying to sift through enormous amounts of data in real time.

“You can imagine if you were trying to compare all times with all other times 365 days a year, 24 hours a day, it quickly gets to be a very big job,” Beroza says. “In fact, it gets impossibly big.”
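The arithmetic behind Beroza's point is that comparing every stretch of a record with every other stretch grows with the square of the record's length: n windows give n(n - 1)/2 distinct pairs. The sketch below assumes, purely for illustration, one analysis window per second of data; real window lengths and overlaps vary, and the function names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def all_pairs_comparisons(n_windows: int) -> int:
    """Number of distinct pairs among n windows: n * (n - 1) / 2."""
    return n_windows * (n_windows - 1) // 2


def comparisons_for(days: int, windows_per_second: int = 1) -> int:
    # Assumption: one analysis window per second of continuous data.
    return all_pairs_comparisons(days * SECONDS_PER_DAY * windows_per_second)


for days in (1, 7, 365):
    print(f"{days:>3} days: {comparisons_for(days):.2e} comparisons")
```

Under that assumption a single day already means about 3.7 billion comparisons, a week about 183 billion and a year roughly 500 trillion, so seven times more data costs about 49 times more work.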

But a microquake fingerprint reader modeled on Shazam’s algorithm could potentially do the job almost instantly, Beroza figured.

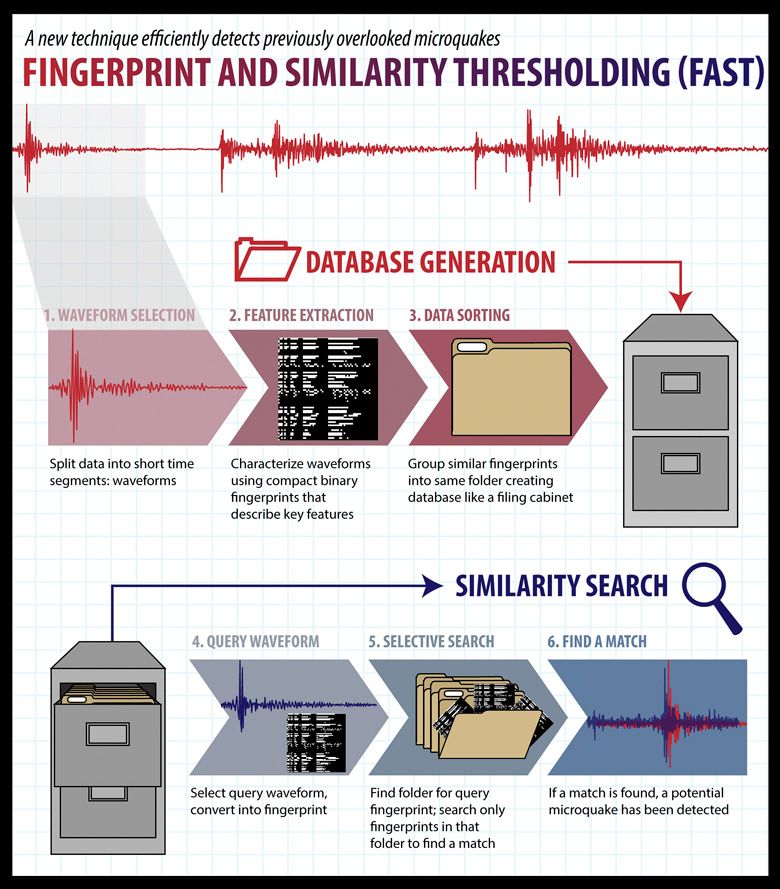

The seismologist recruited three students with expertise in computational geoscience to create an algorithm. Together, the team came up with a program called Fingerprint and Similarity Thresholding (FAST). The acronym is apt: FAST can analyze a week of continuous seismic data in less than two hours, 140 times faster than traditional techniques. Rather than comparing every stretch of data with every other stretch, as traditional methods do, FAST uses fingerprinting to compare only “like with like,” cutting out that time-wasting process.
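The article does not detail how FAST compares “like with like.” The study's published description pairs compact binary fingerprints of short data windows with locality-sensitive hashing, which tends to sort similar fingerprints into the same buckets so that only windows sharing a bucket are ever compared. The Python sketch below is a toy version of that general technique (MinHash with banding), not FAST's code. Its fingerprint, which keeps the positions and signs of each window's largest samples, is a hypothetical stand-in for FAST's own fingerprint, and the band, row and threshold settings are arbitrary.

```python
import random
from collections import defaultdict
from itertools import combinations

PRIME = 2_147_483_647  # 2**31 - 1, modulus for the toy hash functions


def fingerprint(window, k=4):
    """Hypothetical binary fingerprint: positions and signs of the k largest samples."""
    top = sorted(range(len(window)), key=lambda i: abs(window[i]), reverse=True)[:k]
    return frozenset(2 * i + (window[i] > 0) for i in top)


def make_hashers(n, seed=0):
    """n random hash functions of the form (a * x + b) mod PRIME."""
    rng = random.Random(seed)
    return [(rng.randrange(1, PRIME), rng.randrange(PRIME)) for _ in range(n)]


def minhash(fp, hashers):
    """MinHash signature: for each hash function, the minimum value over the set."""
    return tuple(min((a * x + b) % PRIME for x in fp) for a, b in hashers)


def jaccard(s, t):
    return len(s & t) / len(s | t)


def similar_pairs(windows, bands=8, rows=2, threshold=0.5, seed=0):
    """Compare only windows that share at least one LSH bucket."""
    hashers = make_hashers(bands * rows, seed)
    fps = [fingerprint(w) for w in windows]
    buckets = defaultdict(list)
    for idx, fp in enumerate(fps):
        sig = minhash(fp, hashers)
        for b in range(bands):
            # Each band of the signature is a bucket key; similar sets
            # are likely to agree on at least one band.
            buckets[(b, sig[b * rows : (b + 1) * rows])].append(idx)
    candidates = set()
    for members in buckets.values():
        candidates.update(combinations(members, 2))
    # Only candidate pairs get an explicit similarity check.
    return sorted((i, j) for i, j in candidates if jaccard(fps[i], fps[j]) >= threshold)


windows = [
    [0, 5, -3, 0, 1, 0, -4, 0],                 # an event
    [0, 10, -6, 0, 2, 0, -8, 0],                # same shape, twice as strong
    [7, 0, 0, -6, 0, 5, 0, -2],                 # unrelated signal
    [0.1, 5.2, -2.9, 0, 0.8, -0.1, -4.1, 0.2],  # noisy repeat of the event
]
print(similar_pairs(windows))  # prints [(0, 1), (0, 3), (1, 3)]
```

The scaled copy and the noisy copy of the first window land in shared buckets and pass the similarity check, while the unrelated window is never paired with them. With many windows, the number of candidate pairs stays far below the all-pairs count, which is where this kind of speedup comes from.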

The results of the team’s work were recently published in the journal Science Advances.

“The potential use [of FAST] is really everywhere,” Beroza says. “It might be useful for finding earthquakes during aftershock sequences [the smaller earthquakes that often follow a larger one] to understand the process by which one earthquake leads to another earthquake.”

It also might be useful in understanding “induced seismicity,” small earthquakes caused by human activity. A common cause of induced seismicity is wastewater injection, in which contaminated water from oil and gas drilling is disposed of by pumping it into deep underground wells. Wastewater injection is thought to have caused the biggest human-induced earthquake in U.S. history, a magnitude 5.7 earthquake in Oklahoma in 2011. Mining, hydraulic fracturing and the building of very large reservoirs are also known to induce earthquakes. While the number of natural earthquakes has stayed steady over the years, human-induced earthquakes are becoming more frequent, Beroza says. FAST might be especially helpful here, giving researchers a clearer picture of how much human activity is destabilizing the Earth’s crust.

Challenges remain before FAST can be fully deployed. In the team’s research, FAST was used only with a single instrument on a single fault. To be widely useful, it must work across a network of seismic sensors. It also needs to be even faster, Beroza says. The team is currently working on these improvements, and Beroza expects to release more results within the year.
