This New System Can See Through Fog Far Better Than Humans

Developed by MIT researchers, the technology could be a boon for drivers and driverless cars


Five years ago, my husband and I spent the summer in Scotland. When we weren’t working, we would drive through the Highlands on hiking and sightseeing trips. The thing I remember most is the fog. Cinematic, enveloping white clouds, coming seemingly out of nowhere, making the boulder-strewn hills and craggy valleys disappear completely. Oh, and did I mention many of the roads were single-lane? If we’d pulled over, we could have been stuck for hours. So instead we’d inch along, squinting for the yellow glow of oncoming headlights through the fog.

If only we’d had a new imaging system developed by researchers at MIT, designed to see through fog and warn drivers of obstacles.

“We want to see through the fog as if the fog was not there,” says Guy Satat, a PhD candidate at MIT Media Lab who led the research.

The system uses ultrafast measurements and an algorithm to computationally remove fog and create a depth map of the objects in the vicinity. It pairs pulses of laser light with a SPAD (single-photon avalanche diode) camera, which measures how long the reflections take to return. In clear conditions, this time measurement could be used to gauge object distance. But fog causes light to scatter, making these measurements unreliable. So the team developed a model of how, exactly, fog droplets affect light’s return time. With that model, the system can subtract the scattered light and create a clear picture of what’s actually ahead.
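For readers who like to see the idea in code, here is a toy sketch of that subtraction step for a single pixel. It is not the MIT team's algorithm, which the article does not spell out. It swaps in a simple running-median background estimate, and everything in it (the synthetic photon counts, the bin numbers, the function names) is a made-up assumption for illustration.

```python
from math import exp
from statistics import median

def estimate_background(counts, half_window):
    """Running median of the histogram (a stand-in for a real fog model).

    A wide median follows the broad hump of fog-scattered light but
    largely ignores a narrow spike from a solid object.
    """
    n = len(counts)
    background = []
    for i in range(n):
        lo = max(0, i - half_window)
        hi = min(n, i + half_window + 1)
        background.append(median(counts[lo:hi]))
    return background

def find_object_bin(counts, half_window=5):
    """Return the time bin where the counts rise most above the background."""
    background = estimate_background(counts, half_window)
    residual = [c - b for c, b in zip(counts, background)]
    return max(range(len(residual)), key=residual.__getitem__)

# Synthetic photon counts per time bin (hypothetical numbers, not real data).
# Fog: a broad hump of scattered light that peaks early, at bin 8.
counts = [200.0 * (i / 8) * exp(1 - i / 8) for i in range(60)]
# Object: a narrow reflection centered on bin 40.
counts[39] += 25.0
counts[40] += 60.0
counts[41] += 25.0

naive_bin = counts.index(max(counts))   # brightest bin: this is the fog
object_bin = find_object_bin(counts)    # brightest bin after subtraction
print(naive_bin, object_bin)
```

In this made-up histogram, simply picking the brightest time bin lands on the fog, while picking the brightest bin after subtracting the background lands on the object's reflection. A real system would repeat this for every pixel and would need a fog model that holds up under far noisier measurements.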

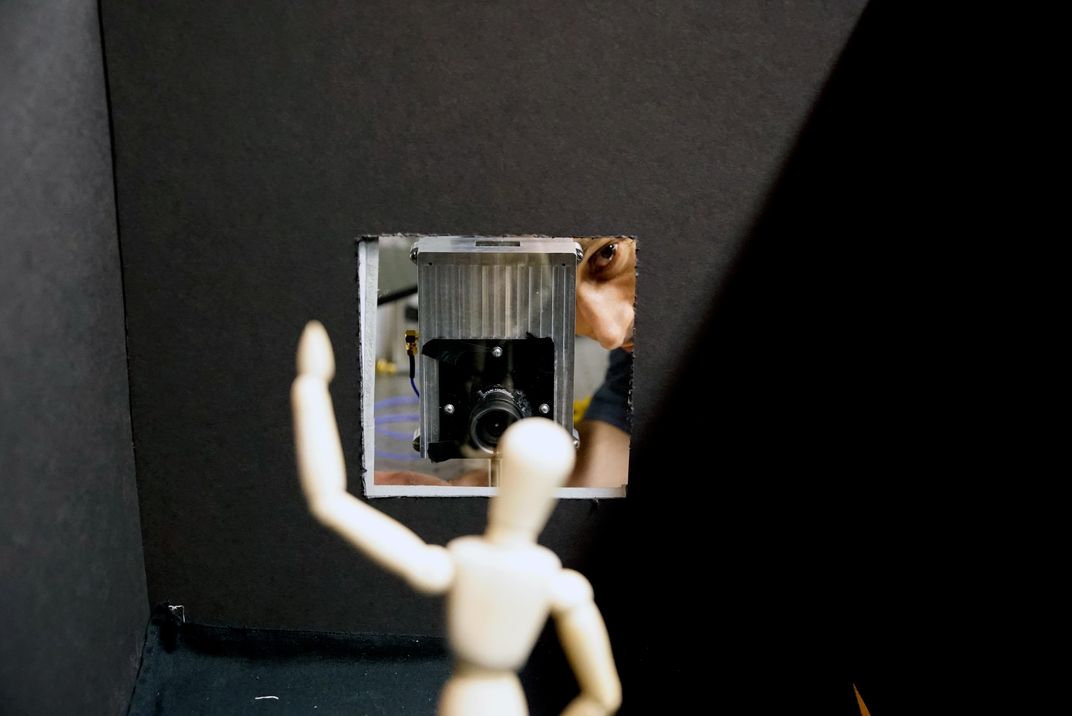

To test the system, the team had to create fake fog. This was easier said than done. They tried the kind of fog machine you can rent for parties, but the result was “way too intense” for their purposes, Satat says. They eventually used a tank of water with a humidifier motor inside to create a fog chamber. They put small objects such as blocks and letter cards inside, to see how far and how well the system could see. The results showed the system performed far better than human vision, in conditions far foggier than cars encounter on roads.

Satat and his colleagues will present a paper about their system at the International Conference on Computational Photography at Carnegie Mellon University in May.

Satat says it’s possible the system will work in other conditions such as rain and snow, but they haven’t tested them yet. They’re currently looking at making the system more photon-efficient, which could allow it to see through denser fog at greater distances. They hope the system will one day have numerous real-world applications.

“The immediately obvious application is self-driving cars, simply because this industry is already using similar hardware,” Satat says.

Most driverless car systems (though notably not Tesla’s) use LIDAR (light detection and ranging) systems, which shoot pulses of infrared light and measure how long it takes to come back. This is similar to the first part of the MIT team’s system, just without the extra step of subtracting fog photons from the scene. LIDAR systems are currently quite expensive, but are expected to go down in price as they develop. Satat and his team hope to “piggyback” on LIDAR development to one day add their fog feature to cars.
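The ranging arithmetic behind that timing measurement is simple enough to sketch. The function name and the 200-nanosecond example below are illustrative assumptions, and the constant is the speed of light in a vacuum, which is very slightly faster than light travels through air.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # in a vacuum; air is marginally slower

def distance_from_round_trip(seconds):
    """Distance to an object given the pulse's round-trip travel time.

    The pulse travels out and back, so the one-way distance is half
    of the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2.0

# A hypothetical pulse that returns after 200 nanoseconds
# left an object roughly 30 meters away.
example_distance_m = distance_from_round_trip(200e-9)
print(f"{example_distance_m:.2f} m")
```

The tiny time scales are why ultrafast sensors matter: each extra nanosecond of round-trip time corresponds to only about 15 centimeters of distance.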

The system could also, obviously, be useful in regular cars, since humans can’t see through fog either. Satat imagines an “augmented driving” system that could remove the fog from your vision.

“You’d see the road in front of you as if there was no fog,” he explains, “or the car would create warning messages that there’s an object in front of you.”

The system could also be useful for planes and trains, which are often stymied by fog. It could also potentially be used to see through turbid water.

Oliver Carsten, a professor at the Institute for Transport Studies at the University of Leeds, says he can imagine the MIT technology extending the abilities of current automatic emergency braking (AEB) systems, which use sensors to detect obstacles ahead and cause the car to brake. The system could make AEB more effective in poor weather.

But, Carsten says, the team “will need to demonstrate its reliability in a variety of environmental conditions, not just in the lab, but also in the real world.”

Satat and his team are part of the Camera Culture Group at the Media Lab, led by Ramesh Raskar, an expert on computational photography. The group has been working on similar imaging problems for years. Recently, they developed a system using lasers and cameras to see objects around corners. They also created a system that uses terahertz radiation to read through the first nine pages of a closed book. The technology has potential for museums and antique book experts, who may have books or other documents too delicate to touch.
