Understanding the Mind of the Coder and How It Shapes the World Around Us

Clive Thompson’s new book takes readers deep into the history and culture of computer programming


A few years ago, as journalist Clive Thompson started working on his new book about the world of coding and coders, he went to see the musical Hamilton. His takeaway? The Founding Fathers were basically modern-day programmers.

“Hamilton, Madison and Jefferson entered ‘The Room Where It Happens,’ and Hamilton [came] out having written 20 lines of code that basically said, ‘Washington is going to be this center of power, and there’s going to be the national bank,’” Thompson told me. “They pushed their software update and completely changed the country.”

Throughout history, Thompson said, “a professional class has had an enormous amount of power. What the people in that class could do was suddenly incredibly important and incredibly political and pivotal. Society needed their skills badly, and just a few people could make decisions that had an enormous impact.”

In 1789, those people were the lawyers or legalists; in 2019, it’s the coders. “They set down the rules to determine how we're going to do things. If they make it easier to do something, we do tons more of it,” he explained. “If we want to understand how today’s world works, we ought to understand something about coders.”

So Thompson has hacked the mindset of these all-(too?)-powerful, very human beings. In his new book Coders: The Making of a New Tribe and the Remaking of the World, he lays out the history of programming, highlighting the pioneering role women played. He traces the industry’s evolution to its current, very white and very male state and examines the challenges that homogeneity presents. Thompson weaves together interviews with all types of programmers, from those at Facebook and Instagram whose code affects hundreds of millions of people each day, to the coders obsessed with protecting data from those very same Big Tech companies. Drawing on his decades of reporting for Smithsonian, WIRED and The New York Times Magazine, he introduces us to the minds behind the lines of code, the people who are shaping and redefining our everyday world.

Coders: The Making of a New Tribe and the Remaking of the World

From acclaimed tech writer Clive Thompson comes a brilliant anthropological reckoning with the most powerful tribe in the world today, computer programmers, in a book that interrogates who they are, how they think, what qualifies as greatness in their world, and what should give us pause.

What personality traits are most common among programmers? What makes a good programmer?

There are the obvious ones, the traits that you might expect—people who are good at coding are usually good at thinking logically and systematically and breaking big problems down into small, solvable steps.

But there are other things that might surprise you. Coding is incredibly, grindingly frustrating. Even the tiniest error—a misplaced bracket—can break things, and the computer often doesn't give you any easy clues as to what's wrong. The people who succeed at coding are the ones who can handle that epic, nonstop, daily frustration. The upside is that when they finally do get things working, the blast of pleasure and joy is unlike anything else they experience in life. They get hooked on it, and it helps them grind through the next hours and days of frustration.

Coding is, in a way, a very artistic enterprise. You're making things, machines, out of words, so it's got craft—anyone who likes building things, or doing crafts, would find the same pleasures in coding. And coders also often seek deep, deep isolation while they work; they have to focus so hard, for so many hours, that they crave tons of “alone time.” Don't dare bother them while they're in the trance or you'll wreck hours of mental palace-building! In that sense, they remind me a lot of poets or novelists, who also prefer to work in long periods of immersive solitude.

But the truth is, coding is also just lots and lots and lots of practice. If you’re willing to put in your 10,000 hours, almost anyone can learn to do it reasonably well. It’s not magic, and they’re not magicians. They just work hard!

Women originally dominated the profession but are now just a sliver of tech companies’ programmers. Why and how did they get pushed out?

For a bunch of reasons. [Early on,] you saw tons of women in coding because [hiring] was based purely on aptitude and merit, on being good at logic and good at reasoning. But beginning in the late 1960s and early ’70s, coding began developing the idea that [a coder] ought to be something more like a grumpy, introverted man. Some of that was just a lot of introverted, grumpy men flocking to coding.

[At the time,] corporations [realized] software wasn’t just a little side thing on their payroll; it had become central to their organizations, shaping how they made decisions and how they gathered data. The companies went, ‘Well, if we’re going to have coders, they need to be able to rise up into management.’ Back then, no one hired women for management.

So, you see a woman who’s potentially really good at coding, but you’re like, ‘I’m sorry, we’re not going to make her a manager 15 years later,’ so they don’t even bother hiring her for coding. Even when you had female coders on staff, when you’re crashing on a big project and everyone’s working late, the women have to go home. There were literally company rules saying that women couldn’t be on the premises after eight o’clock at night, and laws in some states saying that if they were pregnant they had to leave their job.

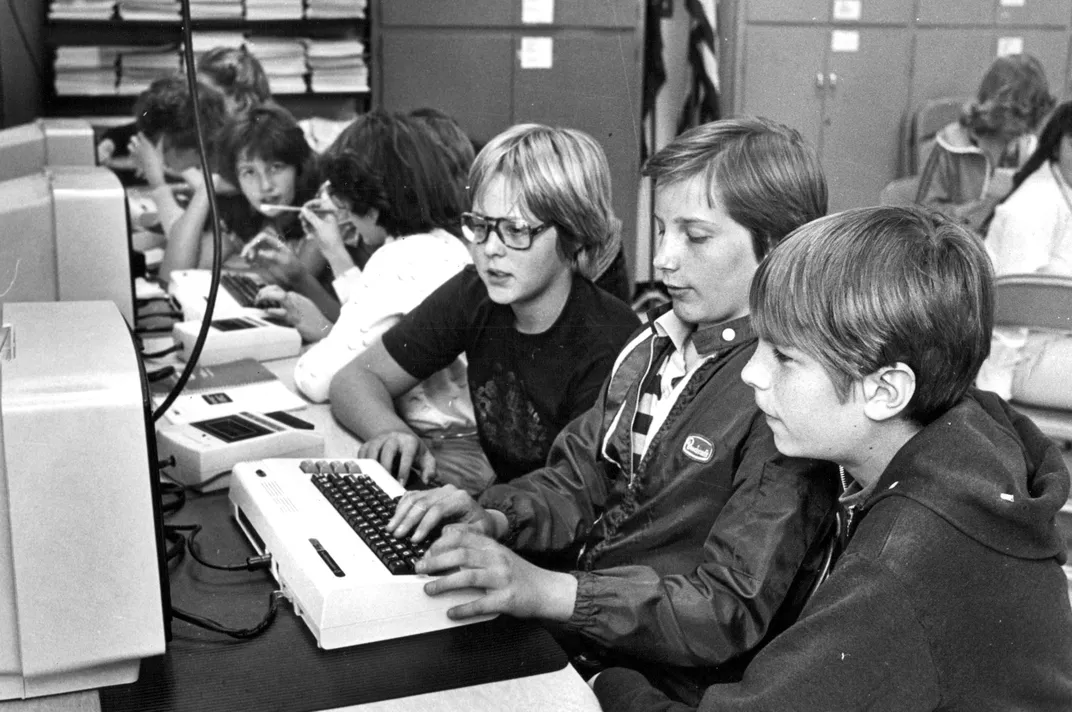

At the same time, at the universities, for the first 20 years of computer science degrees, you saw both men’s and women’s interest going up and up and up. Then in the mid-1980s, something happened. All those kids like me [mostly male] who grew up programming those first computers started arriving on campus. That created a dichotomy in the classroom. In that first year of class, it felt like there was a bunch of cocky boys who already knew how to code and a bunch of neophytes, some men but mostly women, who hadn’t done it before. The professors started teaching to the hacker kids. And so all the women and men who hadn’t coded before started dropping out. The classes started becoming all male, and the programs were essentially going, ‘Y’know, we shouldn’t let anyone into this program if they haven’t been hacking for four years already.’

There are knock-on effects. The industry becomes very, very male, and it starts expecting that it’s normal for women not to be there. That isn’t seen as a problem that needs to be fixed, and it is never challenged at universities and companies. So women would just leave and go do something else with their talents. Really, it’s only been in the last decade that academia and companies have started to reckon with the fact that this culture exists, is calcified, and needs to be dealt with.

It turns out the blanket term “hackers” is a bit of a misnomer.

When the public hears the word “hacker” they usually think of someone who's breaking into computer systems to steal information. If you're hanging out with actual coders, though, they call that a “cracker.”

To coders, the word “hacker” means something much different, and much more complimentary and fun. To them, a “hacker” is anyone who’s curious about how a technical system works, and who wants to poke around in it, figure it out, and maybe get it to do something weird and new. They’re driven by curiosity. When they say “hacking” they’re usually just talking about having done some fun and useful coding: making a little tool to solve a problem, or figuring out how to take an existing piece of code and get it to do something new and useful. When they say something is a good “hack,” they mean any solution that solves a problem, even if it’s done quickly and messily. The point is, hey, a problem was solved!

Most people outside the tech world know about coders and Silicon Valley from pop culture depictions. What do these representations miss? What do they get right?

Traditionally, most characterizations of coders in movies and TV were terrible. Usually they showed them doing stuff that’s essentially impossible, like hacking into the Pentagon or the air-traffic control system with a few keystrokes. And they almost always focused on the dark-side idea of “hacking,” i.e. breaking into remote systems. I understand why; it made for good drama!

But what real programmers do all day long isn’t anywhere near so dramatic. Indeed, a lot of the time they’re not writing code at all: They’re staring at the screen, trying to figure out what’s wrong in their code. Coders on TV and the big screen are constantly typing, their fingers a blur, the code pouring out of them. In the real world, they’re just sitting there thinking most of the time. Hollywood has never been good at capturing the actual work of coding, which is enduring constant frustration as you try to make a busted piece of code finally work.

That said, there have recently been some better depictions of coders! “Silicon Valley” is a comedy that parodies the smug excesses of tech, and it did a fun job of skewering all the gauzy rhetoric from tech founders and venture capitalists about how their tech was going to “make the world a better place.” But it also often captured coder psychology really well. The coders would get weirdly obsessed with optimizing seemingly silly things, and that’s exactly how real-life coders think. And they’d do their best work in long, epic, isolated, into-the-night jags—also very realistic.

Meanwhile, “Mr. Robot” does a great job of showing what real hacking looks like—if there was a piece of code on-screen, it often actually worked! “Halt and Catch Fire” was another good one, showing how a super-talented coder could be amazing at writing code yet terrible at imagining a useful product that regular people would want to use. That’s very realistic.

Why do you think coders didn’t foresee how platforms like Twitter and Facebook could be manipulated by bad actors?

They were naive, for a bunch of reasons. One is that they were mostly younger white guys who’d had little personal experience of the sorts of harassment that women or people of color routinely face online. So for them, creating a tool that makes it easier for people to post things online, to talk to each other online—what could go wrong with that? And to be fair, they were indeed correct: Society has benefited enormously from the communication tools that they created, at Facebook or Twitter or Instagram or Reddit or anywhere else. But because they hadn’t war-gamed the ways that miscreants and trolls could use their systems to harass people, they didn’t, early on, put in many useful safeguards to prevent it, or even to spot it going on.

The financial models for all these services were “make it free, grow quickly, get millions of users, and then sell ads.” That’s a great way to scale, but it also means they put in place algorithms to sift through the posts and find the “hot” ones to promote. That, in turn, meant they wound up mostly boosting the posts that triggered hot-button emotions—things that sparked partisan outrage, or anger, or hilarity. Any system that’s sifting through billions of posts a day looking for the fast-rising ones is, no surprise, going to ignore dull-and-measured posts and settle on extreme ones.

And of course, that makes those systems easy to game. When Russian-affiliated agents wanted to interfere with the 2016 election, they realized all they had to do was post things on Facebook that pretended to be Americans taking extreme and polarizing positions on political issues—and those things would get shared and promoted and upvoted in the algorithms. It worked.

Americans are still coming to terms with the role these Big Tech companies play in our politics. How is that reckoning playing out among their employees?

You’re seeing more ethical reflection among more employees. I’ve heard tales of Facebook employees who are now a bit embarrassed to admit where they work when they’re at parties. That’s new; it wasn’t so long ago that people would boast about it. And you’re also seeing some fascinating labor uprisings. Google and Microsoft have recently had everything from staff petitions to staff walkouts when tech employees decided they didn’t like their companies’ work for the military or [immigration enforcement]. That’s also very new and likely to grow. The tech firms are desperate to hire and retain tech staff; if their employees grow restive, it’s an Achilles’ heel.

Your book is chock-full of great anecdotes and stories. Is there one in particular you think is the most illuminating about the tech industry and coders?

One of my favorites is about the “Like” button on Facebook. The coders and designers who invented it originally hoped it would unlock positivity on the platform—by making it one-button-click easy to show you liked something. It was a classic efficiency ploy, the sort of way coders look at the world. And it worked! It really did unlock a ton of positivity.

But it quickly created weird, unexpected, and sometimes bad side effects. People started obsessing over their Likes: Why isn’t my photo getting more likes? Should I post a different one? Should I say something more extreme or more angry to get attention? A half-decade later, the folks who invented “Like” had much more complex thoughts about what they’d created. Some of them have stepped away from using social media much at all.

It’s a great story, because it shows how powerful even a small piece of code can be—and also how it can have side-effects that even its creators can’t foresee.

A Note to our Readers

Smithsonian magazine participates in affiliate link advertising programs. If you purchase an item through these links, we receive a commission.