Will A.I. Ever Be Smarter Than a Four-Year-Old?

Looking at how children process information may give programmers useful hints about directions for computer learning


Everyone’s heard about the new advances in artificial intelligence, and especially machine learning. You’ve also heard utopian or apocalyptic predictions about what those advances mean. They have been taken to presage either immortality or the end of the world, and a lot has been written about both of those possibilities. But the most sophisticated AIs are still far from being able to solve problems that human four-year-olds solve with ease. In spite of the impressive name, artificial intelligence largely consists of techniques to detect statistical patterns in large data sets. There is much more to human learning.

How can we possibly know so much about the world around us? We learn an enormous amount even when we are small children; four-year-olds already know about plants and animals and machines; desires, beliefs, and emotions; even dinosaurs and spaceships.

Science has extended our knowledge about the world to the unimaginably large and the infinitesimally small, to the edge of the universe and the beginning of time. And we use that knowledge to make new classifications and predictions, imagine new possibilities, and make new things happen in the world. But all that reaches any of us from the world is a stream of photons hitting our retinas and disturbances of air at our eardrums. How do we learn so much about the world when the evidence we have is so limited? And how do we do all this with the few pounds of grey goo that sits behind our eyes?

The best answer so far is that our brains perform computations on the concrete, particular, messy data arriving at our senses, and those computations yield accurate representations of the world. The representations seem to be structured, abstract, and hierarchical; they include the perception of three-dimensional objects, the grammars that underlie language, and mental capacities like “theory of mind,” which lets us understand what other people think. Those representations allow us to make a wide range of new predictions and imagine many new possibilities in a distinctively creative human way.

This kind of learning isn’t the only kind of intelligence, but it’s a particularly important one for human beings. And it’s the kind of intelligence that is a specialty of young children. Although children are dramatically bad at planning and decision making, they are the best learners in the universe. Much of the process of turning data into theories happens before we are five.

Since Aristotle and Plato, there have been two basic ways of addressing the problem of how we know what we know, and they are still the main approaches in machine learning. Aristotle approached the problem from the bottom up: Start with senses—the stream of photons and air vibrations (or the pixels or sound samples of a digital image or recording)—and see if you can extract patterns from them. This approach was carried further by such classic associationists as philosophers David Hume and J. S. Mill and later by behavioral psychologists, like Pavlov and B. F. Skinner. On this view, the abstractness and hierarchical structure of representations is something of an illusion, or at least an epiphenomenon. All the work can be done by association and pattern detection—especially if there are enough data.

Over time, there has been a seesaw between this bottom-up approach to the mystery of learning and Plato’s alternative, top-down one. Maybe we get abstract knowledge from concrete data because we already know a lot, and especially because we already have an array of basic abstract concepts, thanks to evolution. Like scientists, we can use those concepts to formulate hypotheses about the world. Then, instead of trying to extract patterns from the raw data, we can make predictions about what the data should look like if those hypotheses are right. Along with Plato, such “rationalist” philosophers and psychologists as Descartes and Noam Chomsky took this approach.

Here’s an everyday example that illustrates the difference between the two methods: solving the spam plague. The data consist of a long, unsorted list of messages in your inbox. The reality is that some of these messages are genuine and some are spam. How can you use the data to discriminate between them?

Consider the bottom-up technique first. You notice that the spam messages tend to have particular features: a long list of addressees, origins in Nigeria, references to million-dollar prizes, or Viagra. The trouble is that perfectly useful messages might have these features, too. If you looked at enough examples of spam and nonspam emails, you might see not only that spam emails tend to have those features but that the features tend to go together in particular ways (Nigeria plus a million dollars spells trouble). In fact, there might be some subtle higher-level correlations that discriminate the spam messages from the useful ones— a particular pattern of misspellings and IP addresses, say. If you detect those patterns, you can filter out the spam.

The bottom-up machine-learning techniques do just this. The learner gets millions of examples, each with some set of features and each labeled as spam (or some other category) or not. The computer can extract the pattern of features that distinguishes the two, even if it’s quite subtle.
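To make the bottom-up idea concrete, here is a minimal sketch of one such learner, a naive Bayes classifier, written in Python. It is only an illustration: the feature names, the six toy messages, and the function names `train` and `spam_score` are all assumptions invented for this example, and a real filter would see millions of messages rather than six.

```python
import math
from collections import Counter

def train(examples):
    """Count how often each feature appears in spam and in genuine mail.

    examples: list of (set_of_features, is_spam) pairs.
    """
    counts = {True: Counter(), False: Counter()}
    totals = {True: 0, False: 0}
    for features, is_spam in examples:
        totals[is_spam] += 1
        counts[is_spam].update(features)
    vocabulary = set(counts[True]) | set(counts[False])
    return counts, totals, vocabulary

def spam_score(model, features):
    """Log-odds that a message is spam; a positive score leans toward spam."""
    counts, totals, vocabulary = model
    # Prior log-odds of spam versus genuine mail, with add-one smoothing.
    score = math.log((totals[True] + 1) / (totals[False] + 1))
    for feature in vocabulary:
        # Smoothed probability of seeing this feature in each class.
        p_spam = (counts[True][feature] + 1) / (totals[True] + 2)
        p_genuine = (counts[False][feature] + 1) / (totals[False] + 2)
        if feature in features:
            score += math.log(p_spam / p_genuine)
        else:
            score += math.log((1 - p_spam) / (1 - p_genuine))
    return score

# Hypothetical labeled examples: (features present, is it spam?).
examples = [
    ({"nigeria", "million_dollars"}, True),
    ({"viagra", "many_addressees"}, True),
    ({"nigeria", "million_dollars", "many_addressees"}, True),
    ({"meeting"}, False),
    ({"meeting", "many_addressees"}, False),
    ({"nigeria", "meeting"}, False),  # a genuine message that mentions Nigeria
]

model = train(examples)
scam_like = spam_score(model, {"nigeria", "million_dollars"})    # positive
genuine_like = spam_score(model, {"nigeria", "meeting"})         # negative
```

Notice that the learner never represents why spam exists. It only tallies which features go together, so "Nigeria" alone does not condemn a message, but "Nigeria" plus "a million dollars" does.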

How about the top-down approach? I get an email from the editor of the Journal of Clinical Biology. It refers to one of my papers and says that they would like to publish an article by me. No Nigeria, no Viagra, no million dollars; the email doesn’t have any of the features of spam. But by using what I already know, and thinking in an abstract way about the process that produces spam, I can figure out that this email is suspicious:

1. I know that spammers try to extract money from people by appealing to human greed.

2. I also know that legitimate “open access” journals have started covering their costs by charging authors instead of subscribers, and that I don’t practice anything like clinical biology.

Put all that together and I can produce a good new hypothesis about where that email came from. It’s designed to sucker academics into paying to “publish” an article in a fake journal. The email was a result of the same dubious process as the other spam emails, even though it looked nothing like them. I can draw this conclusion from just one example, and I can go on to test my hypothesis further, beyond anything in the email itself, by googling the “editor.”

In computer terms, I started out with a “generative model” that includes abstract concepts like greed and deception and describes the process that produces email scams. That lets me recognize the classic Nigerian email spam, but it also lets me imagine many different kinds of possible spam. When I get the journal email, I can work backward: “This seems like just the kind of mail that would come out of a spam-generating process.”

The new excitement about AI comes because AI researchers have recently produced powerful and effective versions of both of these learning methods. But there is nothing profoundly new about the methods themselves.

Bottom-up Deep Learning

In the 1980s, computer scientists devised an ingenious way to get computers to detect patterns in data: connectionist, or neural-network, architecture (the “neural” part was, and still is, metaphorical). The approach fell into the doldrums in the 1990s but has recently been revived with powerful “deep-learning” methods like those used by Google’s DeepMind.

For example, you can give a deep-learning program a bunch of Internet images labeled “cat,” others labeled “house,” and so on. The program can detect the patterns differentiating the two sets of images and use that information to label new images correctly. Some kinds of machine learning, called unsupervised learning, can detect patterns in data with no labels at all; they simply look for clusters of features—what scientists call a factor analysis. In the deep-learning machines, these processes are repeated at different levels. Some programs can even discover relevant features from the raw data of pixels or sounds; the computer might begin by detecting the patterns in the raw image that correspond to edges and lines and then find the patterns in those patterns that correspond to faces, and so on.

Another bottom-up technique with a long history is reinforcement learning. In the 1950s, B. F. Skinner, building on the work of John Watson, famously programmed pigeons to perform elaborate actions, even guiding air-launched missiles to their targets (a disturbing echo of recent AI), by giving them a particular schedule of rewards and punishments. The essential idea was that actions that were rewarded would be repeated and those that were punished would not, until the desired behavior was achieved. Even in Skinner’s day, this simple process, repeated over and over, could lead to complex behavior. Computers are designed to perform simple operations over and over on a scale that dwarfs human imagination, and computational systems can learn remarkably complex skills in this way.
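The reward-and-repeat loop can be sketched in a few lines of Python. This is a minimal illustration using an epsilon-greedy rule, a standard textbook simplification rather than anything Skinner or a particular AI lab used; the action names, the reward values, and the function `train_by_reward` are all made up for the example.

```python
import random

def train_by_reward(actions, reward_for, trials=2000, explore=0.2, seed=0):
    """Repeat rewarded actions more often, with occasional random exploration."""
    rng = random.Random(seed)
    values = {action: 0.0 for action in actions}  # running average reward
    tries = {action: 0 for action in actions}
    for _ in range(trials):
        if rng.random() < explore:
            action = rng.choice(actions)          # act randomly now and then
        else:
            action = max(actions, key=lambda a: values[a])  # repeat what paid off
        reward = reward_for(action)
        tries[action] += 1
        # Nudge the running average toward the reward just received.
        values[action] += (reward - values[action]) / tries[action]
    return values, tries

# Hypothetical schedule: one action is punished, one ignored, one rewarded.
REWARDS = {"turn_left": -1.0, "flap": 0.0, "peck_target": 1.0}
actions = list(REWARDS)

values, tries = train_by_reward(actions, REWARDS.get)
favorite = max(values, key=values.get)
```

The learner starts out knowing nothing about the actions. It drifts toward whatever has been rewarded, and only the random exploration lets it discover that something better than its current habit exists.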

For example, researchers at Google’s DeepMind used a combination of deep learning and reinforcement learning to teach a computer to play Atari video games. The computer knew nothing about how the games worked. It began by acting randomly and got information only about what the screen looked like at each moment and how well it had scored. Deep learning helped interpret the features on the screen, and reinforcement learning rewarded the system for higher scores. The computer got very good at playing several of the games, but it also completely bombed on others that were just as easy for humans to master.

A similar combination of deep learning and reinforcement learning has enabled the success of DeepMind’s AlphaZero, a program that managed to beat human players at both chess and Go, equipped with only a basic knowledge of the rules of the game and some planning capacities. AlphaZero has another interesting feature: It works by playing hundreds of millions of games against itself. As it does so, it prunes mistakes that led to losses, and it repeats and elaborates on strategies that led to wins. Such systems, and others involving techniques called generative adversarial networks, generate data as well as observe it.

When you have the computational power to apply those techniques to very large data sets or millions of email messages, Instagram images, or voice recordings, you can solve problems that seemed very difficult before. That’s the source of much of the excitement in computer science. But it’s worth remembering that those problems (like recognizing that an image is a cat or a spoken word is Siri) are trivial for a human toddler. One of the most interesting discoveries of computer science is that problems that are easy for us (like identifying cats) are hard for computers, much harder than playing chess or Go. Computers need millions of examples to categorize objects that we can categorize with just a few. These bottom-up systems can generalize to new examples; they can label a new image as a cat fairly accurately overall. But they do so in ways quite different from how humans generalize. Some images that look to us almost identical to a cat image won’t be identified as cats at all. Others that look like a random blur will be.

Top-Down Bayesian Models

The top-down approach played a big role in early AI, and in the 2000s it, too, experienced a revival, in the form of probabilistic, or Bayesian, generative models.

The early attempts to use this approach faced two kinds of problems. First, most patterns of evidence might in principle be explained by many different hypotheses: It’s possible that my journal email message is genuine; it just doesn’t seem likely. Second, where do the concepts that the generative models use come from in the first place? Plato and Chomsky said you were born with them. But how can we explain how we learn the latest concepts of science? Or how even young children understand about dinosaurs and rocket ships?

Bayesian models combine generative models and hypothesis testing with probability theory, and they address these two problems. A Bayesian model lets you calculate just how likely it is that a particular hypothesis is true, given the data. And by making small but systematic tweaks to the models we already have, and testing them against the data, we can sometimes make new concepts and models from old ones. But these advantages are offset by other problems. The Bayesian techniques can help you choose which of two hypotheses is more likely, but there are almost always an enormous number of possible hypotheses, and no system can efficiently consider them all. How do you decide which hypotheses are worth testing in the first place?
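The calculation at the heart of a Bayesian model is short enough to sketch in Python. The example below applies Bayes’ rule to the journal email. The two hypotheses, the prior of 0.5 for each, and the likelihood values are invented purely for illustration; nothing here is measured data.

```python
def posterior(priors, likelihoods):
    """Bayes' rule: P(hypothesis | data) is proportional to
    P(hypothesis) * P(data | hypothesis), rescaled to sum to 1."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    evidence = sum(unnormalized.values())
    return {h: weight / evidence for h, weight in unnormalized.items()}

# Assumed prior beliefs before reading the email (illustrative numbers).
priors = {"genuine_invitation": 0.5, "predatory_journal_scam": 0.5}

# Assumed probability, under each hypothesis, of the evidence observed:
# an unsolicited invitation from a journal in a field I do not work in.
likelihoods = {"genuine_invitation": 0.01, "predatory_journal_scam": 0.30}

beliefs = posterior(priors, likelihoods)
# With these made-up numbers the scam hypothesis ends up at roughly 0.97.
```

The sketch also shows the limitation: the two hypotheses were typed in by hand. Bayes’ rule says how to weigh the candidates you already have, not which candidates are worth considering.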

Brenden Lake at NYU and colleagues have used these kinds of top-down methods to solve another problem that’s easy for people but extremely difficult for computers: recognizing unfamiliar handwritten characters. Look at a character on a Japanese scroll. Even if you’ve never seen it before, you can probably tell if it’s similar to or different from a character on another Japanese scroll. You can probably draw it and even design a fake Japanese character based on the one you see—one that will look quite different from a Korean or Russian character.

The bottom-up method for recognizing handwritten characters is to give the computer thousands of examples of each one and let it pull out the salient features. Instead, Lake et al. gave the program a general model of how you draw a character: A stroke goes either right or left; after you finish one, you start another; and so on. When the program saw a particular character, it could infer the sequence of strokes that were most likely to have led to it, just as I inferred that the spam process led to my dubious email. Then it could judge whether a new character was likely to result from that sequence or from a different one, and it could produce a similar set of strokes itself. The program worked much better than a deep-learning program applied to exactly the same data, and it closely mirrored the performance of human beings.

These two approaches to machine learning have complementary strengths and weaknesses. In the bottom-up approach, the program doesn’t need much knowledge to begin with, but it needs a great deal of data, and it can generalize only in a limited way. In the top-down approach, the program can learn from just a few examples and make much broader and more varied generalizations, but you need to build much more into it to begin with. A number of investigators are currently trying to combine the two approaches, using deep learning to implement Bayesian inference.

The recent success of AI is partly the result of extensions of those old ideas. But it has more to do with the fact that, thanks to the Internet, we have much more data, and thanks to Moore’s Law we have much more computational power to apply to that data. Moreover, an unappreciated fact is that the data we do have has already been sorted and processed by human beings. The cat pictures posted to the Web are canonical cat pictures—pictures that humans have already chosen as “good” pictures. Google Translate works because it takes advantage of millions of human translations and generalizes them to a new piece of text, rather than genuinely understanding the sentences themselves.

But the truly remarkable thing about human children is that they somehow combine the best features of each approach and then go way beyond them. Over the past fifteen years, developmentalists have been exploring the way children learn structure from data. Four-year-olds can learn by taking just one or two examples of data, as a top-down system does, and generalizing to very different concepts. But they can also learn new concepts and models from the data itself, as a bottom-up system does.

For example, in our lab we give young children a “blicket detector”—a new machine to figure out, one they’ve never seen before. It’s a box that lights up and plays music when you put certain objects on it but not others. We give children just one or two examples of how the machine works, showing them that, say, two red blocks make it go, while a green-and-yellow combination doesn’t. Even eighteen-month-olds immediately figure out the general principle that the two objects have to be the same to make it go, and they generalize that principle to new examples: For instance, they will choose two objects that have the same shape to make the machine work. In other experiments, we’ve shown that children can even figure out that some hidden invisible property makes the machine go, or that the machine works on some abstract logical principle.

You can show this in children’s everyday learning, too. Young children rapidly learn abstract intuitive theories of biology, physics, and psychology in much the way adult scientists do, even with relatively little data.

The remarkable machine-learning accomplishments of the recent AI systems, both bottom-up and top-down, take place in a narrow and well-defined space of hypotheses and concepts—a precise set of game pieces and moves, a predetermined set of images. In contrast, children and scientists alike sometimes change their concepts in radical ways, performing paradigm shifts rather than simply tweaking the concepts they already have.

Four-year-olds can immediately recognize cats and understand words, but they can also make creative and surprising new inferences that go far beyond their experience. My own grandson recently explained, for example, that if an adult wants to become a child again, he should try not eating any healthy vegetables, since healthy vegetables make a child grow into an adult. This kind of hypothesis, a plausible one that no grown-up would ever entertain, is characteristic of young children. In fact, my colleagues and I have shown systematically that preschoolers are better at coming up with unlikely hypotheses than older children and adults. We have almost no idea how this kind of creative learning and innovation is possible.

Looking at what children do, though, may give programmers useful hints about directions for computer learning. Two features of children’s learning are especially striking. Children are active learners; they don’t just passively soak up data like AIs do. Just as scientists experiment, children are intrinsically motivated to extract information from the world around them through their endless play and exploration. Recent studies show that this exploration is more systematic than it looks and is well adapted to find persuasive evidence to support hypothesis formation and theory choice. Building curiosity into machines and allowing them to actively interact with the world might be a route to more realistic and wide-ranging learning.

Second, children, unlike existing AIs, are social and cultural learners. Humans don’t learn in isolation but avail themselves of the accumulated wisdom of past generations. Recent studies show that even preschoolers learn through imitation and by listening to the testimony of others. But they don’t simply passively obey their teachers. Instead they take in information from others in a remarkably subtle and sensitive way, making complex inferences about where the information comes from and how trustworthy it is and systematically integrating their own experiences with what they are hearing.

“Artificial intelligence” and “machine learning” sound scary. And in some ways they are. These systems are being used to control weapons, for example, and we really should be scared about that. Still, natural stupidity can wreak far more havoc than artificial intelligence; we humans will need to be much smarter than we have been in the past to properly regulate the new technologies. But there is not much basis for either the apocalyptic or the utopian vision of AIs replacing humans. Until we solve the basic paradox of learning, the best artificial intelligences will be unable to compete with the average human four-year-old.

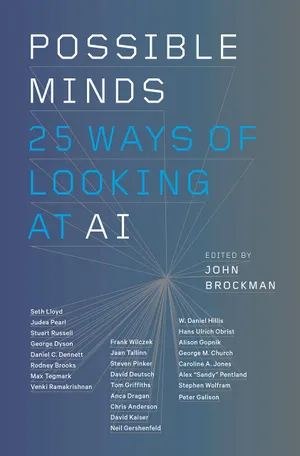

From the forthcoming collection POSSIBLE MINDS: 25 Ways of Looking at AI, edited by John Brockman. Published by arrangement with Penguin Press, a member of Penguin Random House LLC. Copyright © 2019 John Brockman.