Brain Implant Device Allows People With Speech Impairments to Communicate With Their Minds

A new brain-computer interface translates neurological signals into complete sentences

/https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/filer/02/4b/024b4192-6d66-48e7-8997-03763abe3e46/demo_video_still_1b.jpg)

With advances in electronics and neuroscience, researchers have been able to achieve remarkable things with brain implant devices, such as restoring a semblance of sight to the blind. In addition to restoring physical senses, scientists are also seeking innovative ways to facilitate communication for those who have lost the ability to speak. A new “decoder” receiving data from electrodes implanted inside the skull, for example, might help paralyzed patients speak using only their minds.

Researchers from the University of California, San Francisco (UCSF) developed a two-stage method to turn brain signals into computer-synthesized speech. Their results, published this week in scientific journal Nature, provide a possible path toward more fluid communication for people who have lost the ability to speak.

For years, scientists have been trying to harness neural inputs to give a voice back to people whose neurological damage prevents them from talking—like stroke survivors or ALS patients. Until now, many of these brain-computer interfaces have featured a letter-by-letter approach, in which patients move their eyes or facial muscles to spell out their thoughts. (Stephen Hawking famously directed his speech synthesizer through small movements in his cheek.)

But these types of interfaces are sluggish—most max out producing 10 words per minute, a fraction of humans’ average speaking speed of 150 words per minute. For quicker and more fluid communication, UCSF researchers used deep learning algorithms to turn neural signals into spoken sentences.

“The brain is intact in these patients, but the neurons—the pathways that lead to your arms, or your mouth, or your legs—are broken down. These people have high cognitive functioning and abilities, but they cannot accomplish daily tasks like moving about or saying anything,” says Gopala Anumanchipalli, co-lead author of the new study and an associate researcher specializing in neurological surgery at UCSF. “We are essentially bypassing the pathway that's broken down.”

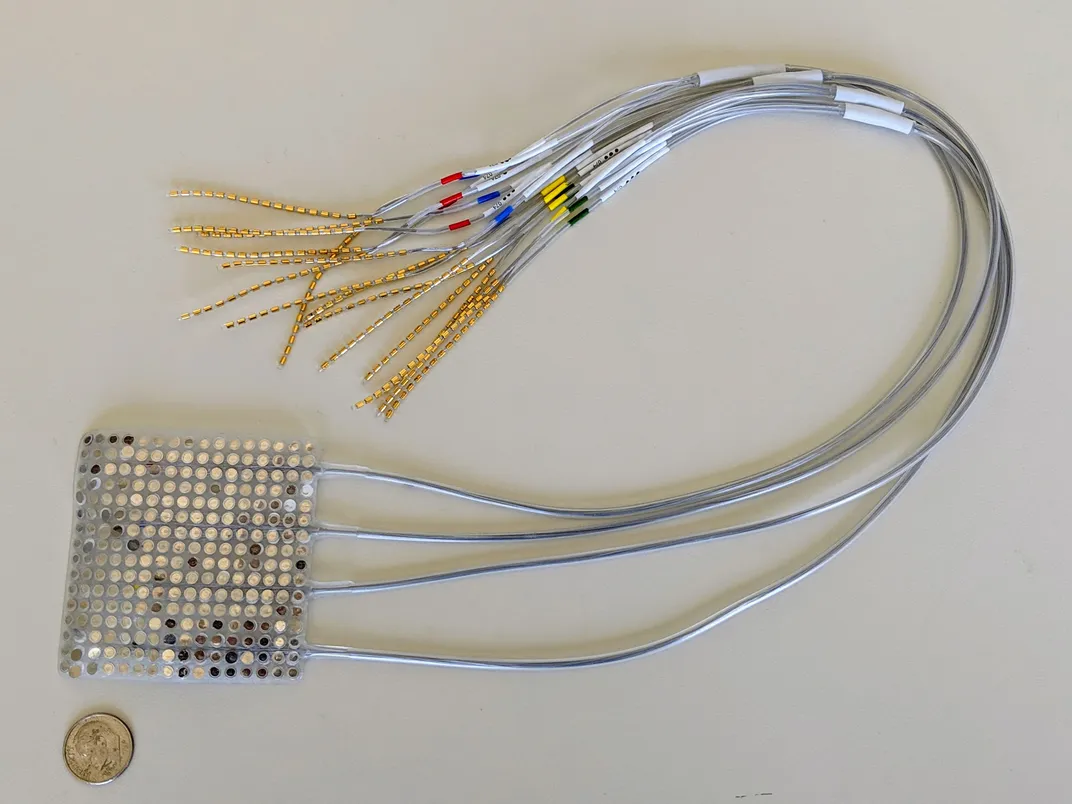

The researchers started with high-resolution brain activity data collected from five volunteers over several years. These participants—all of whom had normal speech function—were already undergoing a monitoring process for epilepsy treatment that involved implanting electrodes directly into their brains. Chang’s team used these electrodes to track activity in speech-related areas of the brain as the patients read off hundreds of sentences.

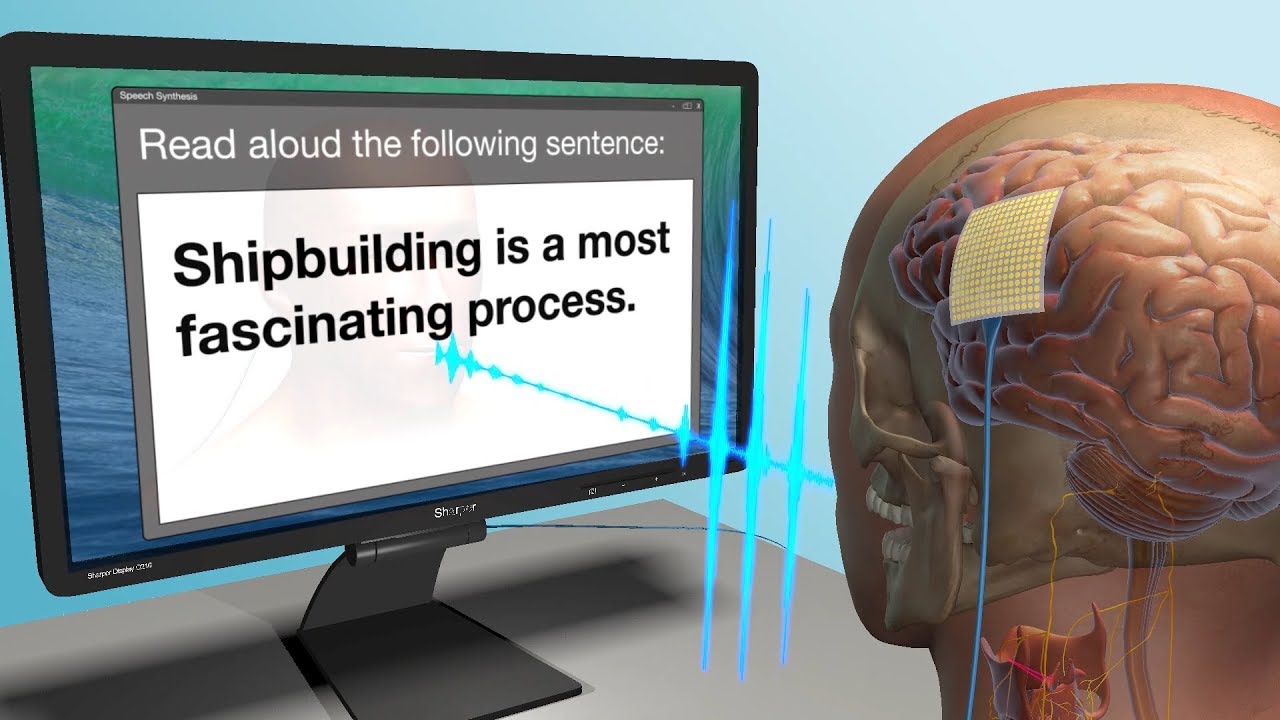

From there, the UCSF team worked out a two-stage process to recreate the spoken sentences. First, they created a decoder to interpret the recorded brain activity patterns as instructions for moving parts of a virtual vocal tract (including the lips, tongue, jaw and larynx). They then developed a synthesizer that used the virtual movements to produce language.

Other research has tried to decode words and sounds directly from neural signals, skipping the middle step of decoding movement. However, a study the UCSF researchers published last year suggests that your brain’s speech center focuses on how to move the vocal tract to produce sounds, rather than what the resulting sounds will be.

“The patterns of brain activity in the speech centers are specifically geared toward coordinating the movements of the vocal tract, and only indirectly linked to the speech sounds themselves,” Edward Chang, a professor of neurological surgery at UCSF and coauthor of the new paper, said in a press briefing this week. “We are explicitly trying to decode movements in order to create sounds, as opposed to directly decoding the sounds.”

Using this method, the researchers successfully reverse-engineered words and sentences from brain activity that roughly matched the audio recordings of participants’ speech. When they asked volunteers on an online crowdsourcing platform to attempt to identify the words and transcribe sentences using a word bank, many of them could understand the simulated speech, though their accuracy was far from perfect. Out of 101 synthesized sentences, about 80 percent were perfectly transcribed by at least one listener using a 25-word bank (that rate dropped to about 60 percent when the word bank size doubled).

It’s hard to say how these results compare to other synthesized speech trials, Marc Slutzky, a Northwestern neurologist who was not involved in the new study, says in an email. Slutzky recently worked on a similar study that produced synthesized words directly from cerebral cortex signals, without decoding vocal tract movement, and he believes the resulting speech quality was similar—though differences in performance metrics make it hard to compare directly.

One exciting aspect of the UCSF study, however, is that the decoder can generalize some results across participants, Slutzky says. A major challenge for this type of research is that training the decoder algorithms usually requires participants to speak, but the technology is intended for patients who can no longer talk. Being able to generalize some of the algorithm’s training could allow further work with paralyzed patients.

To address this challenge, the researchers also tested the device with a participant who silently mimed the sentences instead of speaking them out loud. Though the resulting sentences weren’t as accurate, the authors say the fact that synthesis was possible even without vocalized speech has exciting implications.

“It was really remarkable to find that we could still generate an audio signal from an act that did not generate audio at all,” Josh Chartier, a co-lead author on the study and bioengineering graduate student at UCSF, said in the press briefing.

Another goal for future research is to pursue real-time demonstrations of the decoder, Anumanchipalli says. The current study was meant as a proof of concept—the decoder was developed separately from the data collection process, and the team didn’t test the real-time speed of translating brain activity to synthesized speech, although this would be the eventual goal of a clinical device.

That real-time synthesis is something that needs improvement for such a device to be useful in the future, says Jaimie Henderson, a Stanford neurosurgeon who was not involved in the study. Still, he says the authors’ two-stage method is an exciting new approach, and the use of deep learning technology may provide new insights into how speech really works.

“To me, just the idea of beginning to investigate the underlying basis of how speech is produced in people is very exciting,” Henderson says. “[This study] begins to explore one of our most human capabilities at a fundamental level.”

/https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/accounts/headshot/Maddie_3.jpg)

/https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/accounts/headshot/Maddie_3.jpg)