Brains Make Decisions the Way Alan Turing Cracked Codes

A mathematical tool developed during World War II operates in a similar way to brains weighing the reliability of information

![](https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/filer/ac/d0/acd0ee64-94b9-42cf-85c4-034a6e05f64a/42-17281025.jpg)

Despite the events depicted in The Imitation Game, Alan Turing did not invent the machine that cracked Germany’s codes during World War II—Poland did. But the brilliant mathematician did invent something never mentioned in the film: a mathematical tool for judging the reliability of information. His tool sped up the work of deciphering encoded messages using improved versions of the Polish machines.

Now researchers studying rhesus monkeys have found that the brain also uses this mathematical tool, not for decoding messages, but for piecing together unreliable evidence to make simple decisions. For Columbia University neuroscientist Michael Shadlen and his team, the finding supports a broader idea: that all the decisions we make, even seemingly irrational ones, can be broken down into rational statistical operations. “We think the brain is fundamentally rational,” says Shadlen.

Invented in 1918, the German Enigma machine created a substitution cipher by swapping the original letters in a message for new ones, producing what seemed like pure gibberish. To make the cipher more complicated, the device had rotating disks inside that swiveled each time a key was pressed, changing the encoding with each keystroke. The process was so complex that even with an Enigma machine in hand, the Germans could decipher a message only by knowing the initial settings of those encryption dials.

Turing created an algorithm that cut down the number of possible settings the British decryption machines, called bombes, had to test each day. Working at the secret Bletchley Park facility in the U.K., Turing realized that it was possible to figure out whether two messages had come from machines whose rotors started in the same positions, a key piece of information for working out what those positions were. Line up two encoded messages, one on top of the other, and the chance that the two letters in any given column match is slightly greater if both messages came from machines with the same initial settings. This is because in German, as in English, certain letters are more common than others, so two plaintexts match letter for letter more often than random text would. When two messages are enciphered with the same settings, every such plaintext match becomes a ciphertext match, so the pattern survives encryption.
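The comparison described above amounts to counting matching columns. Here is a minimal Python sketch, assuming both ciphertexts are plain uppercase strings already aligned at their first letter; the function name `count_coincidences` and the sample fragments are hypothetical, invented for illustration.

```python
def count_coincidences(msg_a: str, msg_b: str) -> tuple[int, int]:
    """Count columns where two aligned ciphertexts show the same letter.

    Returns (matches, columns_compared). Only the overlapping part is
    compared; any extra letters in the longer message are ignored.
    """
    matches = sum(1 for a, b in zip(msg_a, msg_b) if a == b)
    return matches, min(len(msg_a), len(msg_b))


# Hypothetical ciphertext fragments, made up for this example.
top = "XKQPLMZTRBWYEA"
bottom = "XJRWLNYURCVHFO"
matches, columns = count_coincidences(top, bottom)

# If the letters behaved like uniformly random text, the expected match
# rate would be about 1/26; a noticeably higher rate over many columns
# hints that the two messages share settings.
print(f"{matches} matches in {columns} columns "
      f"(rate {matches / columns:.2f} vs. about {1 / 26:.2f} at random)")
```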

Turing’s algorithm essentially added up the weight of evidence from each clue: how much more likely a given match or mismatch was if the two messages shared rotor settings than if they did not. It also indicated when the cumulative odds were good enough to either accept or reject the hypothesis that the two messages being compared came from machines with the same rotor states. This statistical tool, called the sequential probability ratio test, proved to be the optimal solution to the problem. It saved time by allowing the Bletchley codebreakers to decide whether two messages were useful while looking, on average, at the fewest letters possible. Turing wasn’t the only mathematician working in secret to come up with this idea. Abraham Wald at Columbia University used it in 1943 to figure out how many bombs the U.S. Navy needed to blow up to be reasonably certain that a batch of munitions wasn’t defective before shipping it out.
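A sequential probability ratio test of the kind Wald described can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reconstruction of the Bletchley procedure: the function name is hypothetical; the coincidence rates (an assumed 1/17 for messages that share settings versus 1/26 for random letters) and the error rates `alpha` and `beta` are illustrative choices; and the stopping thresholds use Wald's standard approximations, log((1 - beta)/alpha) and log(beta/(1 - alpha)).

```python
import math


def sprt_same_settings(msg_a, msg_b, p_same=1 / 17, p_diff=1 / 26,
                       alpha=0.01, beta=0.01):
    """Sequential probability ratio test on letter coincidences.

    H1: the messages share rotor settings (match probability p_same, assumed).
    H0: they do not (match probability p_diff, as for random letters).
    alpha, beta: tolerated false-accept and false-reject rates (assumed).
    Returns ("same" | "different" | "undecided", letters_examined).
    """
    upper = math.log((1 - beta) / alpha)  # accept H1 at or above this
    lower = math.log(beta / (1 - alpha))  # accept H0 at or below this
    # Log-likelihood ratio contributed by one matching / non-matching column.
    match_weight = math.log(p_same / p_diff)
    miss_weight = math.log((1 - p_same) / (1 - p_diff))

    total = 0.0
    for seen, (a, b) in enumerate(zip(msg_a, msg_b), start=1):
        total += match_weight if a == b else miss_weight
        if total >= upper:
            return "same", seen
        if total <= lower:
            return "different", seen
    # Ran out of letters before either threshold was crossed.
    return "undecided", min(len(msg_a), len(msg_b))
```

Each match adds a fixed amount of evidence and each mismatch subtracts a little; the test stops as soon as the running total crosses either threshold, so clear-cut pairs are settled after relatively few letters.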

Now Shadlen has found that humans and other animals might use a similar strategy to make sense of uncertain information. Dealing with uncertainty is important, because few decisions are based on perfectly reliable evidence. Imagine driving down a winding street at night in the rain. You must choose whether to turn the wheel left or right. But how much can you trust the faint tail lights of a car an unknown distance ahead, the dark tree line with its confusing shape or the barely visible lane markers? How do you put this information together to stay on the road?

Monkeys in Shadlen’s lab faced a similarly difficult decision. They saw two dots displayed on a computer monitor and tried to win a treat by picking the correct one. Shapes that flashed on the screen one after another hinted at the answer. When a Pac-Man symbol appeared, for instance, the left dot was probably, but not certainly, the correct answer. By contrast, a pentagon favored the right dot. The game ended when a monkey decided it had seen enough shapes to hazard a guess by turning its eyes toward one of the dots.

There are many strategies that could have been used to pick the correct dot. A monkey could pay attention to only the best clues and ignore the others. Or a choice could simply be made after a certain amount of time, regardless of how certain a monkey was about the evidence it had seen up to that point.

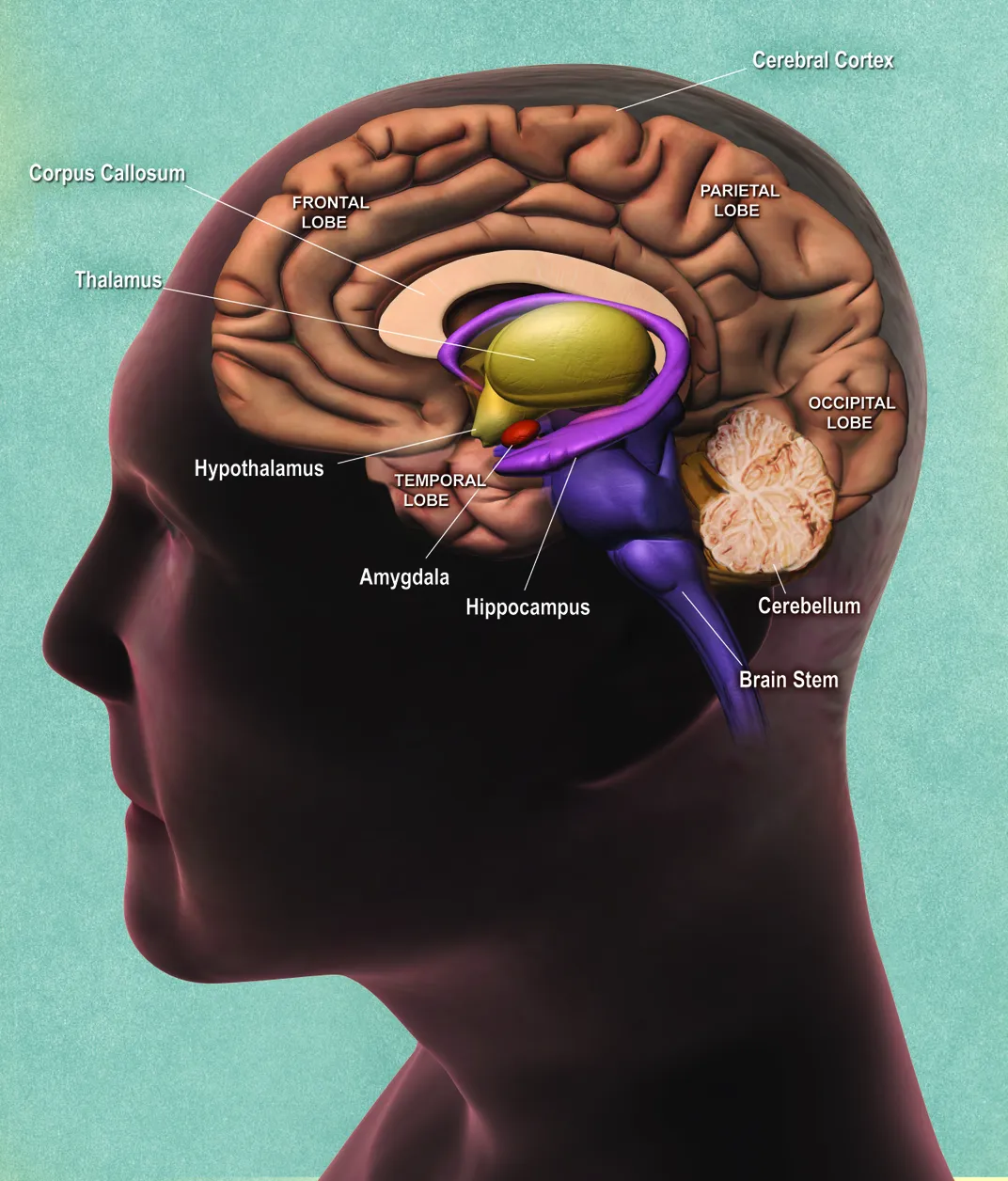

What actually happened was an accumulation of information in the brain, as the animal assessed the reliability of each shape and added it to a running total. Shadlen monitored this buildup by painlessly inserting electrodes into the monkeys’ brains. High-probability clues triggered big leaps in brain activity, while weaker clues yielded smaller leaps. Decisions seemed to be made when activity in favor of either left or right crossed a certain threshold, much like the stopping rule in Turing’s algorithm.
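The buildup described above can be mimicked with a running sum that stops at a bound, the same shape of rule as in Turing's test. Here is a minimal Python sketch, assuming each shape carries a fixed weight (positive favors the left dot, negative the right). The weights, the threshold, and the circle and triangle shapes are hypothetical values chosen for illustration, not numbers from Shadlen's study.

```python
# Hypothetical evidence weights per shape: positive favors the left dot,
# negative favors the right dot. Larger magnitude means a more reliable clue.
SHAPE_WEIGHTS = {
    "pacman": 0.9,     # strong hint for left (assumed value)
    "pentagon": -0.9,  # strong hint for right (assumed value)
    "circle": 0.3,     # weak hint for left (hypothetical shape)
    "triangle": -0.3,  # weak hint for right (hypothetical shape)
}


def decide(shapes, threshold=1.5):
    """Add each shape's weight to a running total and stop at a bound.

    Returns ("left" | "right", shapes_seen), or (None, shapes_seen) if
    the sequence runs out before either bound is reached.
    """
    total = 0.0
    for seen, shape in enumerate(shapes, start=1):
        total += SHAPE_WEIGHTS[shape]
        if total >= threshold:
            return "left", seen
        if total <= -threshold:
            return "right", seen
    return None, len(shapes)


print(decide(["circle", "pacman", "triangle", "pacman"]))  # prints ('left', 4)
```

Strong clues move the total a long way and weak ones only a little, so a run of reliable shapes ends the trial quickly while a mixed sequence takes longer.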

“We found that the brain reaches a decision in a way that would pass muster with a statistician,” says Shadlen, whose team will publish the results in an upcoming issue of the journal Neuron.

Jan Drugowitsch, a neuroscientist at the Ecole Normale Supérieure in Paris, agrees. “This makes a very strong case that the brain really does try to follow the strategy outlined here,” he says. But can more complicated choices, such as where to go to college or whom to marry, be boiled down to simple statistical strategies?

“We don’t know that the challenges faced by the brain in solving big issues are exactly the same as the challenges in simpler decisions,” says Joshua Gold, a neuroscientist at the University of Pennsylvania School of Medicine. “Right now it’s pure conjecture that the mechanisms we study in the lab bear on higher-level decisions.”