How Your Brain Recognizes All Those Faces

Neurons home in on one section at a time, researchers report

![](https://tf-cmsv2-smithsonianmag-media.s3.amazonaws.com/filer/1a/3d/1a3db519-e03a-4852-9c5d-8679e7c2f104/original_and_pixelism.png)

Each time you scroll through Facebook, you’re exposed to dozens of faces—some familiar, some not. Yet with barely a glance, your brain assesses the features on those faces and fits them to the corresponding individual, often before you even have time to read who’s tagged or who posted the album. Research shows that many people recognize faces even if they forget other key details about a person, like their name or their job.

That makes sense: As highly social animals, humans need to be able to quickly and easily identify each other by sight. But how exactly does this remarkable process work in the brain?

That was the question vexing Le Chang, a neuroscientist at the California Institute of Technology, in 2014. In prior research, his lab director had already identified six areas in the temporal lobe of the primate brain that process and recognize faces. These areas, called "face patches," contain neurons that appear to be much more active when a person or monkey is looking at a face than when looking at other objects.

"But I realized there was a big question missing," Chang says: how the patches actually recognize faces. "People still [didn't] know the exact code of faces for these neurons."

In search of the method the brain uses to analyze and recognize faces, Chang decided to break the face down mathematically. He created nearly 2,000 artificial human faces and described each one using 50 characteristics that make faces different, from skin color to the amount of space between the eyes. Then he implanted electrodes into two rhesus monkeys to record how the neurons in the face patches of their brains fired when the animals were shown the artificial faces.

By showing the monkeys these faces, Chang was able to map which neurons fired in relation to which features were on each face, he reports in a study published this month in the journal Cell.

It turned out that each neuron in the face patches responded in proportion to only one feature, or "dimension," of what makes faces different. This means that, as far as your neurons are concerned, a face is a sum of separate parts rather than a single structure. Chang notes that he was able to create faces that appeared extremely different but produced the same patterns of neural firing because they shared key features.
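To make the idea concrete, here is a minimal Python sketch of a single toy neuron, assuming its firing rate rises linearly with a face's position along one preferred direction (its "dimension") in the 50-characteristic feature space. The function name `axis_neuron_rate`, the baseline and gain values, and the random faces are hypothetical illustrations, not the study's data or fitted model. The sketch shows how two faces can be far apart in feature space yet drive the neuron identically, as long as they differ only in directions the neuron ignores.

```python
import numpy as np

N_DIMS = 50  # one entry per face characteristic, as described in the article

def axis_neuron_rate(face, axis, baseline=10.0, gain=5.0):
    """Toy firing rate that grows linearly with the face's projection onto one preferred axis."""
    return baseline + gain * float(np.dot(face, axis))

rng = np.random.default_rng(seed=0)

# Hypothetical preferred axis for one neuron, scaled to unit length.
axis = rng.standard_normal(N_DIMS)
axis /= np.linalg.norm(axis)

face_a = rng.standard_normal(N_DIMS)

# Build a second face that differs a lot, but only in directions the neuron ignores:
# remove the random change's component along the preferred axis.
change = rng.standard_normal(N_DIMS)
change -= np.dot(change, axis) * axis
face_b = face_a + 3.0 * change

rate_a = axis_neuron_rate(face_a, axis)
rate_b = axis_neuron_rate(face_b, axis)

print(f"distance between faces: {np.linalg.norm(face_b - face_a):.2f}")
print(f"rates: {rate_a:.4f} vs {rate_b:.4f}")  # equal up to floating-point rounding
```

Because the change between the two faces was made perpendicular to the neuron's preferred axis, the two firing rates match up to rounding error even though the faces differ substantially.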

This finding contrasts with what some neuroscientists had thought about how humans recognize faces. There were two opposing theories: “exemplar coding” and “norm coding.” The exemplar coding theory proposed that the brain recognizes faces by comparing their features to extreme or distinctive examples, while the norm coding theory proposed that the brain analyzes how a face’s features differ from an “average face.”
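The difference between the two older theories can be sketched in a few lines of Python. This is a toy illustration under assumed definitions: the norm code is taken to be a face's offset from the average face, and the exemplar code is taken to be a Gaussian similarity score against each stored example face. The function names, the `width` parameter, and the two-feature faces are hypothetical, not drawn from the study.

```python
import numpy as np

def norm_code(face, average_face):
    """Toy norm coding: describe a face by how far, and in which direction, it departs from the average."""
    return face - average_face

def exemplar_code(face, exemplars, width=1.0):
    """Toy exemplar coding: one similarity score per stored example face (1.0 means identical)."""
    distances = np.linalg.norm(exemplars - face, axis=1)
    return np.exp(-distances**2 / (2 * width**2))

# Hypothetical faces described by just two features, e.g. eye spacing and face width.
exemplars = np.array([[2.0, 0.0], [0.0, 2.0], [-2.0, -2.0]])
average_face = exemplars.mean(axis=0)
face = np.array([1.5, 0.5])

print(norm_code(face, average_face))   # offset from the average face
print(exemplar_code(face, exemplars))  # similarity to each exemplar
```

Under this toy norm code, the average face itself maps to all zeros; under the toy exemplar code, a face identical to a stored example scores 1.0 for that example.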

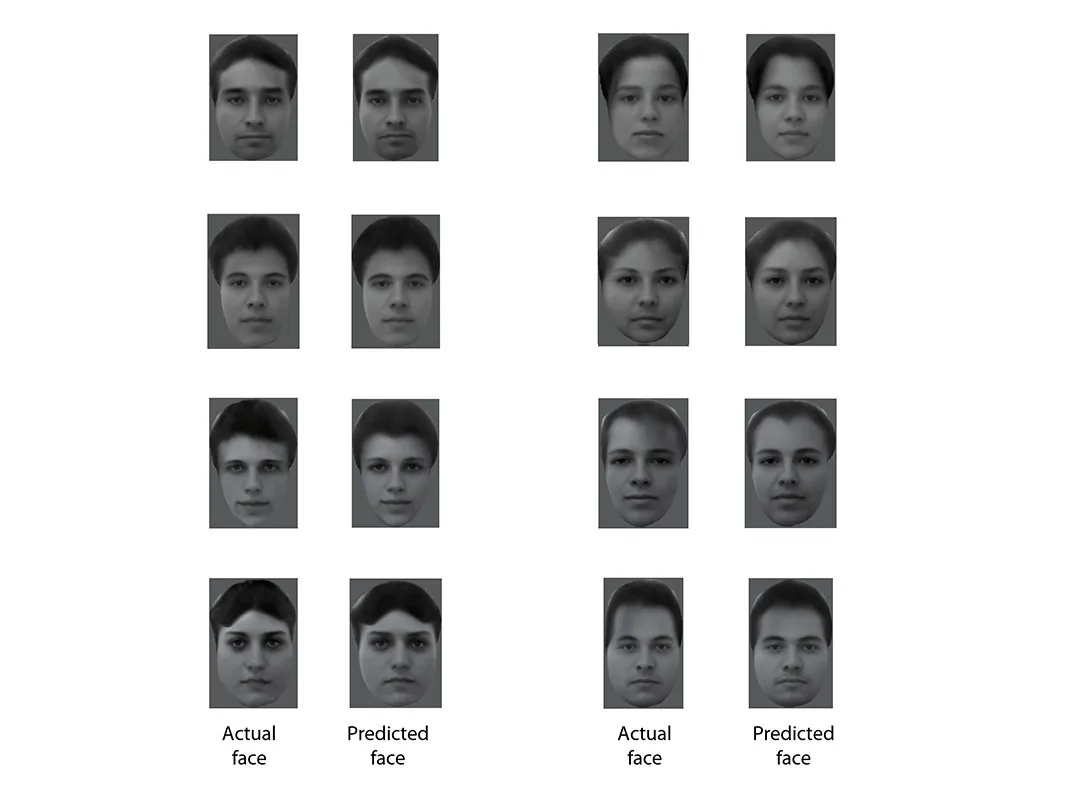

Understanding this pattern of neural firing allowed Chang to build an algorithm that reverse engineers the face a monkey is looking at from the firing of just 205 neurons, without knowing in advance which face the monkey was shown. Like a police sketch artist working with a witness to combine facial features, he took the features suggested by the activity of each individual neuron and combined them into a complete face. In nearly 70 percent of cases, people recruited through the crowdsourcing website Amazon Mechanical Turk judged the original face and the reconstructed face to be the same.
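A decoder of this kind can be sketched with ordinary least squares. The Python example below is a hypothetical simulation, not the study's code or data: it assumes each of 205 model neurons responds linearly (plus noise) to the 50 face features, fits a linear map from firing rates back to features on 2,000 simulated training faces, and then reconstructs the features of a face the decoder has not seen. The noise level, the random axes, and the function names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
N_NEURONS, N_DIMS = 205, 50  # counts taken from the article
N_TRAIN = 2000               # assumed: roughly the number of synthetic faces

# Hypothetical ground truth: each neuron responds linearly to its own axis, plus noise.
axes = rng.standard_normal((N_NEURONS, N_DIMS))

def simulate_rates(faces, noise=0.5):
    """Toy population response: one row of firing rates per face."""
    return faces @ axes.T + noise * rng.standard_normal((faces.shape[0], N_NEURONS))

train_faces = rng.standard_normal((N_TRAIN, N_DIMS))
train_rates = simulate_rates(train_faces)

# Fit a linear decoder (with an intercept column) mapping firing rates to face features.
X = np.hstack([train_rates, np.ones((N_TRAIN, 1))])
decoder, *_ = np.linalg.lstsq(X, train_faces, rcond=None)

def decode(rates):
    """Estimate face features from a population response."""
    return np.hstack([rates, np.ones((rates.shape[0], 1))]) @ decoder

# Reconstruct a face that was not used for fitting.
test_face = rng.standard_normal((1, N_DIMS))
estimate = decode(simulate_rates(test_face))
corr = np.corrcoef(test_face.ravel(), estimate.ravel())[0, 1]
print(f"correlation between true and decoded features: {corr:.3f}")
```

A linear read-out pairs naturally with the axis-style responses described earlier: if each neuron's rate is roughly a weighted sum of face features, recovering the features from many such rates is itself a linear problem.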

"People always say a picture is worth a thousand words," co-author neuroscientist Doris Tsao said in a press release. "But I like to say that a picture of a face is worth about 200 neurons."

Bevil Conway, a neuroscientist at the National Eye Institute, said the new study impressed him.

"It provides a principled account for how face recognition comes about, using data from real neurons," says Conway, who was not involved in the study. He added that such work can help us develop better facial recognition technologies, which are currently notoriously flawed. Sometimes the result is laughable, but at other times the algorithms these programs rely on have been found to have serious racial biases.

In the future, Chang sees his work potentially being used in police investigations to build profiles of suspects from the memories of witnesses who saw them. Ed Connor, a neuroscientist at Johns Hopkins University, envisions software that could adjust faces along these 50 characteristics. Such a program, he says, could allow witnesses and police to fine-tune faces using the characteristics humans rely on to distinguish them, like a system of 50 dials that witnesses could turn to morph a face into the one they remember.

"Instead of people describing what others look like," Chang speculates, "we could actually directly decode their thoughts."

“The authors deserve kudos for helping to drive this important area forward,” says Jim DiCarlo, a biomedical engineer at MIT who researches object recognition in primates. However, DiCarlo, who was not involved in the study, thinks the researchers don’t adequately demonstrate that just 200 neurons are enough to discriminate between faces. In his own research, he notes, he has found that it takes roughly 50,000 neurons to distinguish objects under more realistic conditions, though those conditions are still less realistic than seeing faces in the real world.

Based on that work, DiCarlo estimates that recognizing faces would require somewhere between 2,000 and 20,000 neurons just to distinguish them roughly. “If the authors believe that faces are encoded by nearly three orders of magnitude less neurons, that would be remarkable,” he says.

“Overall, this work is a nice addition to the existing literature with some great analyses,” DiCarlo concludes, “but our field is still not yet at a complete, model-based understanding of the neural code for faces.”

Connor, who also wasn't involved in the new research, hopes the study will inspire new work among neuroscientists. Too often, he says, this branch of science has dismissed the more complex workings of the brain as akin to the “black boxes” of computer deep neural networks: so messy that it is impossible to understand how they work.

“It’s hard to imagine anybody ever doing a better job of understanding how face identity is encoded in the brain,” says Connor of the new study. “It will encourage people to look for sometimes specific and complex neural codes.” He’s already discussed with Tsao the possibility of researching how the brain interprets facial expressions.

“Neuroscience never gets more interesting than when it is showing us what are the physical events in the brain that give rise to specific experiences,” Connor says. “To me, this is the Holy Grail.”