Robot Babies

Can scientists build a machine that learns as it goes and plays well with others?

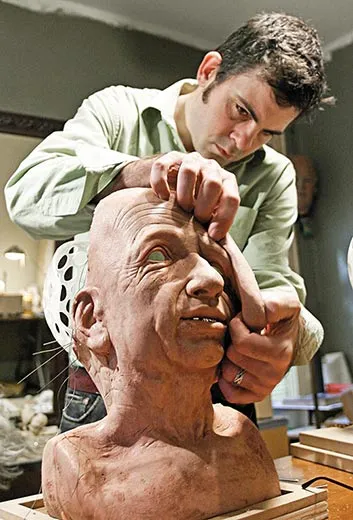

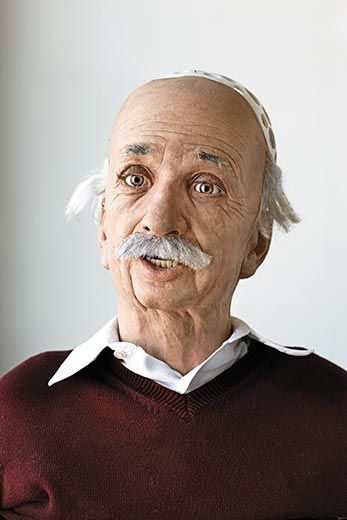

Einstein the robot has enchanting eyes, the color of honey in sunlight. They are fringed with drugstore-variety false eyelashes and framed by matted gray brows made from real human hair. "What is that, makeup?" a visiting engineer asks, and, indeed, on closer examination I can see black eyeliner smeared beneath Einstein's lower lids, à la David Bowie in 1971. The machine's gaze is expressive—soulful, almost.

David Hanson, Einstein's creator, is visiting from Texas to help scientists here at the University of California at San Diego (UCSD) prepare the robot for an upcoming conference. Hanson switches on the robot (really just a head and neck) and runs it through some of its dozens of expressions. Its lips purse. Its brow furrows. Its eyes widen as though in horror, then scrunch mirthfully as it flashes a grin. The 27 motors in the face make a wretched grinding sound, and when the mouth opens, I see a tangle of wires where the prodigious brain should be. Einstein's white wig is missing and the skin of its neck hangs in flaps, because its shoulders, made of plastic, got shattered in shipping.

Still, the effect is so lifelike that even jaded graduate students have stopped by to stroke the robot's wrinkled cheek, which is encased in a substance called Frubber—"face rubber," Hanson explains—that feels buttery soft and cold as a cadaver. Engineers working at night have been so unnerved by Einstein's presence they asked that it be covered up.

That delights Javier Movellan, leader of the university group that recently spent $70,000 in research grants to buy the robotic head from Hanson. Einstein will eventually be able to track people's movements with cameras that twinkle behind its pupils and judge their gender, age (well, whether they're over or under 40), if they wear glasses or have facial hair and when they're smiling. The robot is also equipped with conversational software and can hold forth while staring deep into a person's eyes. "Do you know any jokes?" Hanson asks the machine.

Einstein: Why did the chicken cross the road?

Hanson: Tell me about artificial intelligence.

Einstein: I feel real, not artificial.

Hanson: I want to hear more about the future of robotics.

Einstein: Robots will become ever more like animals and people. Robots will continue to get more amazing and cool.

Einstein is the product of a remarkable collaboration. Hanson, a robot designer and the founder of the Dallas-based firm Hanson Robotics, has used classical sculpting techniques to animate robotic likenesses of Philip K. Dick, author of Do Androids Dream of Electric Sheep? (the basis of the apocalyptic movie Blade Runner), his own wife (he had to use a male skull model, "which masculinized her a bit") and more than a dozen other people. Movellan, a psychologist and software pioneer who runs UCSD's Machine Perception Laboratory, develops technology that approximates human senses. Einstein is, at present, a research tool to explore how a machine can perceive and react to human facial expressions; that capacity could later have many practical applications in entertainment and education, alerting the robot teachers of the future, say, that their human pupils are daydreaming.

For the most part, though, the intelligence I perceived in Einstein—its intense eye contact, its articulate soliloquies—was an illusion. Its answers to questions were canned and its interpretive powers were extremely limited. In short, Einstein is no Einstein. Overall, robots can do amazing things—play the violin, dismantle bombs, fire missiles, diagnose diseases, tend tomato plants, dance—but they sorely lack the basics. They recite jokes but don't get them. They can't summarize a movie. They can't tie their shoelaces. Because of such shortcomings, whenever we encounter them in the flesh, or Frubber, as it were, they are bound to disappoint.

Rodney Brooks, an M.I.T. computer scientist who masterminded a series of robotics innovations in the 1990s, said recently that for a robot to have truly humanlike intelligence, it would need the object-recognition skills of a 2-year-old child, the language capabilities of a 4-year-old, the manual dexterity of a 6-year-old and the social understanding of an 8-year-old. Experts say they are far from reaching those goals. In fact, the problems that now confound robot programmers are puzzles that human infants often solve before their first birthday. How to reach for an object. How to identify a few individuals. How to tell a stuffed animal from a bottle of formula. In babies, these skills are not preprogrammed, as were the perceptual and conversational tricks Einstein showed me, but rather are cultivated through interactions with people and the environment.

But what if a robot could develop that way? What if a machine could learn like a child, as it goes along? Armed with a nearly $3 million National Science Foundation grant, Movellan is now tackling that very question, leading a team of cognitive scientists, engineers, developmental psychologists and roboticists from UCSD and beyond. Their experiment—called Project One, because it focuses on the first year of development—is a wildly ambitious effort to crack the secrets of human intelligence. It involves, their grant proposal says, "an integrated system...whose sensors and actuators approximate the levels of complexity of human infants."

In other words, a baby robot.

The word "robot" hit the world stage in 1921, in the Czech science fiction writer Karel Čapek's play Rossum's Universal Robots, about a factory that creates artificial people. The root is the Czech robota, for serf labor or drudgery. Broadly understood, a robot is a machine that can be programmed to interact with its surroundings, usually to do physical work.

We may associate robots with artificial intelligence, which uses powerful computers to solve big problems, but robots are not usually designed with such lofty aspirations; we might dream of Rosie, the chatty robot housekeeper on "The Jetsons," but for now we're stuck with Roomba, the disk-shaped, commercially available autonomous vacuum cleaner. The first industrial robot, called Unimate, was installed in a General Motors factory in 1961 to stack hot pieces of metal from a die-casting machine. Today, most of the world's estimated 6.5 million robots perform similarly mundane industrial jobs or domestic chores, though 2 million plug away at more whimsical tasks, like mixing cocktails. "Does [the robot] prepare the drink with style or dramatic flair?" ask the judging guidelines for the annual RoboGames bartending competition, held in San Francisco this summer. "Can it prepare more than a martini?"

Now imagine a bartender robot that could waggle its eyebrows sympathetically as you pour out the story of your messy divorce. Increasingly, the labor we want from robots involves social fluency, conversational skill and a convincing humanlike presence. Such machines, known as social robots, are on the horizon in health care, law enforcement, child care and entertainment, where they might work in concert with other robots and human supervisors. Someday, they might assist the blind; they've already coached dieters in an experiment in Boston. The South Korean government has said it aims to have a robot working in every home by 2020.

Part of the new emphasis on social functioning reflects the changing economies of the richest nations, where manufacturing has declined and service industries are increasingly important. Not coincidentally, societies with low birthrates and long life expectancies, notably Japan, are pushing hardest for social robots, which may be called upon to stand in for young people and perform a wide variety of jobs, including caring for and comforting the old.

Some scientists working on social robots, like Movellan and his team, borrow readily from developmental psychology. A machine might acquire skills as a human child does by starting with a few basic tasks and gradually constructing a more sophisticated competence—"bootstrapping," in scientific parlance. In contrast to preprogramming a robot to perform a fixed set of actions, endowing a robot computer with the capacity to acquire skills gradually in response to the environment might produce smarter, more human robots.

"If you want to build an intelligent system, you have to build a system that becomes intelligent," says Giulio Sandini, a bioengineer specializing in social robots at the Italian Institute of Technology in Genoa. "Intelligence is not only what you know but how you learn more from what you know. Intelligence is acquiring information, a dynamic process."

"This is the brains!" Movellan shouted over the din of cyclone-strength air conditioners. He was pointing at a stack of computers about ten feet tall and six feet deep, sporting dozens of blinking blue lights and a single ominous orange one. Because the Project One robot's metal cranium will not be able to hold all the information-processing hardware that it will need, the robot will be connected by fiber-optic cables to these computers in the basement of a building on the UCSD campus in La Jolla. The room, filled with towering computers that would overheat if the space weren't kept as cold as a meat locker, looks like something out of 2001: A Space Odyssey.

As Einstein could tell you, Movellan is over 40, bespectacled and beardless. But Einstein has no way of knowing that Movellan has bright eyes and a bulky chin, is the adoring father of an 11-year-old daughter and an 8-year-old son and speaks English with an accent reflecting his Spanish origins.

Movellan grew up amid the wheat fields of Palencia, Spain, the son of an apple farmer. Surrounded by animals, he spent endless hours wondering how their minds worked. "I asked my mother, 'Do dogs think? Do rats think?'" he says. "I was fascinated by things that think but have no language."

He also acquired a farm boy's knack for working with his hands; he recalls that his grandmother scolded him for dissecting her kitchen appliances. Enamored of the nameless robot from the 1960s television show "Lost in Space," he built his first humanoid when he was about 10, using "food cans, light bulbs and a tape recorder," he says. The robot, which had a money slot, would demand the equivalent of $100. As Movellan anticipated, people usually forked over much less. "That's not $100!" the robot's prerecorded voice would bellow. Ever the mischievous tinkerer, he drew fire 30 years later from his La Jolla homeowners association for welding robots in his garage.

He got his PhD in developmental psychology at the University of California at Berkeley in 1989 and moved on to Carnegie Mellon University, in Pittsburgh, to conduct artificial intelligence research. "The people I knew were not really working on social robots," he says. "They were working on vehicles to go to Mars. It didn't really appeal to me. I always felt robotics and psychology should be more together than they originally were." It was after he went to UCSD in 1992 that he began working on replicating human senses in machines.

A turning point came in 2002, when he was living with his family in Kyoto, Japan, and working in a government robotics lab to program a long-armed social robot named Robovie. He hadn't yet had much exposure to the latest social robots and initially found them somewhat annoying. "They would say things like, 'I'm lonely, please hug me,'" Movellan recalls. But the Japanese scientists warned him that Robovie was special. "They would say, 'you'll feel something.' Well, I dismissed it—until I felt something. The robot kept talking to me. The robot looked up at me and, for a moment, I swear this robot was alive."

Then Robovie enfolded him in a hug and suddenly—"magic," says Movellan. "This is something I was unprepared for from a scientific point of view. This intense feeling caught me off guard. I thought, Why is my brain put together so that this machine got me? Magic is when the robot is looking at things and you reflexively want to look in the same direction as the robot. When the robot is looking at you instead of through you. It's a feeling that comes and goes. We don't know how to make it happen. But we have all the ingredients to make it happen."

Eager to understand this curious reaction, Movellan introduced Robovie to his 2-year-old son's preschool class. But there the robot cast a different spell. "It was a big disaster," Movellan remembers, shaking his head. "It was horrible. It was one of the worst days of my life." The toddlers were terrified of Robovie, who was about the size of a 12-year-old. They ran away from it screaming.

That night, his son had a nightmare. Movellan heard him muttering Japanese in his sleep: "Kowai, kowai." Scary, scary.

Back in California, Movellan assembled, in consultation with his son, a kid-friendly robot named RUBI that was more appropriate for visits to toddler classrooms. It was an early version of the smiling little machine that stands sentinel in the laboratory today, wearing a jaunty orange Harley-Davidson bandanna and New Balance sneakers, its head swiveling in an inquisitive manner. It has coasters for eyes and a metal briefcase for a body that snaps open to reveal a bellyful of motors and wires.

"We have learned a lot from this little baby," Movellan said, giving the robot an affectionate pat on its square cheek.

For the past several years he has embedded RUBI at a university preschool to study how the toddlers respond. Various versions of RUBI (some of them autonomous and others puppeteered by humans) have performed different tasks. One taught vocabulary words. Another accompanied the class on nature walks. (That model was not a success; with its big wheels and powerful motors, RUBI swelled to an intimidating 300 pounds. The kids were wary, and Movellan was, too.)

The project has had its triumphs—the kids improved their vocabularies playing word games displayed on RUBI's stomach screen—but there have been setbacks. The children destroyed a fancy robotic arm that had taken Movellan and his students three months to build, and RUBI's face detector consistently confused Thomas the Tank Engine with a person. Programming in incremental fixes for these problems proved frustrating for the scientists. "To survive in a social environment, to sustain interaction with people, you can't possibly have everything preprogrammed," Movellan says.

Those magic moments when a machine seems to share in our reality can sometimes be achieved by brute computing force. For instance, Einstein's smile-detection system, a version of which is also used in some cameras, was shown tens of thousands of photographs of faces that had been marked "smiling" or "not smiling." After cataloging those images and discerning a pattern, Einstein's computer can "see" whether you are smiling, and to what degree. When its voice software is cued to compliment your pretty smile or ask why you look sad, you might feel a spark of unexpected emotion.
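
For readers who want to see the idea in miniature, here is a sketch of learning from labeled examples. It is not Einstein's actual software: the two facial measurements, the six hand-labeled "photos" and the function names are all invented for illustration, and a simple logistic-regression model stands in for whatever the real system uses.

```python
import math

def smile_degree(features, weights, bias):
    """Return a score between 0 and 1: how strongly the model thinks this is a smile."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

def train_smile_classifier(examples, labels, epochs=500, lr=0.5):
    """Fit a logistic-regression model with stochastic gradient descent.

    examples: feature tuples (hypothetical measurements, not real image data)
    labels:   1 for "smiling", 0 for "not smiling", supplied by a human
    """
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            error = smile_degree(x, weights, bias) - y
            # Nudge each weight to shrink the gap between guess and label.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

# Hypothetical features per photo: (mouth-corner lift, cheek raise), each 0..1.
photos = [(0.9, 0.8), (0.8, 0.7), (0.7, 0.9),   # marked "smiling"
          (0.2, 0.1), (0.1, 0.3), (0.3, 0.2)]   # marked "not smiling"
marks = [1, 1, 1, 0, 0, 0]

weights, bias = train_smile_classifier(photos, marks)
grin = smile_degree((0.85, 0.75), weights, bias)
frown = smile_degree((0.15, 0.20), weights, bias)
print(f"grin score: {grin:.2f}, frown score: {frown:.2f}")
```

The point of the sketch is the dependence on the human-supplied marks: every example arrives already labeled, and the model only learns to reproduce those labels, along with a degree of confidence, on new faces. A real detector would need vastly more examples than six.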

But this laborious analysis of spoon-fed data—called "supervised learning"—is nothing like the way human babies actually learn. "When you're little nobody points out ten thousand faces and says 'This is happy, this is not happy, this is the left eye, this is the right eye,'" said Nicholas Butko, a PhD student in Movellan's group. (As an undergraduate, he was sentenced to labeling a seemingly infinite number of photographs for a computer face-recognition system.) Yet babies are somehow able to glean what a human face is, what a smile signifies and that a certain pattern of light and shadow is Mommy.

To show me how the Project One robot might learn like an infant, Butko introduced me to Bev, actually BEV, as in Baby's Eye View. I had seen Bev slumped on a shelf above Butko's desk without realizing that the Toys 'R' Us-bought baby doll was a primitive robot. Then I noticed the camera planted in the middle of Bev's forehead, like a third eye, and the microphone and speaker under its purple T-shirt, which read, "Have Fun."

In one experiment, the robot was programmed to monitor noise in a room that people periodically entered. They'd been taught to interact with the robot, which was tethered to a laptop. Every now and then, Bev emitted a babylike cry. Whenever someone made a sound in response, the robot's camera snapped a picture. The robot sometimes took a picture if it heard no sound in response to its cry, whether or not there was a person in the room. The robot processed those images and quickly discerned that some pictures—usually those taken when it heard a response—included objects (faces and bodies) not present in other pictures. Although the robot had previously been given no information about human beings (not even that such things existed), it learned within six minutes how to tell when someone was in the room. In a remarkably short time, Bev had "discovered" people.
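
The logic of that experiment can be sketched in a few lines. This is an illustration under loud assumptions, not the lab's code: each snapshot is reduced to a set of opaque visual pattern IDs (the names "v1" through "v4" are made up), and the function simply asks which patterns turn up much more often in pictures taken after a response than in pictures taken after silence.

```python
from collections import Counter

def discover_response_features(snapshots, min_gap=0.4):
    """Find visual patterns that co-occur with hearing a response.

    snapshots: list of (pattern_ids, heard_response) pairs. The pattern IDs
    are hypothetical stand-ins for whatever the image processing extracts;
    the robot is told nothing about what any of them mean.
    """
    seen_with, seen_without = Counter(), Counter()
    n_with = n_without = 0
    for patterns, heard in snapshots:
        if heard:
            n_with += 1
            seen_with.update(set(patterns))
        else:
            n_without += 1
            seen_without.update(set(patterns))
    if n_with == 0 or n_without == 0:
        return set()
    # Keep patterns far more frequent after a response than after silence.
    return {p for p in seen_with
            if seen_with[p] / n_with - seen_without[p] / n_without >= min_gap}

def someone_present(patterns, discovered):
    """Guess that a person is in view if any discovered pattern appears."""
    return bool(discovered & set(patterns))

# Made-up data: v1 = something always in view, v2 = something often in view,
# v3 and v4 = patterns that happen to come from a person. The signal is noisy.
snapshots = [
    ({"v1", "v3"}, True),
    ({"v1", "v3", "v4"}, True),
    ({"v1", "v2", "v3"}, True),
    ({"v1", "v2"}, True),        # response heard, person out of frame
    ({"v1"}, False),
    ({"v1", "v2"}, False),
    ({"v1", "v3"}, False),       # person in view but silent
    ({"v1"}, False),
]

discovered = discover_response_features(snapshots)
print(discovered)
```

Nobody labels a single picture here. The only teacher is the coincidence between the robot's own cry and the sound that sometimes follows it, which is enough to single out the pattern that tends to mean a person is there.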

A similar process of "unsupervised learning" is at the heart of Project One. But Project One's robot will be much more physically sophisticated than Bev—it will be able to move its limbs, train its cameras on "interesting" stimuli and receive readings from sensors throughout its body—which will enable it to borrow more behavior strategies from real infants, such as how to communicate with a caregiver. For example, Project One researchers plan to study human babies playing peekaboo and other games with their mothers in a lab. Millisecond by millisecond, the researchers will analyze the babies' movements and reactions. This data will be used to develop theories and eventually programs to engineer similar behaviors in the robot.

It's even harder than it sounds; playing peekaboo requires a relatively nuanced understanding of "others." "We know it's a hell of a problem," says Movellan. "This is the kind of intelligence we're absolutely baffled by. What's amazing is that infants effortlessly solve it." In children, such learning is mediated by the countless connections that brain cells, or neurons, form with one another. In the Project One robot and others, the software itself is formulated to mimic "neural networks" like those in the brain, and the theory is that the robot will be able to learn new things virtually on its own.

The robot baby will be able to touch, grab and shake objects, and the researchers hope that it will be able to "discover" as many as 100 different objects that infants might encounter, from toys to caregivers' hands, and figure out how to manipulate them. The subtleties are numerous; it will need to figure out that, say, a red rattle and a red bottle are different things and that a red rattle and a blue rattle are essentially the same. The researchers also want the robot to learn to crawl and ultimately walk.

Perhaps the team's grandest goal is to give the robot the capacity to signal for a caregiver to retrieve an object beyond its grasp. Movellan calls this the "Vygotsky reach," after developmental psychologist Lev Vygotsky, who identified the movement—which typically occurs when a child is about a year old—as an intellectual breakthrough, a transition from simple sensory-motor intelligence to symbolic intelligence. If the scientists are successful, it will be the first spontaneous symbolic gesture by a robot. It will also be a curious role reversal—the robot commanding the human, instead of vice versa.

"That's a pretty important transition," says Jonathan Plucker, a cognitive scientist at Indiana University who studies human intelligence and creativity. Plucker had no prior knowledge of Project One and its goals, but he was fresh from watching the season finale of "Battlestar Galactica," which had left him leery of the quest to build intelligent robots. "My sense is that it wouldn't be hard to have a robot that reaches for certain types of objects," he says, "but it's a big leap to have a machine that realizes it wants to reach for something and uses another object, a caregiver, as a tool. That is a much, much more complex psychological process."

At present, the Project One robot is all brains. While the big computer hums in its air-conditioned cavern, the body is being designed and assembled in a factory in Japan. Construction is expected to take about nine months.

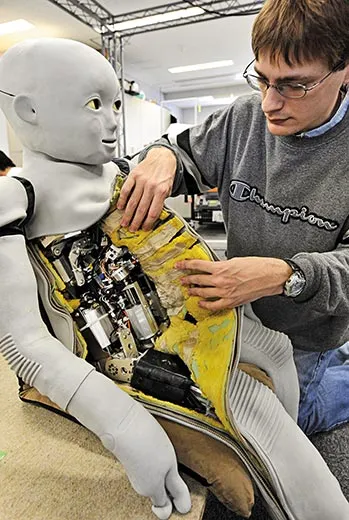

A prototype of the Project One robot body already exists, in the Osaka laboratory of Hiroshi Ishiguro, the legendary Japanese roboticist who, in addition to creating Robovie, fashioned a robotic double of himself, named Geminoid, as well as a mechanical twin of his 4-year-old daughter, which he calls "my daughter's copy." ("My daughter didn't like my daughter's copy," he told me over the phone. "Its movement was very like a zombie." Upon seeing it, his daughter—the original—cried.) Ishiguro's baby robot is called the Child-Robot with Biomimetic Body, or CB2 for short. If you search for "creepy robot baby" on YouTube, you can see clips of four-foot-tall CB2 in action. Its silicone skin has a grayish cast; its blank, black eyes dart back and forth. When first unveiled in 2007, it could do little more than writhe, albeit in a very babylike way, and make pathetic vowel sounds out of the tube of silicone that is its throat.

"It has this ghostly gaze," says Ian Fasel, a University of Arizona computer scientist and a former student of Movellan's who has worked on the Japanese project. "My friends who see it tell me to please put it out of its misery. It was often lying on the floor of the lab, flopping around. It gives you this feeling that it's struggling to be a real boy, but it doesn't know how."

When Movellan first saw CB2, last fall, as he was shopping around for a Project One body, he was dismayed by the lack of progress the Japanese scientists had made in getting it to move in a purposeful way. "My first impression was that there was no way we would choose that robot," Movellan recalls. "Maybe this robot is impossible to control. If you were God himself, could you control it?"

Still, he couldn't deny that the CB2 was an exquisite piece of engineering. There have been other explicitly childlike robots over the years—creations such as Babybot and Infanoid—but none approach CB2's level of realism. Its skin is packed with sensors to collect data. Its metal skeleton and piston-driven muscles are limber, like a person's, not stiff like most robots', and highly interconnected: if an arm moves, motors in the torso and elsewhere respond. In the end, Movellan chose CB2.

The body's human-ness would help the scientists develop more brainlike software, Movellan decided. "We could have chosen a robot that could already do a lot of the things we want it to do—use a standard robotic arm, for instance," Movellan says. "Yet we felt it was a good experiment in learning to control a more biologically inspired body that approximates how muscles work. Starting with an arm more like a real arm is going to teach us more."

The Project One team has requested tweaks in CB2's design, to build in more powerful muscles that Movellan hopes will give it the strength to walk on its own, which the Japanese scientists—who are busy developing a new model of their own—now realize the first CB2 will never do. Movellan is also doing away with the skin suit, which sometimes provides muddled readings, opting instead for a Terminator-like metal skeleton encased in clear plastic. ("You can always put clothes on," Movellan reasons.) He had hoped to make the robot small enough to cradle, but the Japanese designers told him that is currently impossible. The baby will arrive standing about three feet tall and weighing 150 pounds.

What a social robot's face should look like is a critical, and surprisingly difficult, decision. CB2's face is intended to be androgynous and abstract, but somehow it has tumbled into what robotics experts term the "uncanny valley," where a machine looks just human enough to be unsettling. The iCub, another precocious child-inspired robot being built by a pan-European team, looks more appealing, with cartoonish wide eyes and an endearing expression. "We told the designers to make it look like someone who needed help," says the Italian Institute of Technology's Sandini, who's leading the project. "Someone...a little sad."

When I met Movellan he seemed flummoxed by the matter of his robot's facial appearance: Should the features be skeletal or soft-tissue, like Einstein's? He was also pondering whether it would be male or female. "All my robots so far have been girls—my daughter has insisted," he explains. "Maybe it's time for a boy." Later, he and his co-workers asked Hanson to help design a face for the Project One robot, which will be named Diego. The "developmental android" will be modeled after a real child, the chubby-cheeked nephew of a researcher in Movellan's lab.

Though Movellan believes that a human infant is born with very little pre-existing knowledge, even he says it comes with needs: to be fed, warmed, napped and relieved of a dirty diaper. Those would have to be programmed into the robot, which quickly gets complicated. "Will this robot need to evacuate?" says John Watson, a University of California at Berkeley professor emeritus of psychology who is a Project One consultant. "Will the thing need sleep cycles? We don't know."

Others outside the project are skeptical that baby robots will reveal much about human learning, if only because a human grows physically as well as cognitively. "To mimic infant development, robots are going to have to change their morphology in ways that the technology isn't up to," says Ron Chrisley, a cognitive scientist at the University of Sussex in England. He says realistic human features are usually little more than clever distractions: scientists should focus on more basic models that teach us about the nature of intelligence. Human beings learned to fly, Chrisley notes, when we mastered aerodynamics, not when we fashioned realistic-looking birds. A socially capable robot might not resemble a human being any more than an airplane looks like a sparrow.

Maybe the real magic of big-eyed, round-faced robobabies is their ability to manipulate our own brains, says Hamid Ekbia, a cognitive science professor at Indiana University and the author of Artificial Dreams: The Quest for Non-Biological Intelligence. Infantilized facial features, he says, primarily tap into our attraction to cute kids. "These robots say more about us than they do about machines," says Ekbia. "When people interact with these robots, they get fascinated, but they read beneath the surface. They attribute qualities to the robot that it doesn't have. This is our disposition as human beings: to read more than there is."

Of course, Movellan would counter that such fascination is, in Project One's case, quite essential: to develop like a real child, the machine must be treated like one.

Each Project One researcher defines success differently. Some will declare victory if the robot learns to crawl or to identify basic objects. Watson says he would be grateful to simulate the first three months of development. Certainly, no one expects the robot to progress at the same rate as a child. Project One's timeline extends over four years, and it may take that long before the robot is exposed to people outside the lab—"caregivers" (read: undergrads) who will be paid to baby-sit. Lacking a nursery, the robot will be kept behind glass on a floor beneath Movellan's lab, accessible, for the time being, only to researchers.

As for Movellan, he hopes that the project will "change the way we see human development and bring a more computational bent to it, so we appreciate the problems the infant brain is solving." A more defined understanding of babies' brains might also give rise to new approaches to developmental disorders. "To change the questions that psychologists are asking—that to me is the dream," Movellan adds. "For now it is, how do you get its arm to work, the leg to work? But when we put the pieces together, things will really start to happen."

Before leaving the lab, I stop to bid goodbye to Einstein. All is not well with the robot. Its eye cameras have become obsessed with the glowing red exit sign over the workshop's door. Hanson switches the robot off and on; its movements are palsied; its eyes roll. Its German accent isn't working and the tinny-sounding conversational software seems to be on the fritz. Hanson peers into its eyes. "Hi there," he says. "Can you hear me? Are you listening?"

Einstein: (No response.)

Hanson: Let's get into the topic of compassion.

Einstein: I don't have good peripheral vision.

Einstein: (Continuing.) I am just a child. I have a lot to learn, like what it is to truly love.

Students working nearby are singing along to a radio blasting Tina Turner's "What's Love Got to Do With It," oblivious to Einstein's plight. For me, though, there is something almost uncomfortable about watching the robot malfunction, like seeing a stranger struggle with heavy suitcases. Does this count as magic?

On a worktable nearby, something catches my eye. It is a copy of a Renaissance-era portrait of Mary and the infant Jesus—Carlo Crivelli's Madonna con Bambino, the engineers say, which another robot in the room is using to practice analyzing images. The painting is the last thing I expect to see among the piles of tools and snarls of wires, but it occurs to me that building a humanoid robot is also a kind of virgin birth. The child in the painting is tiny but already standing on its own. Mary's eyes are downcast and appear troubled; the baby stretches one foot forward, as though to walk, and gazes up.

Staff writer Abigail Tucker last wrote for the magazine about narwhals.

This is San Francisco-based photographer Timothy Archibald's first assignment for Smithsonian.