When Did the Human Mind Evolve to What It Is Today?

Archaeologists are finding signs of surprisingly sophisticated behavior in the ancient fossil record


Archaeologists excavating a cave on the coast of South Africa not long ago unearthed an unusual abalone shell. Inside was a rusty red substance. After analyzing the mixture and nearby stone grinding tools, the researchers realized they had found the world’s earliest known paint, made 100,000 years ago from charcoal, crushed animal bones, iron-rich rock and an unknown liquid. The abalone shell was a storage container—a prehistoric paint can.

The find revealed more than just the fact that people used paints so long ago. It provided a peek into the minds of early humans. Combining materials to create a product that doesn’t resemble the original ingredients and saving the concoction for later suggests people at the time were capable of abstract thinking, innovation and planning for the future.

These are among the mental abilities that many anthropologists say distinguished humans, Homo sapiens, from other hominids. Yet researchers have no agreed-upon definition of exactly what makes human cognition so special.

“It’s hard enough to tell what the cognitive abilities are of somebody who’s standing in front of you,” says Alison Brooks, an archaeologist at George Washington University and the Smithsonian Institution in Washington, D.C. “So it’s really hard to tell for someone who’s been dead for half a million years or a quarter million years.”

Since archaeologists can’t administer psychological tests to early humans, they have to examine artifacts left behind. When new technologies or ways of living appear in the archaeological record, anthropologists try to determine what sort of novel thinking was required to fashion a spear, say, or mix paint or collect shellfish. The past decade has been particularly fruitful for finding such evidence. And archaeologists are now piecing together the patterns of behavior recorded in the archaeological record of the past 200,000 years to reconstruct the trajectory of how and when humans started to think and act like modern people.

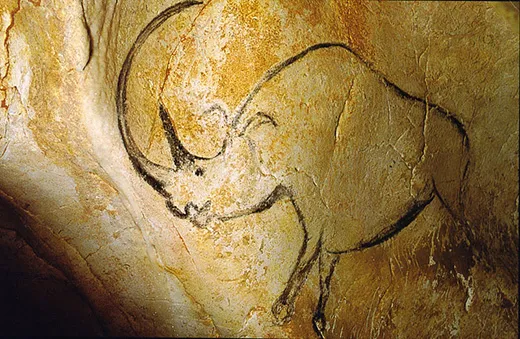

There was a time when they thought they had it all figured out. In the 1970s, the consensus was simple: Modern cognition evolved in Europe 40,000 years ago. That’s when cave art, jewelry and sculpted figurines all seemed to appear for the first time. The art was a sign that humans could use symbols to represent their world and themselves, archaeologists reasoned, and therefore probably had language, too. Neanderthals living nearby didn’t appear to make art, and thus symbolic thinking and language formed the dividing line between the two species’ mental abilities. (Today, archaeologists debate whether, and to what degree, Neanderthals were symbolic beings.)

One problem with this analysis was that the earliest fossils of modern humans came from Africa and dated to as many as 200,000 years ago—roughly 150,000 years before people were depicting bison and horses on cave walls in Spain. Richard Klein, a paleoanthropologist at Stanford University, suggested that a genetic mutation occurred 40,000 years ago and caused an abrupt revolution in the way people thought and behaved.

In the decades following, however, archaeologists working in Africa brought down the notion that there was a lag between when the human body evolved and when modern thinking emerged. “As researchers began to more intensely investigate regions outside of Europe, the evidence of symbolic behavior got older and older,” says archaeologist April Nowell of the University of Victoria in Canada.

For instance, artifacts recovered over the past decade in South Africa (such as pigments made from red ochre, perforated shell beads and ostrich shells engraved with geometric designs) have pushed back the origins of symbolic thinking to more than 70,000 years ago, and in some cases, to as early as 164,000 years ago. Now many anthropologists agree that modern cognition was probably in place when Homo sapiens emerged.

“It always made sense that the origins of modern human behavior, the full assembly of modern uniqueness, had to occur at the origin point of the lineage,” says Curtis Marean, a paleoanthropologist at Arizona State University in Tempe.

Marean thinks symbolic thinking was a crucial change in the evolution of the human mind. “When you have that, you have the ability to develop language. You have the ability to exchange recipes of technology,” he says. It also aided the formation of extended, long-distance social and trading networks, which other hominids such as Neanderthals lacked. These advances enabled humans to spread into new, more complex environments, such as coastal locales, and eventually across the entire planet. “The world was their oyster,” Marean says.

But symbolic thinking may not account for all of the changes in the human mind, says Thomas Wynn, an archaeologist at the University of Colorado. Wynn and his colleague, University of Colorado psychologist Frederick Coolidge, suggest that advanced "working memory" was the final critical step toward modern cognition.

Working memory allows the brain to retrieve, process and hold in mind several chunks of information all at one time to complete a task. A particularly sophisticated kind of working memory “involves the ability to hold something in attention while you’re being distracted,” Wynn says. In some ways, it’s kind of like multitasking. And it’s needed in problem solving, strategizing, innovating and planning. In chess, for example, the brain has to keep track of the pieces on the board, anticipate the opponent’s next several steps and prepare (and remember) countermoves for each possible outcome.

Finding evidence of this kind of cognition is challenging because humans don’t use advanced working memory all that much. “It requires a lot of effort,” Wynn says. “If we don’t have to use it, we don’t.” Instead, during routine tasks, the brain is sort of on autopilot, like when you drive your car to work. You’re not really thinking about it. Based on frequency alone, behaviors requiring working memory are less likely to be preserved than common activities that don’t need it, such as making simple stone choppers and handaxes.

Yet there are artifacts that do seem to relate to advanced working memory. Tools composed of separate pieces, like a hafted spear or a bow and arrow, are examples that date to more than 70,000 years ago. But the most convincing example may be animal traps, Wynn says. At South Africa’s Sibudu cave, Lyn Wadley, an archaeologist at the University of the Witwatersrand, has found clues that humans were hunting large numbers of small, and sometimes dangerous, forest animals, including bush pigs and diminutive antelopes called blue duikers. The only plausible way to capture such critters was with snares and traps.

With a trap, you have to think up a device that can snag and hold an animal and then return later to see whether it worked. “That’s the kind of thing working memory does for us,” Wynn says. “It allows us to work out those kinds of problems by holding the necessary information in mind.”

It may be too simple to say that symbolic thinking, language or working memory is the single thing that defines modern cognition, Marean says. And there still could be important components that haven’t yet been identified. What’s needed now, Wynn adds, is more experimental archaeology. He suggests bringing people into a psych lab to evaluate what cognitive processes are engaged when participants make and use the tools and technology of early humans.

Another area that needs more investigation is what happened after modern cognition evolved. The pattern in the archaeological record shows a gradual accumulation of new and more sophisticated behaviors, Brooks says. Making complex tools, moving into new environments, engaging in long-distance trade and wearing personal adornments didn’t all show up at once at the dawn of modern thinking.

The appearance of a slow and steady buildup may just be a consequence of the quirks of preservation. Organic materials like wood often decompose without a trace, so some signs of behavior may be too ephemeral to find. It’s also hard to spot new behaviors until they become widely adopted, so archaeologists are unlikely to ever locate the earliest instances of novel ways of living.

Complex lifestyles might not have been needed early on in the history of Homo sapiens, even if humans were capable of sophisticated thinking. Sally McBrearty, an archaeologist at the University of Connecticut in Storrs, points out in the 2007 book Rethinking the Human Revolution that certain developments might have been spurred by the need to find additional resources as populations expanded. Hunting and gathering new types of food, such as blue duikers, required new technologies.

Some see a slow progression in the accumulation of knowledge, while others see modern behavior evolving in fits and starts. Archaeologist Francesco d’Errico of the University of Bordeaux in France suggests certain advances show up early in the archaeological record only to disappear for tens of thousands of years before these behaviors, for whatever reason, get permanently incorporated into the human repertoire about 40,000 years ago. “It’s probably due to climatic changes, environmental variability and population size,” d’Errico says.

He notes that several tool technologies and aspects of symbolic expression, such as pigments and engraved artifacts, seem to disappear after 70,000 years ago. The timing coincides with a global cold spell that made Africa drier. Populations probably dwindled and fragmented in response to the climate change. Innovations might have been lost in a prehistoric version of the Dark Ages. And various groups probably reacted in different ways depending on cultural variation, d’Errico says. “Some cultures for example are more open to innovation.”

Perhaps the best way to settle whether the buildup of modern behavior was steady or punctuated is to find more archaeological sites to fill in the gaps. There are only a handful of sites, for example, that cover the beginning of human history. “We need those [sites] that date between 125,000 and 250,000 years ago,” Marean says. “That’s really the sweet spot.”

Erin Wayman writes Smithsonian.com's Hominid Hunting blog.
