Art Project Shows Racial Biases in Artificial Intelligence System

ImageNet Roulette reveals how little-explored classification methods are yielding ‘racist, misogynistic and cruel results’


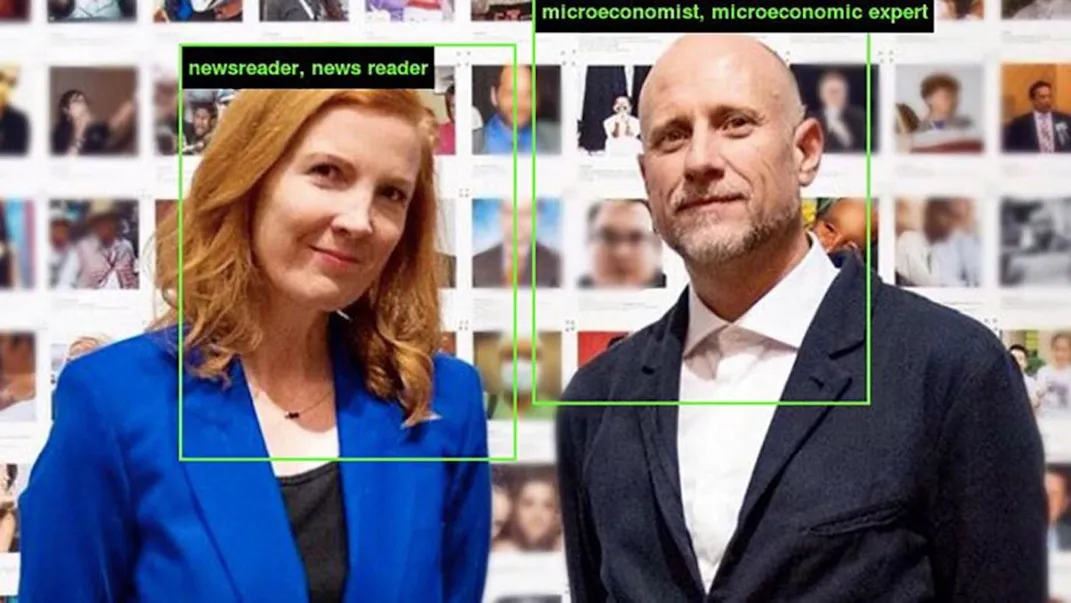

Some of the labels proposed by ImageNet Roulette—an artificial intelligence classification tool created by artist Trevor Paglen and A.I. researcher Kate Crawford—are logical. A photograph of John F. Kennedy, for example, yields a suggestion of “politician,” while a snapshot of broadcast journalist Diane Sawyer is identified as “newsreader.” But not all tags are equal. After Tabong Kima, 24, uploaded a photograph of himself and a friend to the portal, he noted that ImageNet Roulette labeled him as “wrongdoer, offender.”

“I might have a bad sense of humor,” Kima, who is African-American, wrote on Twitter, “but I don’t think this [is] particularly funny.”

Such “racist, misogynistic and cruel results” were exactly what Paglen and Crawford wanted to reveal with their tool.

“We want to show how layers of bias and racism and misogyny move from one system to the next,” Paglen tells the New York Times’ Cade Metz. “The point is to let people see the work that is being done behind the scenes, to see how we are being processed and categorized all the time.”

No matter what kind of image I upload, ImageNet Roulette, which categorizes people based on an AI that knows 2500 tags, only sees me as Black, Black African, Negroid or Negro. Some of the other possible tags, for example, are “Doctor,” “Parent” or “Handsome.” pic.twitter.com/wkjHPzl3kP

— Lil Uzi Hurt (@lostblackboy) September 18, 2019

The duo’s project spotlighting artificial intelligence’s little-explored classification methods draws on more than 14 million photographs included in ImageNet, a database widely used to train artificial intelligence systems. Launched by researchers at Stanford University in 2009, the data set teaches A.I. to analyze and classify objects, from dogs to flowers and cars, as well as people. According to artnet News’ Naomi Rea, the labels used to teach A.I. were, in turn, supplied by lab staff and crowdsourced workers; by categorizing presented images in terms of race, gender, age and character, these individuals introduced “their own conscious and unconscious opinions and biases” into the algorithm.

Certain subsets outlined by ImageNet are relatively innocuous: for example, scuba diver, welder, Boy Scout, flower girl and hairdresser. Others—think bad person, adulteress, convict, pervert, spinster, jezebel and loser—are more charged. Many feature explicitly racist or misogynistic terms.

As Alex Johnson reports for NBC News, social media users noticed a recurring theme among ImageNet Roulette’s classifications: While the program identified white individuals largely in terms of occupation or other functional descriptors, it often classified those with darker skin solely by race. A man who uploaded multiple snapshots of himself in varying attire and settings was consistently labeled “black.” Another Twitter user who inputted a photograph of Democratic presidential candidates Andrew Yang and Joe Biden found that the former was erroneously identified as “Buddhist,” while the latter was simply deemed “grinner.”

“ImageNet is an object lesson, if you will, in what happens when people are categorized like objects,” Paglen and Crawford write in an essay accompanying the project.

Shortly after ImageNet Roulette went viral, the team behind the original database announced plans to remove 600,000 images featured in its “people” category. Per a statement, these pictures, representing more than half of all “people” photographs in the dataset, include those classified as “unsafe” (offensive regardless of context) or “sensitive” (potentially offensive depending on context).

Following ImageNet’s reversal, Paglen and Crawford said they welcomed the database’s “recognition of the problem” despite disagreeing on how to approach the issue moving forward.

“ImageNet Roulette has made its point,” they wrote, “... and so as of Friday, September 27th, 2019, we’re taking it off the internet.”

The tool will remain accessible as a physical art installation at Milan’s Fondazione Prada Osservatorio through February 2020.
